To avoid angering the almighty algorithm, people are creating a new vocabulary

6 July 2022 – If you’ve spent any time on social media (especially LinkedIn) discussing the major issues of the day – abortion, gun control, Ukraine, etc. – you know the danger of ending up suspended or blocked. I’ve been in LinkedIn Jail twice for violating LinkedIn policy – once for using a George Bernard Shaw quote, and once for “inciting violence” with this graphic:

LinkedIn is notorious because, as I explained earlier this year, it is stocked with very organized Russian and rightwing activists, and Microsoft (owner of LinkedIn) removes content almost immediately upon complaint and then delays investigations for weeks. Almost all of the *forbidden* content is irony, humor and playful intelligence (I hang out with a very smart crowd). Scores of my LinkedIn mates have had their wrists slapped for apparently heinous transgressions. But we wear these as badges of honor – and we call in our attorneys and file letters with LinkedIn, which usually lifts any ban or suspension within 48 hours.

But I’m reminded of the reality of social media, which is comparable to going to a nightclub. You can’t choose the music, you can’t alter the decor, you can’t vet the clientele. Your only choice is … do I want to dance? It’s like that old show The Outer Limits: “There is nothing wrong with your television. We control the horizontal. We control the vertical…”

And so, once again, we are ruled by an algorithm. It has become “Snowflake Central” because the artificial intelligence is culturally challenged and so inherently does not get irony or anything subtle. Everything is black or white, judged from a moribund cultural reference point. But there are workarounds. I had a long piece on abortion, but when I wrote about “rape” (a forbidden word, normally flagged) I wrote “r*pe” – because a friend of mine at LinkedIn said the algorithm does not pick up stuff like that.

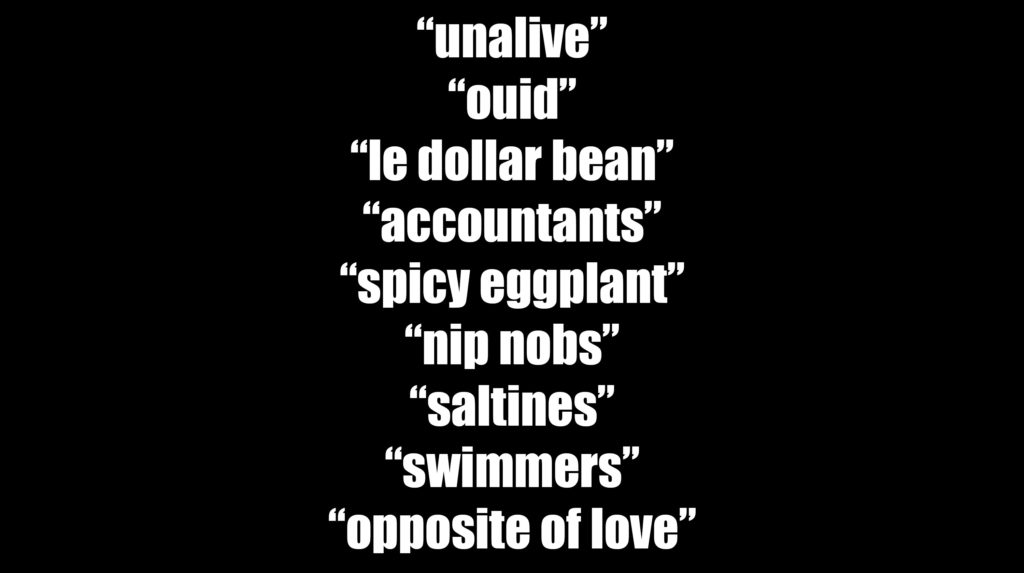

Or my favorite. The word “kill” will also (normally) get flagged. So you’ll see stuff like “the bad guy unalived his enemy”.

Yep. Algorithms are causing human language to reroute around words in real time. “Algospeak” is becoming increasingly common across the Internet as people seek to bypass content moderation filters on social media platforms such as LinkedIn, Instagram, TikTok, Twitch and, to some degree, YouTube.

Algospeak refers to code words or turns of phrase users have adopted in an effort to create a brand-safe lexicon that will avoid getting their posts removed or down-ranked by content moderation systems. For instance, in many online chats and posts and videos, it’s common to say “unalive” rather than “dead,” as I noted above. In a similar vein, you’ll see “SA” instead of “sexual assault,” or “spicy eggplant” instead of “vibrator.”

But this is not new. As I wrote last year, as the pandemic pushed more people to communicate and express themselves online, social media platforms began creating more sophisticated algorithmic content moderation systems, which have had an unprecedented impact on the words we choose – particularly on TikTok. As my friend Ernie Smith (a master wordsmith) noted, it gave rise to a new form of “internet-driven Aesopian language”.

TikTok is different. Unlike other mainstream social platforms, the primary way content is distributed on TikTok is through an algorithmically curated “For You” page; having followers doesn’t guarantee people will see your content. This shift has led average users to tailor their videos primarily toward the algorithm, rather than a following, which means abiding by content moderation rules is more crucial than ever.

Last year I wrote a monograph “TikTok and the Algorithmic Revolution” for my media clients. You can read a good chunk of it for free by clicking here.

When the pandemic broke out, people on TikTok and other apps began referring to it as the “Backstreet Boys reunion tour” or calling it the “panini” or “panda express” as platforms down-ranked videos mentioning the pandemic by name in an effort to combat misinformation. When young people began to discuss struggling with mental health, they talked about “becoming unalive” in order to have frank conversations about suicide without algorithmic punishment. Sex workers, who have long been censored by moderation systems, refer to themselves on TikTok as “accountants” and use the corn emoji as a substitute for the word “porn.”

As discussions of major events are filtered through algorithmic content delivery systems, more users are bending their language. Recently, in discussing the invasion of Ukraine, people on YouTube and TikTok have used the sunflower emoji to signify the country. When encouraging fans to follow them elsewhere, users will say “blink in lio” for “link in bio.” And as Taylor Lorenz (another wordsmith I follow) noted:

Euphemisms are especially common in radicalized or harmful communities. Pro-anorexia eating disorder communities have long adopted variations on moderated words to evade restrictions. One paper from the School of Interactive Computing at the Georgia Institute of Technology found that the complexity of such variants even increased over time. Last year, anti-vaccine groups on Facebook began changing their names to “dance party” or “dinner party,” and anti-vaccine influencers on Instagram used similar code words, referring to vaccinated people as “swimmers.”

Of course, tailoring language to avoid scrutiny predates the Internet. Many religions have avoided uttering the devil’s name lest they summon him, while people living in repressive regimes developed code words to discuss taboo topics. Early Internet users used alternate spelling or “leetspeak” to bypass word filters in chat rooms, image boards, online games and forums. But algorithmic content moderation systems are more pervasive on the modern Internet, and often end up silencing marginalized communities and important discussions.

During YouTube’s “adpocalypse” in 2017, when advertisers pulled their dollars from the platform over fears of unsafe content, LGBTQ creators spoke about having videos demonetized for saying the word “gay.” Some began using the word less or substituting others to keep their content monetized. More recently, users on TikTok have started to say “cornucopia” rather than “homophobia,” or say they’re members of the “leg booty” community to signify that they’re LGBTQ. Sean Szolek-VanValkenburgh, a TikTok creator with over 1.2 million followers, nails it:

“There’s a line we have to toe, it’s an unending battle of saying something and trying to get the message across without directly saying it. It disproportionately affects the LGBTQIA community and the BIPOC community because we’re the people creating that verbiage and coming up with the colloquiums.”

And as I noted last week in my piece about the U.S. Supreme Court overturning Roe v. Wade, conversations about women’s health, pregnancy and menstrual cycles on TikTok are also consistently down-ranked. Anja Health, a start-up offering umbilical cord blood banking, replaces the words for “sex,” “period” and “vagina” with other words or spells them with symbols in the captions. Many users say “nip nops” rather than “nipples”. Many companies have stated that they feel they need a disclaimer, because these weirdly spelled words in their captions make them look unprofessional, especially for content that’s supposed to be serious and medically inclined.

Because algorithms online will often flag content mentioning certain words, devoid of context, some users avoid uttering them altogether, simply because they have alternate meanings. The most absurd? You have to say ‘saltines’ when you’re literally talking about crackers now. Several social media platforms consider the word “cracker” a slur. Several platforms have even gone so far as to remove certain emotes because people were using them to communicate certain words.

The reality is that tech companies have been using automated tools to moderate content for a really long time, and while it’s touted as sophisticated machine learning, it’s often just a list of words they think are problematic. The Linguistic Anthropology Department at UCLA in the U.S. will publish a study this fall. One part (I saw a draft) is a presentation about language on TikTok. It outlines how, as users self-censored words in the captions of TikToks, new algospeak code words emerged. For instance, TikTok users now use the phrase “le dollar bean” instead of “lesbian” because it’s the way TikTok’s text-to-speech feature pronounces “Le$bian,” a censored way of writing “lesbian” that users believe will evade content moderation.
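To make the “list of words” point concrete, here is a minimal sketch in Python of what such a filter might look like. It is my own illustration, not any platform’s actual code: the `BLOCKLIST` contents and the `flagged_words` function are hypothetical, and real systems are certainly more elaborate. But it shows both failure modes at once: algospeak sails through, while an innocent sentence about soup gets flagged.

```python
import re

# Hypothetical word list, for illustration only; real platform lists are not public.
BLOCKLIST = {"kill", "porn", "cracker"}

def flagged_words(text: str) -> set[str]:
    """Return blocklisted words that appear as whole words in the text."""
    # Split into lowercase alphabetic tokens; symbols such as "*" break a word apart.
    words = re.findall(r"[a-z]+", text.lower())
    return BLOCKLIST.intersection(words)

print(flagged_words("He threatened to kill his enemy"))   # the literal word is caught
print(flagged_words("The bad guy unalived his enemy"))    # the code word is not
print(flagged_words("I ate a cracker with my soup"))      # harmless context, still flagged
```

The filter has no notion of context. It only knows whether a string on its list appears, which is why “p*rn” or “unalived” passes and a post about saltines does not.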

But we’re back in the game of whack-a-mole. Trying to stomp out specific words on platforms is a fool’s errand. The people using platforms to organize real harm are pretty good at figuring out how to get around these systems. And it leads to collateral damage of literal speech. Attempting to regulate human speech at a scale of billions of people in dozens of different languages and trying to contend with things such as humor, sarcasm, local context and slang can’t be done by simply down-ranking certain words.
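The whack-a-mole dynamic can be sketched the same way. Suppose a platform responds to symbol swaps by “normalizing” text before checking it. The code below is again a hypothetical illustration in Python (the `SUBSTITUTIONS` table, `normalize` and `is_flagged` are my own assumptions, not a documented technique of any platform). It catches “Le$bian” but is immediately defeated by the next invention, “le dollar bean”.

```python
import re

# Hypothetical blocklist and substitution table, for illustration only.
BLOCKLIST = {"lesbian", "kill"}
SUBSTITUTIONS = str.maketrans({"$": "s", "1": "i", "3": "e", "0": "o", "@": "a"})

def normalize(text: str) -> str:
    """Lowercase the text and undo common symbol-for-letter swaps."""
    return text.lower().translate(SUBSTITUTIONS)

def is_flagged(text: str) -> bool:
    """Return True if any blocklisted word appears after normalization."""
    words = re.findall(r"[a-z]+", normalize(text))
    return any(word in BLOCKLIST for word in words)

print(is_flagged("Proud Le$bian creator"))          # the symbol swap is undone and caught
print(is_flagged("proud le dollar bean creator"))   # the new code phrase slips through
```

Each patch to the filter only covers the tricks already known. The users, who invent new ones daily, are always a move ahead.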

Because in the end, we’ll end up creating new ways of speaking to avoid this kind of moderation. Then we end up embracing some of these words and they become common vernacular – all born out of this effort to resist moderation.

Bottom line? Aggressive moderation is never going to be a real solution to the harms that we see from big tech companies’ business practices. That’s a task for policymakers and for building better things, better tools, better protocols and better platforms. Ultimately, you’ll never be able to sanitize the Internet.