
5 July 2022 – When you are the founder and head of a media company (ok, and allegedly “semi-retired”) that chronicles the exploits of three overlapping, entangled industries … advertising, cybersecurity, and TMT (technology-media-telecom) … you get invited to scores of wondrous events and demonstrations and meet hundreds of fascinating people. But you must do it that way because, as the late, great John le Carré often said, “A desk is a dangerous place from which to watch the world”.

Le Carré was correct. Much of the technology I read about or see at conferences I also force myself “to do”. Because writing about technology is not “technical writing.” It is about framing a concept, about creating a narrative. Technology affects people both positively and negatively. You need to provide perspective.

Of course, you will realize straight away that if you are not careful you can find yourself going through a mental miasma with all the overwhelming tech out there.

My days riding the conference circuit are over, save some keynote speeches I’ll give later this year and my attendance at this summer’s Mykonos, Greece unconference, which has been revived after a long hiatus. But I continue to stay current on the transformational technologies that will take us into the next decade and maybe beyond.

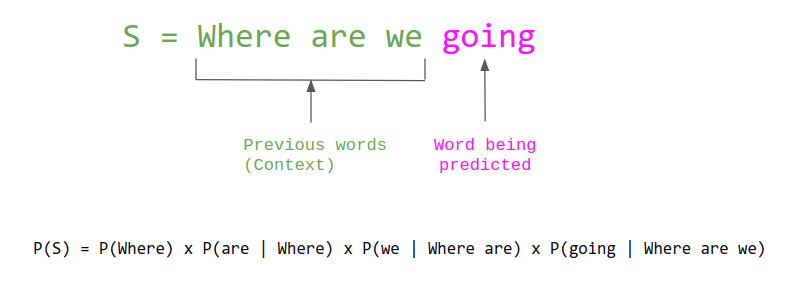

Last year I wrote a number of posts on GPT-3 (Generative Pre-trained Transformer 3), an autoregressive language model that uses deep learning to produce human-like text. It is the third-generation language prediction model in the GPT-n series (the successor to the much-written-about GPT-2), which was created by OpenAI, a San Francisco-based artificial intelligence research laboratory. It is part of a trend in natural language processing (NLP) toward large language models built on pre-trained language representations.

At its core, a large language model is an extremely sophisticated text predictor. A human gives it a chunk of text as input, and the model generates its best guess as to what the next chunk of text should be. It can then repeat this process – taking the original input together with the newly generated chunk, treating that as a new input, and generating a subsequent chunk – until it reaches a length limit.

How does the model go about generating these predictions? It has ingested a vast swath of the text available on the Internet. The output it generates is language that it calculates to be a statistically plausible response to the input it is given, based on what humans have previously published online.
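For readers who like to see the idea in miniature, here is a rough sketch of that predict-and-repeat loop. It is a toy and not how GPT-3 works internally: GPT-3 is a neural network operating on tokens, while this sketch simply counts which word follows which in a tiny sample text. The names `train_bigram_model`, `generate`, and `TOY_CORPUS` are my own illustrative inventions.

```python
from collections import Counter, defaultdict

# Hypothetical stand-in for "the text the model has ingested".
TOY_CORPUS = "to be or not to be that is the question"


def train_bigram_model(corpus: str) -> dict:
    """Count, for every word in the corpus, which words follow it."""
    words = corpus.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def generate(model: dict, prompt: str, max_words: int = 8) -> str:
    """Extend the prompt one word at a time until the length limit.

    Each step treats everything generated so far as the new input and
    appends the statistically most frequent follower of the last word.
    """
    words = prompt.lower().split()
    while len(words) < max_words:
        followers = model.get(words[-1])
        if not followers:
            # The last word never appeared mid-corpus, so there is no guess.
            break
        next_word = followers.most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


model = train_bigram_model(TOY_CORPUS)
print(generate(model, "not", max_words=3))
```

A real large language model replaces the word counts with billions of learned parameters and looks at the whole preceding text rather than only the last word, but the outer loop (predict, append, repeat until a length limit) is the same shape.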

And so amazingly rich and nuanced insights can be extracted from the patterns latent in massive datasets, far beyond what the human mind can recognize on its own. This is the basic premise of modern machine learning. For instance, having initially trained on a dataset of half a trillion words, GPT-3 is able to identify and dazzlingly riff on the linguistic patterns contained therein. For my detailed piece on GPT-3 click here. GPT-4 is expected to be out by 2023.

This (quite logically) led to DALL·E 2, which is a new AI system that can create realistic images and art from a description in natural language. Note: the name comes from merging the names of the Pixar robot character WALL-E and artist Salvador Dalí.

DALL-E 2 has created a sensation. As I noted in a short monograph last year, its creative ability is a wonder. A good friend, Casey Newton, explains that “creative revolution” in this article.

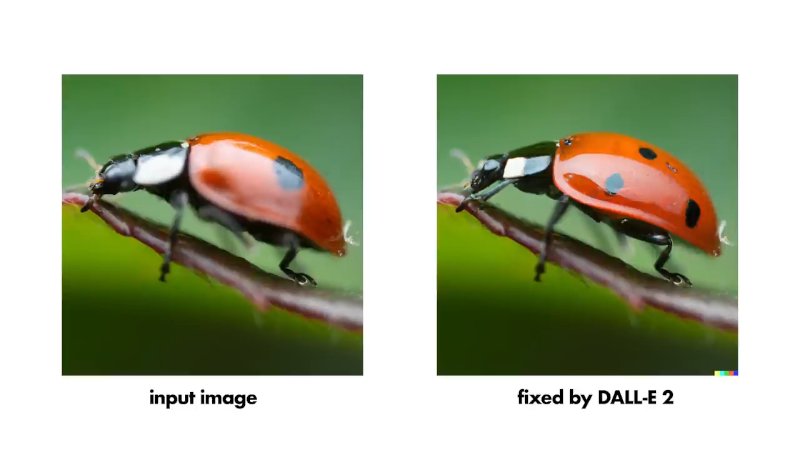

But as a number of my colleagues have noted, I don’t fully understand the appeal of asking an AI for a picture of a gorilla eating a waffle while wearing headphones. Nicholas Sherlock thought of something better: he fed the AI a picture of a fuzzy ladybug and asked it to bring the subject into focus. It did. See the photo which leads this post. He also fed in a lot of other photos he shot and asked it to make subtle variations of them. It did a pretty good job on those, too.

Micael Widell (a fellow photographer whom I follow) shows more of that in a recent video that might be the best use of DALL-E 2 I’ve seen yet. The part about DALL-E 2 editing starts at about the 4:45 mark.

As Micael points out, right now the results are not perfect, but they are really not bad. And DALL-E 3 is in the works.

And we might not totally agree with Micael’s thesis that photography will be dead when you can just ask the AI to mangle your smartphone pictures or just ask it to create whatever picture you want out of whole cloth. Sure, it will change things. But people still ride horses. People even still take black and white photos on film even though we don’t have to anymore.

Of course, if you made your living making horseshoes or selling black and white film, you probably had to adapt to changing times. Some did and — of course — some didn’t. But this is probably no different than that. It may be that “unaugmented” photography becomes more of a niche. On the other hand, certified unaugmented photos might become more valuable as art in the same way that a handcrafted vase is worth more than one pushed off a factory line. Who knows.