It boggles the mind that so many people in the legal industry and media creation industry are talking about AI and LLM “ethics” (as if such a thing exists), and about “bad” training sets with biases and inaccuracies. These systems produce “fluffy content”, incorrect answers, false information and skewed output – leading to poor human decisions made in reliance on biased output.

It all pales into insignificance when things like Lavender have been unleashed into the world.

War ruled by deliberate, murderous algorithmic bias.

6 April 2024 – The story exploded across the internet this past week. No, not the murder of 7 World Central Kitchen workers whom the Israel Defense Forces killed in an air strike in Gaza. The battle over the provision of essential humanitarian aid, already so brutal over the past few months, will become increasingly central to the conflict. For all the pacifying rhetoric from the U.S., there is no reason to think that the war’s humanitarian phase will be any less destructive than the direct military violence Palestinians in Gaza have faced so far. American “concerns” amount to nothing more than political theater – but the concerns are very real for the rest of the world watching on in horror. But that story needs a more nuanced treatment which I will delay until later this week.

My story today is the bigger story of the past week – the Israeli military’s bombing campaign in Gaza using a previously undisclosed AI-powered database that at one stage identified 37,000 potential targets based on their apparent links to Hamas, according to intelligence sources involved in the war. In addition to talking about their use of the AI system, called Lavender, the intelligence sources claim that Israeli military officials permitted large numbers of Palestinian civilians to be killed, particularly during the early weeks and months of the conflict, using a “calculus” of how many deaths were “allowable”.

Lavender was developed by the Israel Defense Forces’ elite intelligence division, Unit 8200, which is comparable to the U.S. National Security Agency or GCHQ in the UK.

At no point did Israeli intelligence officers question the AI’s accuracy, or approach, or the legal or moral justification for Israel’s bombing strategy – until now, when its use has been revealed.

At no point were details provided about the specific kinds of data used to train Lavender’s algorithm, or how the program reached its conclusions. Sources said that during the first few weeks of the war, Unit 8200 refined Lavender’s algorithm and “tweaked” its search parameters to increase the number of locations to bomb.

This report on Lavender was produced by +972 Magazine, a left-wing news and opinion online magazine, established in August 2010 by a group of four Israeli writers in Tel Aviv. The report’s accuracy was verified by numerous current and former Israeli intelligence officers. It is an unusually candid testimony that provides a rare glimpse into the first-hand experiences of Israeli intelligence officials who have been using machine-learning systems to help identify targets during the six-month war.

The report is free to read and I have a link below. I could screenshot hundreds of paragraphs from the report, but there are just too many, so you need to read it. But here are a few points from the report … I avoided saying “key points” because every damn sentence in the report seems “key” … and a few comments from others who read the report, plus just a few selected screenshots:

– The testimonies published by +972 may explain how a western military with such advanced capabilities, with weapons that can conduct highly surgical strikes, has conducted a war with such a vast human toll.

– Israel’s use of powerful AI systems in its war on Hamas has entered uncharted territory for advanced warfare, raising a host of legal and moral questions, and transforming the relationship between military personnel and machines.

– “This is unparalleled, in my memory,” said one intelligence officer who used Lavender, adding that they had more faith in a “statistical mechanism” than a grieving soldier. “Everyone there, including me, lost people on October 7. The machine did it coldly. And that made it easier”.

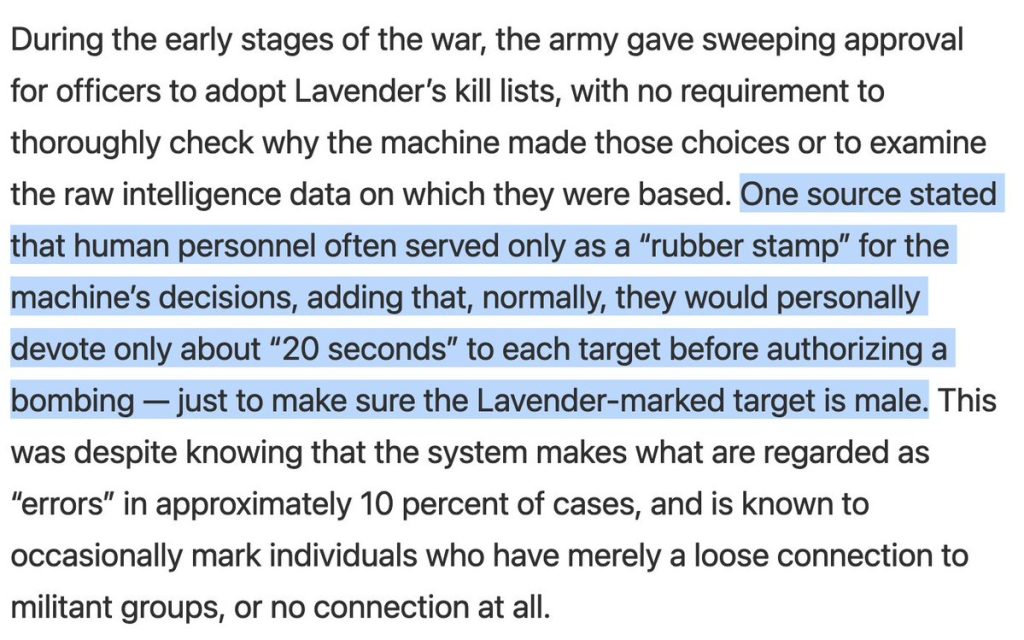

– Another Lavender user questioned whether humans’ role in the selection process was meaningful. “I would invest 20 seconds for each target at this stage, and do dozens of them every day. I had zero added-value as a human, apart from being a stamp of approval. It saved a lot of time”.

– All six of the intelligence officers interviewed by +972 Magazine said that Lavender had played a central role in the war, processing masses of data to rapidly identify potential “junior” operatives to target. Four of the sources said that, at one stage early in the war, Lavender listed as many as 37,000 Palestinian men who had been linked by the AI system to Hamas or PIJ, exceeding the known numbers.

– Several of the sources described how, for certain categories of targets, the IDF applied pre-authorised allowances for the estimated number of civilians who could be killed before a strike was authorized.

– Two sources said that during the early weeks of the war they were permitted to kill 15 or 20 civilians during airstrikes on low-ranking militants. Attacks on such targets were typically carried out using unguided munitions known as “dumb bombs”, the sources said, destroying entire homes and killing all their occupants.

– One intelligence officer said “You don’t want to waste expensive bombs on unimportant people – it’s very expensive for the country and there’s a shortage of those bombs”.

– Another said the principal question they were faced with was whether the “collateral damage” to civilians allowed for an attack. “Because we usually carried out the attacks with dumb bombs, and that meant literally dropping the whole house on its occupants. But even if an attack is averted, you don’t care – you immediately move on to the next target. Because of the system, the targets never end. You have another 36,000 waiting”.

– According to conflict experts quizzed on the Israeli attack plan, if Israel has been using dumb bombs to flatten the homes of thousands of Palestinians who were linked, with the assistance of AI, to militant groups in Gaza, that could help explain the shockingly high death toll in the war.

– The health ministry in the Hamas-run territory says 33,000 Palestinians have been killed in the conflict in the past six months. UN data shows that in the first month of the war alone, 1,340 families suffered multiple losses, with 312 families losing more than 10 members.

– Responding to the publication of the testimonies in +972 Magazine, the IDF said in a statement that its operations were carried out in accordance with the rules of proportionality under international law. It said dumb bombs are “standard weaponry” that are used by IDF pilots in a manner that ensures “a high level of precision”. The statement described Lavender as a database used “to cross-reference intelligence sources, in order to produce up-to-date layers of information on the military operatives of terrorist organisations. This is not a list of confirmed military operatives eligible to attack”.

– In earlier military operations conducted by the IDF, producing human targets was often a more labor-intensive process. Multiple sources who described the Lavender system said that the decision to “incriminate” an individual, or identify them as a legitimate target, would be discussed and then signed off by a legal adviser.

– In the weeks and months after 7 October, this model for approving strikes on human targets was dramatically “tweaked” and accelerated, according to the sources. As the IDF’s bombardment of Gaza intensified, they said, commanders demanded a continuous pipeline of targets. Said one intelligence officer “We were constantly being pressured: ‘Bring us more targets’. They really shouted at us. We were told: now we have to fuck up Hamas, no matter what the cost. Whatever you can, you bomb. Simple”.

– To meet this demand, the IDF came to rely heavily on Lavender to generate a database of individuals judged to have the characteristics of a PIJ or Hamas militant.

– The only internal investigation of the Lavender system, based on randomly sampling and cross-checking its predictions, showed Lavender had achieved a 90% accuracy rate, leading the IDF to approve its sweeping use as a target recommendation tool. But how this accuracy rating was achieved was never revealed.

– Lavender created a database of tens of thousands of individuals who were marked as predominantly low-ranking members of Hamas’s military wing, they added. This was used alongside another AI-based decision support system, called the Gospel, which recommended buildings and structures as targets rather than individuals. The accounts include first-hand testimony of how intelligence officers worked with Lavender and how the reach of its dragnet could be “adjusted”. At its peak, the system managed to generate 37,000 people as potential human targets. But the numbers changed all the time, because they depended on where the bar was set for what counts as a Hamas operative. The definition became “fluid”.

– Said one intelligence analyst: “There were times when a Hamas operative was defined more broadly, and then the machine started bringing us all kinds of civil defense personnel, police officers, on whom it would be a shame to waste bombs. They help the Hamas government, but they don’t really endanger soldiers”.

– In the weeks after the Hamas-led 7 October assault on southern Israel, in which Palestinian militants killed nearly 1,200 Israelis and kidnapped about 240 people, the sources said there was a decision to treat Palestinian men linked to Hamas’s military wing as potential targets, regardless of their rank or importance. The IDF’s targeting processes in the most intensive phase of the bombardment became more “relaxed”, said one source. There was “a completely permissive policy regarding the casualties of bombing operations, a policy so permissive that in my opinion it had simply become revenge”.

– Another source, who justified the use of Lavender to help identify low-ranking targets, said that “when it comes to a junior militant, you don’t want to invest manpower and time in it”. They said that in wartime there was insufficient time to carefully “incriminate every target”. He said “so you’re willing to take the margin of error of using artificial intelligence, risking collateral damage and civilians dying, and risking attacking by mistake, and you just live with it. Because it is just much easier to bomb a suspect’s family’s home”.

– This strategy risked higher numbers of civilian casualties, and the sources said the IDF imposed pre-authorized limits on the number of civilians it deemed acceptable to kill in a strike aimed at a single Hamas militant. The ratio was said to have changed over time, and varied according to the seniority of the target.

– According to the testimonies in the +972 Magazine report, the IDF judged it permissible to kill more than 100 civilians in attacks on top-ranking Hamas officials. Said one intelligence source “We had a calculation for how many [civilians could be killed] for the brigade commander, how many civilians for a battalion commander, and so on. It was a simple calculus”.

– One other said “Oh, there were regulations, but they were just very lenient. We’ve killed people with collateral damage in the high double digits, if not low triple digits. These are things that haven’t happened before. There were significant fluctuations in the figure that military commanders would tolerate at different stages of the war”.

– One source said that the limit on permitted civilian casualties “went up and down” over time, and at one point was as low as five. During the first week of the conflict, the source said, permission was given to kill 15 non-combatants to take out junior militants in Gaza. However, they said estimates of civilian casualties were imprecise, as it was not possible to know definitively how many people were in a building.

– Another intelligence officer said “It’s not just that you can kill any person who is a Hamas soldier, which is clearly permitted and legitimate in terms of international law. But they directly tell you: ‘You are allowed to kill them along with many civilians’. In practice, the proportionality criterion simply did not exist”.

– International law experts said they had never remotely heard of a one-to-15 ratio being deemed acceptable, especially for lower-level combatants. One said “We know there is a calculus out there, with a lot of leeway, but that strikes me as extreme”.

Just a few screen shots from the report:

You can read the full report by clicking here.

I spent the week reading the report, plus the tsunami of comment on the report from the wide range of AI experts and military experts sounding off.

I have written terabytes on the role AI is playing in more and more parts of our lives, both fascinating and scary. But in this case, it has the most dramatic moral and physical consequences. As shocked and upset as we all were after October 7th, now I am simply steaming with rage at how the situation in Gaza has been handled by the Israeli government. There are more and more actions that simply cannot be defended by the major goal, understood to be eliminating Hamas.

Which was an impossible goal as most military experts have noted. A long-time friend, a former Israeli military intelligence officer, told me that “eradicating Hamas and the other Palestinian militant factions was a hopeless task. We continue to suffer ambushes and other attacks around Gaza City, Central Gaza, and Khan Younis, the other major arenas of combat. Looking at the massive complex of tunnels that extend subterraneously throughout the Strip and serve as the infrastructure for guerilla attacks, holding territory is much less appealing to the Israelis than taking it, and provides significantly diminishing returns. There really is no plan”.

My view? I am told that major combat operations are “drawing to a close”. Of that, I am not so sure. For now, despite delays mainly around hostage negotiations that have advanced and retreated in fits and starts, the Israeli military remains committed to the long-anticipated invasion of Rafah, where the several battalions of Hamas combatants that have yet to see any combat are bunkered. The U.S. is intent “on military victory against Hamas” and no doubt will not stop the flow of weapons to Israel.

But even if major combat operations are drawing to a close, the Israelis and the Americans will remain far from achieving their political objectives, the most important of which are the pacification of Palestinian militancy and the imposition of a new governing authority through which to delegate colonial control.

So for me, to finish the job, my guess is they are rapidly converging on a comprehensive strategy that has been battle-tested over the course of a century of US-led counterinsurgency campaigns: undercut popular support for the resistance and “win hearts and minds” by seizing total control over the provision of basic necessities. In practice, this means the imposition of total siege conditions and the cultivation of starvation, disease, and other forms of deprivation. Thus, through the controlled entry of aid via the political actors they want to empower, Israel and the U.S. can play kingmakers.

But of course, that’s the cynic in me.

Now, as to AI and Lavender, the most important takeaway from this reporting (genuinely stunning, but extremely important) is that the value of military “AI” systems like this doesn’t lie in decision-making, but in the ability to use the sheen of computerized “intelligence” to justify the actions you already wanted.

As far as wartime rhetoric goes, “We killed thousands of innocent people because the quasi-mystical unexplainable hyper-intelligent artificial intelligence gave it a thumbs up” is a lot more defensible than “we killed thousands of people because we wanted to kill them”.

But as I noted last year in my long piece on AI/generative AI, the only important application was in its military use. Its commercial use is for childish games. Militarized “AI” would always be pitched as a way of augmenting human decision-making, but in an increasingly, even completely, opaque way – a post hoc rationale for killing. The real “explainability problem” in machine learning + lethality writ large.

And keep this passage in mind next time you read the phrase “human in the loop” used in a reassuring manner:

Bottom line? I imagine this will have a huge beneficial psychological implication for the military personnel involved – these machines generally can’t explain their “decisions,” but you’ve been told the system is “intelligent,” so it’s far easier to absolve yourself of responsibility for pressing that button.

Whether for its repressive capabilities, economic potential, or military advantage, AI supremacy will be a strategic objective of every government, every military, with the resources to compete. Across the entire geopolitical spectrum, the competition for AI supremacy will be fierce. And will be uncontrollable. There is a zero-sum dynamic at work. Few people are focusing on how all of this is upending the global power supply chains.