Implants are becoming more sophisticated — and are attracting commercial interest

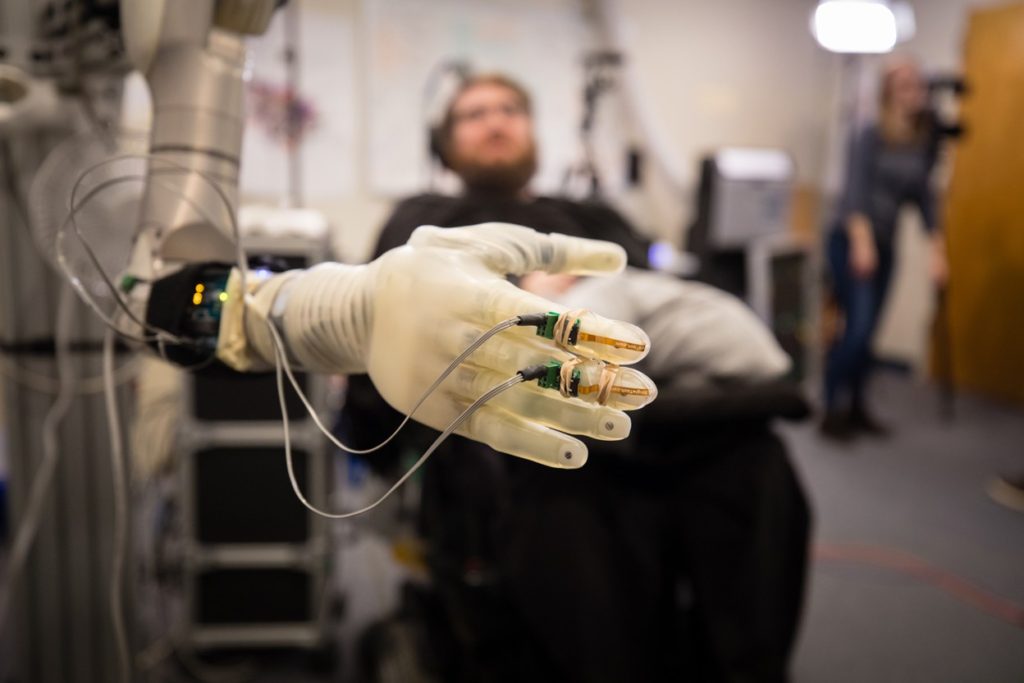

ABOVE: A person with paralysis controls a prosthetic arm using their brain activity

24 May 2022 – A large percentage of my blog subscribers – about 45% – are in software development and artificial intelligence. I have been promising this post on brain-reading devices, which is a mash-up of a series by Liam Drew (writer, neurobiologist) and his team at Nature Magazine. But then the Ukraine War started, I was distracted and … yada yada yada. I returned to the piece today and realised it might be of interest to my entire technology community.

James Johnson hopes to drive a car again one day. If he does, he will do it using only his thoughts.

In March 2017, Johnson broke his neck in a go-carting accident, leaving him almost completely paralysed below the shoulders. He understood his new reality better than most. For decades, he had been a carer for people with paralysis. “There was a deep depression,” he says. “I thought that when this happened to me there was nothing — nothing that I could do or give.”

But then Johnson’s rehabilitation team introduced him to researchers from the nearby California Institute of Technology (Caltech) in Pasadena, who invited him to join a clinical trial of a brain–computer interface (BCI). This would first entail neurosurgery to implant two grids of electrodes into his cortex. These electrodes would record neurons in his brain as they fire, and the researchers would use algorithms to decode his thoughts and intentions. The system would then use Johnson’s brain activity to operate computer applications or to move a prosthetic device. All told, it would take years and require hundreds of intensive training sessions. “I really didn’t hesitate,” says Johnson.

The first time he used his BCI, implanted in November 2018, Johnson moved a cursor around a computer screen. “It felt like The Matrix,” he says. “We hooked up to the computer, and lo and behold I was able to move the cursor just by thinking.”

Johnson has since used the BCI to control a robotic arm, use Photoshop software, play ‘shoot-’em-up’ video games, and now to drive a simulated car through a virtual environment, changing speed, steering and reacting to hazards. “I am always stunned at what we are able to do,” he says, “and it’s frigging awesome.”

Johnson is one of an estimated 35 people who have had a BCI implanted long-term in their brain. Only around a dozen laboratories conduct such research, but that number is growing. And in the past five years, the range of skills these devices can restore has expanded enormously. Last year alone, scientists described a study participant using a robotic arm that could send sensory feedback directly to his brain; a prosthetic speech device for someone left unable to speak by a stroke; and a person able to communicate at record speeds by imagining himself handwriting.

ABOVE: James Johnson uses his neural interface to create art by blending images

So far, the vast majority of implants for recording long-term from individual neurons have been made by a single company: Blackrock Neurotech, a medical-device developer based in Salt Lake City, Utah. But in the past seven years, commercial interest in BCIs has surged. Most notably, in 2016, entrepreneur Elon Musk launched Neuralink in San Francisco, California, with the goal of connecting humans and computers. The company has raised US$363 million. Last year, Blackrock Neurotech and several other newer BCI companies also attracted major financial backing.

Bringing a BCI to market will, however, entail transforming a bespoke technology, “road-tested” in only a small number of people, into a product that can be manufactured, implanted and used at scale. Large trials will need to show that BCIs can work in non-research settings and demonstrably improve the everyday lives of users — at prices that the market can support. The timeline for achieving all this is uncertain, but the field is bullish. “For thousands of years, we have been looking for some way to heal people who have paralysis,” says Matt Angle, founding chief executive of Paradromics, a neurotechnology company in Austin, Texas. “Now we’re actually on the cusp of having technologies that we can leverage for those things.”

Interface evolution

In June 2004, researchers pressed a grid of electrodes into the motor cortex of a man who had been paralysed by a stabbing. He was the first person to receive a long-term BCI implant. Like most people who have received BCIs since, his cognition was intact. He could imagine moving, but he had lost the neural pathways between his motor cortex and his muscles. After decades of work with monkeys in many labs, researchers had learnt to decode the animals’ movements from real-time recordings of activity in the motor cortex. They now hoped to infer a person’s imagined movements from brain activity in the same region.

In 2006, a landmark paper described how the man had learnt to move a cursor around a computer screen, control a television and use robotic arms and hands just by thinking. The study was co-led by Leigh Hochberg, a neuroscientist and critical-care neurologist at Brown University in Providence, Rhode Island, and at Massachusetts General Hospital in Boston. It was the first of a multicentre suite of trials called BrainGate, which continues today.

In an incredible video from the BrainGate clinical trial, three participants with paralysis control a tablet computer just by thinking about it, thanks to a tiny brain implant.

“It was a very simple, rudimentary demonstration,” Hochberg says. “The movements were slow or imprecise — or both. But it demonstrated that it might be possible to record from the cortex of somebody who was unable to move and to allow that person to control an external device.”

Today’s BCI users have much finer control and access to a wider range of skills. In part, this is because researchers began to implant multiple BCIs in different brain areas of the user and devised new ways to identify useful signals. But Hochberg says the biggest boost has come from machine learning, which has improved the ability to decode neural activity. Rather than trying to understand what activity patterns mean, machine learning simply identifies and links patterns to a user’s intention.

“We have neural information; we know what that person who is generating the neural data is attempting to do; and we’re asking the algorithms to create a map between the two,” says Hochberg. “That turns out to be a remarkably powerful technique.”
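For readers in software, the mapping Hochberg describes is, at its core, supervised learning: recorded activity paired with a known intended action. Below is a minimal sketch in Python, assuming a toy nearest-centroid classifier and invented firing rates for three hypothetical electrode channels. Real BCI decoders are far more elaborate, and nothing here is taken from the BrainGate software.

```python
from math import dist
from statistics import mean


def fit_centroids(samples):
    """Average the firing-rate vectors recorded for each intended action.

    samples: list of (firing_rates, intention) pairs, where firing_rates is a
    list of spikes-per-second values, one per (hypothetical) channel.
    """
    grouped = {}
    for rates, intention in samples:
        grouped.setdefault(intention, []).append(rates)
    # zip(*vectors) regroups the values channel by channel.
    return {
        intention: [mean(channel) for channel in zip(*vectors)]
        for intention, vectors in grouped.items()
    }


def decode(centroids, rates):
    """Return the intention whose average pattern is closest to `rates`."""
    return min(centroids, key=lambda intention: dist(centroids[intention], rates))


# Invented calibration data: the participant is cued to attempt a movement
# while activity is recorded, so the label for each sample is known.
training = [
    ([30.0, 5.0, 10.0], "left"),
    ([28.0, 7.0, 12.0], "left"),
    ([6.0, 32.0, 11.0], "right"),
    ([4.0, 29.0, 9.0], "right"),
]

centroids = fit_centroids(training)
print(decode(centroids, [27.0, 8.0, 10.0]))  # prints "left"
```

The point of the sketch is that the code never needs to know why a pattern means "left". It only needs labelled examples, which is the property Hochberg highlights.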

Motor independence

Asked what they want from assistive neurotechnology, people with paralysis most often answer “independence”. For people who are unable to move their limbs, this typically means restoring movement.

One approach is to implant electrodes that directly stimulate the muscles of a person’s own limbs and have the BCI directly control these. “If you can capture the native cortical signals related to controlling hand movements, you can essentially bypass the spinal-cord injury to go directly from brain to periphery,” says Bolu Ajiboye, a neuroscientist at Case Western Reserve University in Cleveland, Ohio.

In 2017, Ajiboye and his colleagues described a participant who used this system to perform complex arm movements, including drinking a cup of coffee and feeding himself. “When he first started the study,” Ajiboye says, “he had to think very hard about his arm moving from point A to point B. But as he gained more training, he could just think about moving his arm and it would move.” The participant also regained a sense of ownership of the arm.

Ajiboye is now expanding the repertoire of command signals his system can decode, such as those for grip force. He also wants to give BCI users a sense of touch, a goal being pursued by several labs.

In 2015, a team led by neuroscientist Robert Gaunt at the University of Pittsburgh in Pennsylvania, reported implanting an electrode array in the hand region of a person’s somatosensory cortex, where touch information is processed. When they used the electrodes to stimulate neurons, the person felt something akin to being touched.

Gaunt then joined forces with Pittsburgh colleague Jennifer Collinger, a neuroscientist advancing the control of robotic arms by BCIs. Together, they fashioned a robotic arm with pressure sensors embedded in its fingertips, which fed into electrodes implanted in the somatosensory cortex to evoke a synthetic sense of touch. It was not an entirely natural feeling: sometimes it felt like pressure or being prodded, other times it was more like a buzzing, Gaunt explains. Nevertheless, tactile feedback made the prosthetic feel much more natural to use, and the time it took to pick up an object was halved, from roughly 20 seconds to 10.

Implanting arrays into brain regions that have different roles can add nuance to movement in other ways. Neuroscientist Richard Andersen — who is leading the trial at Caltech in which Johnson is participating — is trying to decode users’ more-abstract goals by tapping into the posterior parietal cortex (PPC), which forms the intention or plan to move. That is, it might encode the thought ‘I want a drink’, whereas the motor cortex directs the hand to the coffee, then brings the coffee to the mouth.

Andersen’s group is exploring how this dual input aids BCI performance, contrasting use of the two cortical regions alone or together. Unpublished results show that Johnson’s intentions can be decoded more quickly in the PPC, “consistent with encoding the goal of the movement”, says Tyson Aflalo, a senior researcher in Andersen’s laboratory. Motor-cortex activity, by contrast, lasts throughout the whole movement, he says, “making the trajectory less jittery”.

This new type of neural input is helping Johnson and others to expand what they can do. Johnson uses the driving simulator, and another participant can play a virtual piano using her BCI.

Movement into meaning

“One of the most devastating outcomes related to brain injuries is the loss of ability to communicate,” says Edward Chang, a neurosurgeon and neuroscientist at the University of California, San Francisco. In early BCI work, participants could move a cursor around a computer screen by imagining their hand moving, and then imagining grasping to ‘click’ letters — offering a way to achieve communication. But more recently, Chang and others have made rapid progress by targeting movements that people naturally use to express themselves.

The benchmark for communication by cursor control — roughly 40 characters per minute — was set in 2017 by a team led by Krishna Shenoy, a neuroscientist at Stanford University in California.

Then, last year, this group reported an approach that enabled study participant Dennis Degray, who can speak but is paralysed from the neck down, to double the pace.

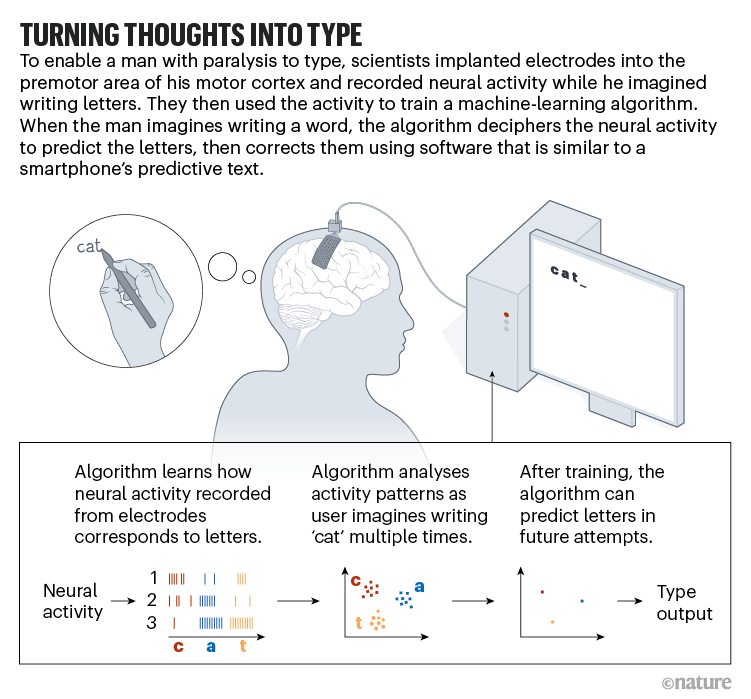

Shenoy’s colleague Frank Willett suggested to Degray that he imagine handwriting while they recorded from his motor cortex (see ‘Turning thoughts into type’). The system sometimes struggled to parse signals relating to letters that are handwritten in a similar way, such as r, n and h, but generally it could easily distinguish the letters. The decoding algorithms were 95% accurate at baseline, but when autocorrected using statistical language models that are similar to predictive text in smartphones, this jumped to 99%.
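The autocorrection step can be pictured as combining two sources of evidence: how confident the decoder is in each letter, and how plausible the resulting word is. Here is a toy sketch, assuming a hypothetical three-word vocabulary with invented word frequencies and invented per-letter probabilities. The study's actual language model is not described here, so treat every number as a placeholder.

```python
from math import log

# Hypothetical word prior: invented relative frequencies, not real corpus data.
WORD_PRIOR = {"not": 0.80, "hot": 0.15, "rot": 0.05}


def word_score(word, letter_probs):
    """Log-probability of `word`: decoder evidence plus language prior.

    letter_probs: one dict per written character, mapping candidate letters
    to the decoder's probability for that character.
    """
    score = log(WORD_PRIOR[word])
    for letter, probs in zip(word, letter_probs):
        # A small floor keeps log() defined for letters the decoder ruled out.
        score += log(probs.get(letter, 1e-6))
    return score


def autocorrect(letter_probs):
    """Pick the vocabulary word that best explains the decoded letters."""
    candidates = [w for w in WORD_PRIOR if len(w) == len(letter_probs)]
    return max(candidates, key=lambda w: word_score(w, letter_probs))


# Invented decoder output: the first character is ambiguous between the
# similarly written letters r, n and h; the other two are clear.
decoded = [
    {"r": 0.40, "n": 0.35, "h": 0.25},
    {"o": 0.98, "a": 0.02},
    {"t": 0.97, "l": 0.03},
]

raw = "".join(max(p, key=p.get) for p in decoded)
print(raw)                   # prints "rot", the letter-by-letter guess
print(autocorrect(decoded))  # prints "not", once word frequency is weighed in
```

Going from 95% to 99% accuracy may sound modest, but it means errors fall from roughly 1 character in 20 to 1 in 100.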

“You can decode really rapid, very fine movements,” says Shenoy, “and you’re able to do that at 90 characters per minute.”

Degray has had a functional BCI in his brain for nearly 6 years, and is a veteran of 18 studies by Shenoy’s group. He says it’s remarkable how effortless tasks become. He likens the process to learning to swim, saying, “You thrash around a lot at first, but all of a sudden, everything becomes understandable.”

Chang’s approach to restoring communication focuses on speaking rather than writing, albeit using a similar principle. Just as writing is formed of distinct letters, speech is formed of discrete units called phonemes, or individual sounds. There are around 50 phonemes in English, and each is created by a stereotyped movement of the vocal tract, tongue and lips.

Chang’s group first worked on characterizing the part of the brain that generates phonemes and, thereby, speech — an ill-defined region called the dorsal laryngeal cortex. Then, the researchers applied these insights to create a speech-decoding system that displayed the user’s intended speech as text on a screen. Last year, they reported that this device enabled a person left unable to talk by a brainstem stroke to communicate, using a preselected vocabulary of 50 words and at a rate of 15 words per minute. “The most important thing that we’ve learnt,” Chang says, “is that it’s no longer a theoretical; it’s truly possible to decode full words.”

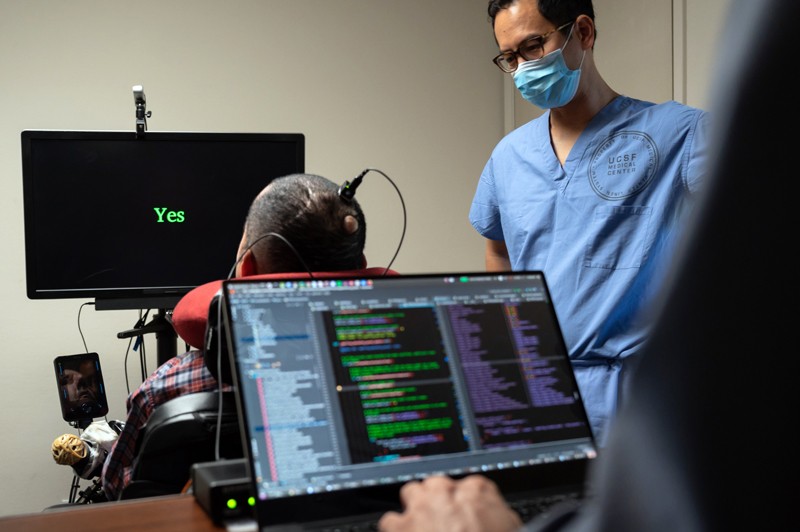

ABOVE: Neuroscientist Edward Chang at the University of California, San Francisco, helps a man with paralysis to speak through a brain implant that connects to a computer.

Unlike other high-profile BCI breakthroughs, Chang didn’t record from single neurons. Instead, he used electrodes placed on the cortical surface that detect the averaged activity of neuronal populations. The signals are not as fine-grained as those from electrodes implanted in the cortex, but the approach is less invasive.

The most profound loss of communication occurs in people in a completely locked-in state, who remain conscious but are unable to speak or move. In March, a team including neuroscientist Ujwal Chaudhary and others at the University of Tübingen, Germany, reported restarting communication with a man who has amyotrophic lateral sclerosis (ALS, or motor neuron disease). The man had previously relied on eye movements to communicate, but he gradually lost the ability to move his eyes.

The team of researchers gained consent from the man’s family to implant a BCI and tried asking him to imagine movements to use his brain activity to choose letters on a screen. When this failed, they tried playing a sound that mimicked the man’s brain activity — a higher tone for more activity, lower for less — and taught him to modulate his neural activity to heighten the pitch of a tone to signal ‘yes’ and to lower it for ‘no’. That arrangement allowed him to pick out a letter every minute or so.
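In software terms, this feedback loop amounts to mapping a measure of neural activity onto pitch and reading a sustained high or low tone as an answer. Here is a rough sketch, assuming an invented firing-rate range, tone range and hold rule. None of these numbers come from the Tübingen study.

```python
# All constants below are illustrative assumptions, not values from the study.
RATE_MIN, RATE_MAX = 0.0, 50.0      # firing-rate range, spikes per second
TONE_MIN, TONE_MAX = 200.0, 600.0   # feedback tone range, hertz
YES_ABOVE, NO_BELOW = 500.0, 300.0  # pitch thresholds for an answer
HOLD_SAMPLES = 3                    # consecutive samples needed to answer


def rate_to_tone(rate):
    """Map a firing rate linearly onto pitch: more activity, higher tone."""
    clamped = min(max(rate, RATE_MIN), RATE_MAX)
    fraction = (clamped - RATE_MIN) / (RATE_MAX - RATE_MIN)
    return TONE_MIN + fraction * (TONE_MAX - TONE_MIN)


def read_answer(rates):
    """Return 'yes' or 'no' once the tone is held high or low, else None."""
    high_run = low_run = 0
    for rate in rates:
        tone = rate_to_tone(rate)
        high_run = high_run + 1 if tone >= YES_ABOVE else 0
        low_run = low_run + 1 if tone <= NO_BELOW else 0
        if high_run >= HOLD_SAMPLES:
            return "yes"
        if low_run >= HOLD_SAMPLES:
            return "no"
    return None


print(rate_to_tone(25.0))                     # prints 400.0
print(read_answer([20.0, 40.0, 42.0, 45.0]))  # prints yes
print(read_answer([30.0, 10.0, 8.0, 5.0]))    # prints no
```

Requiring the tone to be held for several samples guards against a momentary fluctuation being read as an answer. It also helps explain why a speller built on such yes/no answers is slow, in line with the rate of roughly one letter per minute reported above.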

The method differs from that in a paper published in 2017, in which Chaudhary and others used a non-invasive technique to read brain activity. Questions were raised about the work and the paper was retracted, but Chaudhary stands by it.

These case studies suggest that the field is maturing rapidly, says Amy Orsborn, who researches BCIs in non-human primates at the University of Washington in Seattle. “There’s been a noticeable uptick in both the number of clinical studies and of the leaps that they’re making in the clinical space,” she says. “What comes along with that is the industrial interest”.

Lab to market

Although such achievements have attracted a flurry of attention from the media and investors, the field remains a long way from improving day-to-day life for people who’ve lost the ability to move or speak. Currently, study participants operate BCIs in brief, intensive sessions; nearly all must be physically wired to a bank of computers and supervised by a team of scientists working constantly to hone and recalibrate the decoders and associated software. “What I want,” says Hochberg, speaking as a critical-care neurologist, “is a device that is available, that can be prescribed, that is ‘off the shelf’ and can be used quickly.” In addition, such devices would ideally last users a lifetime.

Many leading academics are now collaborating with companies to develop marketable devices. Chaudhary, by contrast, has co-founded a not-for-profit company, ALS Voice, in Tübingen, to develop neurotechnologies for people in a completely locked-in state.

Blackrock Neurotech’s existing devices have been a mainstay of clinical research for 18 years, and it wants to market a BCI system within a year, according to chairman Florian Solzbacher. The company came a step closer last November, when the US Food and Drug Administration (FDA), which regulates medical devices, put the company’s products onto a fast-track review process to facilitate developing them commercially.

This possible first product would use four implanted arrays and connect through wires to a miniaturized device, which Solzbacher hopes will show how people’s lives can be improved. “We’re not talking about a 5, 10 or 30% improvement in efficacy,” he says. “People can do something they just couldn’t before.”

Blackrock Neurotech is also developing a fully implantable wireless BCI intended to be easier to use and to remove the need to have a port in the user’s cranium. Neuralink and Paradromics have aimed to have these features from the outset in the devices they are developing.

These two companies are also aiming to boost signal bandwidth, which should improve device performance, by increasing the number of recorded neurons. Paradromics’s interface — currently being tested in sheep — has 1,600 channels, divided between 4 modules.

Neuralink’s system uses very fine, flexible electrodes, called threads, that are designed both to bend with the brain and to reduce immune reactions, says Shenoy, who is a consultant and adviser to the company. The aim is to make the device more durable and recordings more stable. Neuralink has not published any peer-reviewed papers, but a 2021 blogpost reported the successful implantation of threads in a monkey’s brain to record at 1,024 sites. Academics would like to see the technology published for full scrutiny, and Neuralink has so far trialled its system only in animals. But, Ajiboye says, “if what they’re claiming is true, it’s a game-changer”.

Just one other company besides Blackrock Neurotech has implanted a BCI long-term in humans — and it might prove an easier sell than other arrays. Synchron in New York City has developed a ‘stentrode’ — a set of 16 electrodes fashioned around a blood-vessel stent. Fitted in a day in an outpatient setting, this device is threaded through the jugular vein to a vein on top of the motor cortex. First implanted in a person with ALS in August 2019, the technology was put on a fast-track review path by the FDA a year later.

ABOVE: The ‘stentrode’ interface can translate brain signals from the inside of a blood vessel without the need for open-brain surgery

Akin to the electrodes Chang uses, the stentrode lacks the resolution of other implants, so can’t be used to control complex prosthetics. But it allows people who cannot move or speak to control a cursor on a computer tablet, and so to text, surf the Internet and control connected technologies.

Synchron’s co-founder, neurologist Thomas Oxley, says the company is now submitting the results of a four-person feasibility trial for publication, in which participants used the wireless device at home whenever they chose. “There’s nothing sticking out of the body. And it’s always working,” says Oxley. The next step before applying for FDA approval, he says, is a larger-scale trial to assess whether the device meaningfully improves functionality and quality of life.

Challenges ahead

Most researchers working on BCIs are realistic about the challenges before them. “If you take a step back, it is really more complicated than any other neurological device ever built,” says Shenoy. “There’s probably going to be some hard growing years to mature the technology even more.”

Orsborn stresses that commercial devices will have to work without expert oversight for months or years — and that they need to function equally well in every user. She anticipates that advances in machine learning will address the first issue by providing recalibration steps for users to implement. But achieving consistent performance across users might present a greater challenge.

“Variability from person to person is the one where I don’t think we know what the scope of the problem is,” Orsborn says. In non-human primates, even small variations in electrode positioning can affect which circuits are tapped. She suspects there are also important idiosyncrasies in exactly how different individuals think and learn — and the ways in which users’ brains have been affected by their various conditions.

Finally, there is widespread acknowledgement that ethical oversight must keep pace with this rapidly evolving technology. BCIs present multiple concerns, from privacy to personal autonomy. Ethicists stress that users must retain full control of the devices’ outputs. And although current technologies cannot decode people’s private thoughts, developers will have records of users’ every communication, and crucial data about their brain health. Moreover, BCIs present a new type of cybersecurity risk.

There is also a risk to participants that their devices might not be supported forever, or that the companies that manufacture them might fold. There are already instances in which users were let down when their implanted devices were left unsupported.

Degray, however, is eager to see BCIs reach more people. What he would like most from assistive technology is to be able to scratch his eyebrow, he says. “Everybody looks at me in the chair and they always say, ‘Oh, that poor guy, he can’t play golf any more.’ That’s bad. But the real terror is in the middle of the night when a spider walks across your face. That’s the bad stuff.”

For Johnson, it’s about human connection and tactile feedback; a hug from a loved one. “If we can map the neurons that are responsible for that and somehow filter it into a prosthetic device some day in the future, then I will feel well satisfied with my efforts in these studies.”