Meta hopes to use tiny human expressions to create a virtual world of personalised ads

21 January 2022 (Paris, France) – As I have noted before, the term “metaverse” has a lot in common with “information superhighway”, both in the sense of a single unified system, and in the creation of a term well in advance of any actual product. But then products and methods and mechanisms appear and voilà – all of a sudden it is not just a term but a real thing.

Yes, the metaverse (so far) is mostly hopes and dreams, but proponents say it will be a digital realm where people routinely use virtual avatars to work, play, shop and enjoy entertainment. Microsoft’s acquisition of Activision Blizzard certainly demonstrates that, as I explained in my analysis earlier this week.

And then there is Facebook (Meta). Pupil movements, body poses and nose scrunching are among the flickers of human expression that Meta wants to harvest in building its metaverse, according to an analysis of dozens of patents recently granted to Facebook’s parent company. Zuckerberg has pledged to spend $10bn a year over the next decade on the nebulous and much-hyped concept denoting an immersive virtual world filled with avatars. Even Apple is pursuing similar aims that Big Tech executives describe as part of the next evolution of the internet.

NOTE: Apple earlier this month briefly became the first corporation ever valued above $3 trillion during intraday trading. Investors and analysts say they are betting that Apple will introduce extended reality devices in the next year or so and open up potential for a new leg of growth in coming years. The company, for its part, says little about future plans, though Tim Cook has praised such technology in recent years and said it would be a critically important part of Apple’s future. Analysts think Apple could extend its ecosystem of hardware, software and services into the metaverse. But that’s a subject for a future post.

The Financial Times has reviewed hundreds of Facebook/Meta applications to the U.S. Patent and Trademark Office, many of which were granted this month. I went through a large portion of the patent filings myself, read the Financial Times analysis and a few media interviews on the subject, and then ran the whole lot by my IP crowd and my tech/software mavens. All of this reveals that Meta has patented multiple technologies that wield users’ biometric data in order to help power what the user sees and ensure their digital avatars are animated realistically. But the patents also indicate how the Silicon Valley group intends to cash in on its virtual world, with hyper-targeted advertising and sponsored content that mirrors its existing $85bn-a-year ad-based business model.

This includes proposals for a “virtual store” where users can buy digital goods, or items that correspond with real-world goods that have been sponsored by brands. Said Nick Clegg, Meta’s head of global affairs, during a recent interview:

“Clearly ads play a part in that. For us, the business model in the metaverse is commerce-led”.

The patents do not mean that Meta will definitely build the technology, but they offer the clearest indication yet of how the company aims to make its immersive world into a reality.

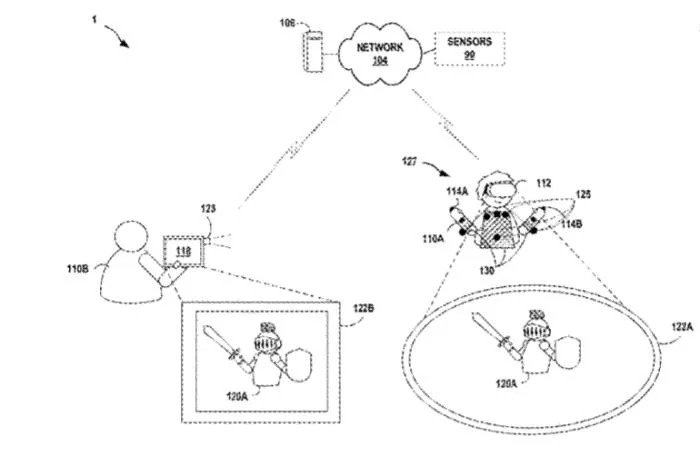

ABOVE: Meta patent filing showing a ‘wearable magnetic sensor system’. Sketch gives example of a soldier in sword and armour appearing in a virtual world © Meta patent

Some of the patents relate to eye and face tracking technology, with data typically collected in a headset via tiny cameras or sensors, which may be used to enhance a user’s virtual or augmented reality experience. For example, a person might be shown brighter graphics where their gaze falls, or the system might ensure their avatar mirrors what they are doing in real life. One Meta patent, granted on January 4, lays out a system for tracking a user’s facial expressions through a headset that will then “adapt media content” based on those responses.

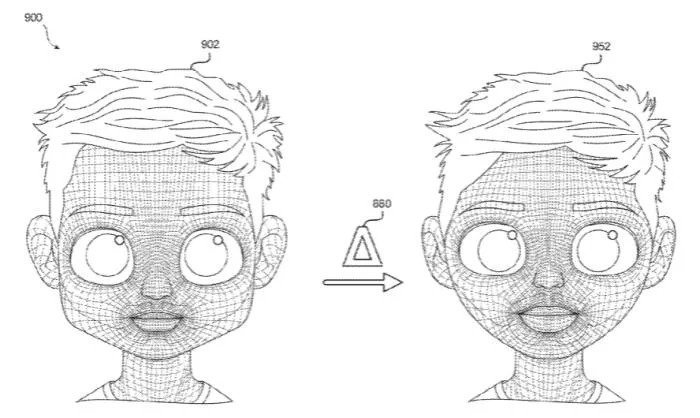

There is a “wearable magnetic sensor system” to be placed around a torso for “body pose tracking”. The patent includes sketches of a user wearing the device but appearing in virtual reality as a soldier complete with a sword and armour. Another patent proposes an “avatar personalisation engine” that can create three-dimensional avatars based on a user’s photos, using tools including a so-called skin replicator. Noelle Martin, a legal reformer with the University of Western Australia, has spent more than a year researching Meta’s human-monitoring ambitions and she noted:

“Meta aims to be able to simulate you down to every skin pore, every strand of hair, every micromovement. The objective is to create 3D replicas of people, places and things, so hyper-realistic and tactile that they’re indistinguishable from what’s real, and then to intermediate any range of services. In truth, they’re undertaking a global human-cloning programme.”

ABOVE: Meta patent application image showing an ‘avatar personalisation engine’ that can create 3D avatars based on a user’s photos using tools such as a so-called skin replicator © Meta patent application

The project has allowed the company, which in recent times has been stung by other scandals over moderation and privacy, to attract engineers from rivals such as Microsoft amid a fierce battle for talent between the world’s biggest technology companies.

NOTE: Since changing its name from Facebook to Meta in late October in a corporate rebranding, the company’s share price has risen about 5 per cent to $329.21. Critics remain sceptical of the vision, suggesting the effort is a distraction from recent scrutiny after whistleblower Frances Haugen last year publicly accused the company of prioritising profit over safety. What are they going to do with more data and how are they going to make sure it is secure? Do they care?

Some patents appear focused on helping Meta with its ambitions to find new revenue sources amid concern over fading interest among younger users in its core social networking products such as Facebook. Zuckerberg has indicated the company plans to keep the prices of its headsets low and instead draw revenues in its metaverse from advertising, and by supporting sales of digital goods and services in its virtual world.

One patent explores how to present users with personalised advertising in augmented reality, based on age, gender, interest and “how the users interact with a social media platform”, including their likes and comments. Another seeks to allow third parties to “sponsor the appearance of an object” in a virtual store that mirrors the layout of a retail store, through a bidding process similar to the company’s existing advertising auction process. The patents indicate how Meta could offer ads in its immersive world that are even more personalised than what is possible within its existing web-based products.

Research shows that eye gaze direction and pupil activity may implicitly contain information about a user’s interests and emotional state. For example, if a user’s eyes linger over an image, this may indicate they like it. Those of you who followed my series on the work of the International Federation for Information Processing (IFIP) know that technologies to measure gaze direction and pupil reactivity have become efficient, cheap, and compact and are finding increasing use in many fields, including gaming, marketing, driver safety, military, and healthcare. To summarise my 3-part series:

• Through the lens of advanced data analytics, gaze patterns can reveal much more information than a user wishes and expects to give away.

• Drawing from a broad range of scientific disciplines, the IFIP has provided a structured overview of personal data that can be inferred from recorded eye activities.

• Its analysis of the literature shows that eye tracking data may implicitly contain information about a user’s biometric identity, gender, age, ethnicity, body weight, personality traits, drug consumption habits, emotional state, skills and abilities, fears, interests, and sexual preferences.

• Certain eye tracking measures may even reveal specific cognitive processes and can be used to diagnose various physical and mental health conditions.

• By portraying the richness and sensitivity of gaze data, all of these papers provide an important basis for consumer education, privacy impact assessments, and further research into the societal implications of eye tracking.

Nick Clegg clearly alluded to all of this in another interview:

“Clearly, you could do something similar to existing ad targeting systems in the metaverse — where you’re not selling eye-tracking data to advertisers, but in order to understand whether people engage with an advertisement or not, you need to be able to use data to know”.

Brittan Heller, a well-known technology lawyer at Foley Hoag who has built a specialised practice around the areas of law, technology, content moderation and human rights (an expert on disinformation, civic engagement, cyberhate and online extremism), puts it rather bluntly:

“My nightmare scenario is that targeted advertising based on our involuntary biological reactions to stimuli is going to start showing up in the metaverse. Most people don’t realise how valuable that could be. Right now there are no legal constraints on that.”

Meta’s response was classic Big Tech:

“While we don’t comment on specific coverage of our patents or our reasons for filing them, it’s important to note that our patents don’t necessarily cover the technology used in our products and services.”

Get out your 🍿