23 October 2020 (Serifos, Greece) – As I have noted in my coverage of the Google antitrust suit filed by the DOJ this week, and as I have said in previous posts, there is more involved here than just legal questions. There are complex dynamics at work. We need to examine and distinguish between different kinds of economies of scale (operational, technical, and innovative), and the necessary and legal structures.

The DOJ case may not address any of these issues. Most telling was a comment made to me by a senior staff attorney at a U.S. law firm which will be heavily involved in defending Google in this DOJ suit: “We kind of breathed a sigh of relief. They did not come after us for the really big stuff”. It added credence to the stories in the media that reported many DOJ attorneys working on the case (some of whom resigned) believed it was rushed for political gain.

The power of Google (and Apple and Amazon and Facebook) has forced a reinterpretation of narratives, data, software, and news events – in so many ways. What we have are four companies aggressively competing and cooperating with each other, driving each other on, and each trying somehow to commoditise the others’ businesses. But none of them quite poses a threat to the others’ core. News and information flow differently now on Google News than they did before Facebook. Yet capturing the human attention that constitutes that flow is Facebook’s raison d’être (and its value to advertisers), not Google’s. Apple won’t do better search than Google and Amazon won’t do better operating systems than Apple. But the adjacencies and the new endpoints that they create do overlap, even if these companies get to them from different directions. Different reasons, different philosophies, but similar endpoints.

And you cannot understand it without diving into the technology. Last week I finally completed my paper on how the Google-dominated programmatic ad world works, which you’ll all receive in due course. Over the last few days I have been attending various sessions on entertainment and the Internet of Things at the virtual Cannes Lions event.

I tend to wax lyrical about Cannes Lions. It is what I like to call “The Conference of the World’s Attention Merchants”, because over the last decade the festival has evolved to include not only more brand clients but also, due to media fragmentation which has led to the change of some and the birth of others, tech companies, social media platforms, consultancies, and entertainment and media companies: essentially the entire attention ecosystem. Now that I am retired, and assuming they revive (and I am still alive), the only events I’ll be attending are Cannes Lions and the Mobile World Congress in Barcelona.

So, pulling together my notes from the Cannes Lions sessions plus my research notes, let’s take a brief look at how Google has assembled (almost) all the parts of a truly smart home – DOJ lawsuits and data privacy be damned.

When Google Now was introduced in 2012, I wrote a post “Context is king” (now lost because it was on a previous web site since gone mute). My point was that the writing was on the wall: here was a system that would develop to know who you are, where you are, and what you’re doing. It would contextually deliver information or actions that are hyper-relevant to you. But while hyper-relevant information and services are useful when you’re out and about, they’re even more useful when you’re at home.

And now … ta-da! … Google has the pieces to deliver them in the smart home.

Above: the new Nest Wifi router and Nest Wifi point, which doubles as a Google Assistant speaker

Some of those pieces Google has built up over years – namely, the treasure trove of personal data users provide to it when using its web search services. Many people rightly focus on how Google uses that information to provide you with contextual ads.

But if you use Google Maps, it also knows where you are, where you’ve been, and where you might be going. Search on your computer for a hardware store, for example, and Maps can surface the location on your phone when you leave the house. And if you’ve configured it with your home and work address, Google matches time and location with those destinations. It’s why you might see a notification telling you to leave for work earlier than usual due to traffic.
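The arithmetic behind a "leave earlier" nudge is simple enough to sketch in a few lines of Python. This is only an illustration under my own assumptions: the function names, the travel times, and the 9:00 start are hypothetical, and nothing here reflects how Google Maps actually computes its notifications.

```python
from datetime import datetime, timedelta


def suggested_departure(arrive_by: datetime, travel_minutes: int) -> datetime:
    """Latest departure that still reaches the destination on time."""
    return arrive_by - timedelta(minutes=travel_minutes)


def minutes_earlier_than_usual(usual_travel: int, current_travel: int) -> int:
    """How much earlier to leave today; 0 if traffic is normal or lighter."""
    return max(0, current_travel - usual_travel)


# Hypothetical example: work starts at 9:00, the commute usually takes
# 35 minutes, but today's traffic pushes it to 50 minutes.
work_start = datetime(2020, 10, 23, 9, 0)
print(suggested_departure(work_start, 50))   # 2020-10-23 08:10:00
print(minutes_earlier_than_usual(35, 50))    # 15
```

The point is less the subtraction than the inputs: the system needs your home address, your work address, your usual schedule, and live traffic before it can run it.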

Context in the home is a challenge

The type of contextual information that Google builds up about you is more difficult to gather when you’re at home, as most of us are these days. That’s because, in the home, Google needs different information about you. And it’s not information that you might type into a search box or ask your Google Assistant.

A smart home needs to know who is home, what room they’re in, and how they may like their environment to be, such as in terms of lighting and temperature. That contextual information comes from sensors, cameras, microphones, and the like. It’s why back in May Google added a feature to its Nest Aware subscription plan that alerts you when your Nest products hear certain sounds. The first two contextual alerts offered were breaking glass, which may indicate a break-in, and smoke alarm sirens, which signal an emergency. Those are relatively simple audio events to listen for, but Google has AI and ML smarts that could listen for and interpret less clear-cut situations, too.

Every smart device is a data endpoint

Even without a camera in your kitchen, a nearby Nest audio product would be able to hear the sound of running water, know which direction the sound is coming from, and recognize that it’s the kitchen sink. In other words, even without video proof Google can detect that someone is present in the kitchen.

Let’s take things a step further. Suppose you have a connected “Works with Google” toothbrush that Google knows you use every morning just prior to leaving for work. By taking that context and combining it with other information it already has, Google could offer to remotely start your car as you brush when the temperature outside is below freezing, because why sit with clean but chattering teeth for the first five minutes of your commute?
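To make the toothbrush scenario concrete, here is a minimal Python sketch of the kind of rule such a system could evaluate. Everything in it is hypothetical: the `HomeContext` fields, the `should_offer_remote_start` function, and the 0 °C threshold are my own illustrative assumptions, not anything Google has published.

```python
from dataclasses import dataclass


@dataclass
class HomeContext:
    """Hypothetical snapshot of signals a smart home might combine."""
    toothbrush_active: bool     # connected toothbrush currently in use
    is_workday_morning: bool    # inferred from calendar and routine
    outside_temp_c: float       # reported by a weather service


FREEZING_C = 0.0  # assumed threshold for "below freezing"


def should_offer_remote_start(ctx: HomeContext) -> bool:
    """Return True only when all three contextual signals line up."""
    return (
        ctx.toothbrush_active
        and ctx.is_workday_morning
        and ctx.outside_temp_c < FREEZING_C
    )


cold_morning = HomeContext(True, True, -3.5)
mild_morning = HomeContext(True, True, 8.0)
print(should_offer_remote_start(cold_morning))  # True
print(should_offer_remote_start(mild_morning))  # False
```

No single signal here is remarkable. What matters is that one party holds all three at once.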

That “Works with Google” program is a key element here, and not just because it may allow voice control for devices. Each of these supported products is also an endpoint for Google to gather more contextual information about us and our homes.

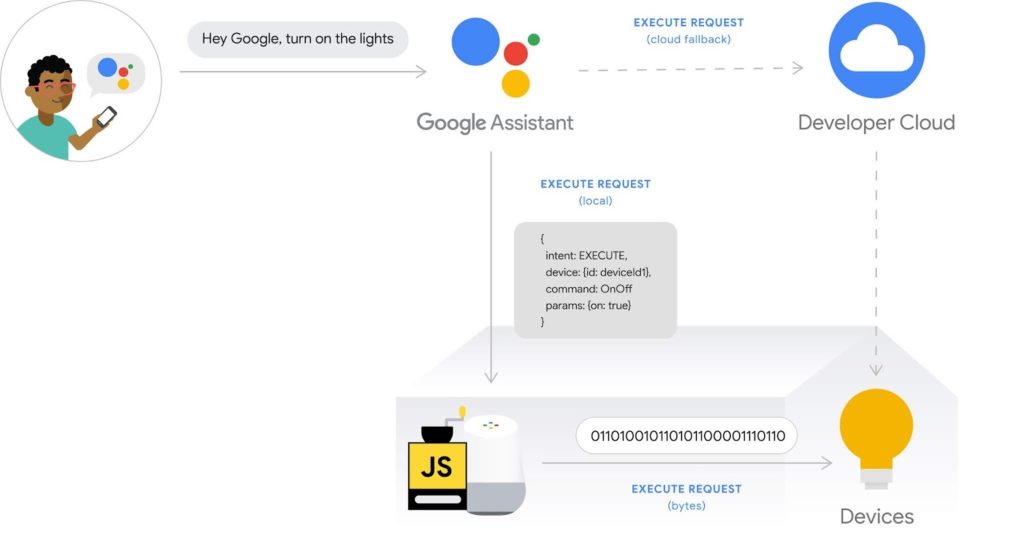

Don’t forget about Google’s “Voice Match” feature and its move towards local processing. Currently, Google matches contextual information with the person asking about their calendar events and such. It knows who is asking the question, so it can potentially determine who is at home or even who is in what room simply by hearing that person’s voice.

Yes, data privacy experts/mavens reading this, that sounds pretty damn invasive. However, in their defence (*choke*) Google is making a push for localized actions on its smart speakers so that the captured audio and other data stays in the home instead of getting uploaded to its servers. This could ease some of the sense of invaded privacy people may feel.

Presence detection is camera-less and invisible

Google has also been making advances in detecting people in front of its smart devices. Nest Hub, Nest Hub Max, Nest Mini, and Nest Wifi can all use ultrasonic pulses to detect when someone is nearby.

Note: the latter two devices don’t have cameras. Yet Google is able to detect presence in the smart home with an alternative solution. It can do the same with Nest thermostats as well, with a traditional IR proximity sensor.

And just this week, it was reported that Google is testing a project called “Blue Steel” on the Nest Hub Max. Simply by standing in front of the smart speaker, a person can ask Google questions or issue smart home commands without saying the “OK Google” hotword. Since “Blue Steel” is still an internal project, it’s not yet clear if Google is using ultrasonic pulses for person detection. One way or another, a camera-less approach to context in the smart home is important because it eliminates something many people, including my family, don’t want – cameras in every room of the house.

The smart home has come far in 2 short years

Whether a smart home uses cameras, microphones, or other sensors, it all brings me back to the 2019 Consumer Electronics Show. The general consensus was the smart home really wasn’t that *smart*. Almost all the media reports coming out of that event said the same thing: what was needed were devices that require less user interaction from a voice or programming standpoint to make them smart. Instead, by algorithmically learning our personal preferences and schedules, a smart home with predictive intelligence should optionally suggest what to turn on, watch, or listen to as well as when, where and in the best lighting/temperature climate for a truly personalized and smart home experience.

Well, we’re not quite there yet but given just Google’s efforts to advance and mature its products, combining them with AI, ML, and our data profiles, we’re on our way – for better or worse – to an intelligent, contextual smart home experience. Your call if you want it.

But this will happen.