“The Lives of Others”: the Siri, Google and Cortana edition


12 April 2019 (Brussels, Belgium) – This past December, when I was in the States, I had the opportunity to hear a presentation by Florian Schaub, a professor at the University of Michigan who has probably done the most research on the technical operation of smart speakers … and the massive privacy issues related to those speakers … and has become the “go to” source for that technology. As he stated in the December presentation, and as he has been quoted this week, we don’t necessarily think another human is actually listening to what we tell our smart speakers in the intimacy of our homes. We just think these machines are doing “magic” machine learning. But the fact is there is still manual processing involved.

And if you don’t subscribe to Bloomberg Technology, you should. At last week’s International Journalism Festival in Perugia, Italy (I’ll have a full report next week) they were the talk of the town, having built up a mighty force of investigative reporters and blowing the lid off a number of technology stories. And this week they did it again. They have unearthed the secrets of Amazon’s analysts in Romania, reporting on their work for the first time:

A global team reviews audio clips in an effort to help the voice-activated assistant respond to commands. Amazon has not previously acknowledged the existence of this process, or the level of human intervention.

You can read the full story by clicking here.

Amazon, in its marketing and privacy policy materials, doesn’t explicitly say humans are listening to recordings of some conversations picked up by Alexa. Its website does say “We take the security and privacy of our customers’ personal information seriously. We have strict technical and operational safeguards, and have a zero tolerance policy for the abuse of our system”. It also says that employees do not have direct access to information that can identify the person or account as part of this workflow.

But they do say “We use your requests to Alexa to train our speech recognition and natural language understanding systems. We only annotate an extremely small sample of Alexa voice recordings in order to improve the customer experience. For example, this information helps us train our speech recognition and natural language understanding systems, so Alexa can better understand your requests, and ensure the service works well for everyone”.

That last paragraph must refer to the Romanians, especially given this recent Amazon job posting that one of my Romanian sources sent me, placed by Alexa Data Services in Bucharest and looking for “human reviewers”:

Every day she [Alexa] listens to thousands of people talking to her about different topics and different languages, and she needs our help to make sense of it all. This is big data handling like you’ve never seen it. We’re creating, labeling, curating and analyzing vast quantities of speech on a daily basis.

The Bloomberg story has been picked up (and added to) by the Financial Times, the Register, The Intercept, and a host of other sites. So I have read through all the material, called a few of my tech geek contacts to get their read, and done a bit of a mash-up for you.

Those damn sneezes!

Sneezes and homophones (words that sound like other words) are messing with smart speakers. So something had to be done … like allowing strangers to hear recordings of your private conversations. As one of my cyber security colleagues, Jack Kaft, said:

This Romania thing. I do find it somewhat amusing that we expect non-native speakers of any language that can be hired cheaply to accurately work out something said in a mumble. Or understand local slang. Or patois. Which means it is unlikely to improve things much. But then I can actually answer my own question. Because it cuts to the nub of the issue. It’s all about cheap labor. And having worked on similar contracted projects, if these guys get it right say 50% of the time, then corporate is happy. Oh, the consumer just does not get how the tech world works.

And it’s a little like that Stasi agent in the movie “The Lives of Others”. The human reviewers are contracted to work for the device manufacturer as machine learning data analysts, and the snippets they hear were never intended for third-party consumption. But this stuff gets released, nonetheless.

Most amusing story I came across? In the UK, an Alexa user asked for the top ten hookers (a position in rugby) in the UK but kept getting sex worker references. Turns out the UK Alexa was interpreting the request based on its U.S. programming.

As the Financial Times explained earlier this week in part of its continuing series on AI:

Supervised learning requires what is known as “human intelligence” to train algorithms, which very often means cheap labour in the developing world. So Amazon sends fragments of recordings to the training team to improve Alexa’s speech recognition. Thousands are employed to listen to Alexa recordings in Boston, India and Romania.

Huh. Boston. The developing world. They must mean South Boston. But more amusing(?) is that the Financial Times searched Amazon’s Mechanical Turk marketplace and found one ad for a “human intelligence task” that would pay someone 25 cents to spend 12 minutes teaching an algorithm to make a green triangle navigate a maze to reach a green square. And another to decipher voice segments. Also at 25 cents. At 12 minutes per task, that’s an hourly rate of $1.25.
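For anyone who wants to check the math, here is a minimal sketch of that hourly rate. The function name `hourly_rate` is my own illustration, and it assumes tasks are done back to back with no unpaid time in between, which is generous.

```python
def hourly_rate(pay_per_task_usd: float, minutes_per_task: float) -> float:
    """Hourly earnings, assuming tasks run back to back with no gaps (an assumption)."""
    tasks_per_hour = 60 / minutes_per_task
    return pay_per_task_usd * tasks_per_hour


# The Mechanical Turk ad described above: 25 cents for a 12-minute task.
print(f"${hourly_rate(0.25, 12):.2f} per hour")
```

Five tasks fit in an hour, so five times 25 cents. Any time spent finding the next task only pushes the real figure lower.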

As the Bloomberg article points out, Alexa (should) only respond to a “wake word”, at least according to Amazon. However, because Alexa can misinterpret sounds and homophones as its default wake word, the Romania team was able to hear audio never intended for transmission to Amazon. The team received recordings of embarrassing and disturbing material, including at least one sexual assault. Interesting to note that Amazon encourages staff disturbed by what they hear to console each other, but didn’t elaborate on whether counselling was available.

Privacy? Did somebody mention privacy?

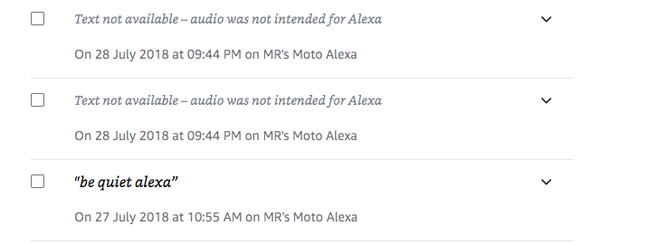

The retention of the audio files is purportedly voluntary, but this is far from clear in the information Amazon gives users. Amazon and Google allow the voice recordings to be deleted from your account, for example, but this may not be permanent: the recording could continue to be used for training purposes (Google’s explanation can be found here, and Amazon’s explanation can be found here). But a personal history shows Amazon continues to hold audio files of data “not intended for Alexa”.

Privacy campaigners cite two areas of concern:

1. Voice platforms should offer an “auto purge” function deleting recordings older than a day, or 30 days

2. There has to be some assurance that, once deleted, a file is gone forever. Right now, neither Amazon nor Google has provided any kind of formal legal clarity.

Oh, yes, those smart TVs

Not covered in the smart speaker stories, but much discussed at the Mobile World Congress in February and in my tech geek chats this week, are those “smart TVs” connected to the web with their all-hearing microphones. And the fact that all of these TVs have “connected to the web” enabled by default. So you need to go through all kinds of machinations to disable the microphone function. And it is pretty tough to just buy a simple, “dumb” TV. Well, except in the Far East where there is a lively trade in dumb TVs.

Yes, you can make a smart TV a dumb TV by not allowing it to connect to the internet. Don’t plug in that RJ-45 network cable, and don’t give it the WiFi login details (or, if it must be connected, put it on an isolated, firewalled connection). Of course, some people will point out that if the manufacturers are persistent enough, they could do things like have the TV’s WiFi automatically connect to open WiFi networks, or embed 3G/LTE modems into the devices, or other similar shenanigans. But, if you are sufficiently paranoid about that happening, this can be defeated by disabling those components or disconnecting them from the antennas they use.

Favorite story: most of the new Samsung TVs have “Voice Recognition” so many buyers just turn it off. And many wonder if Samsung actually bothers to honor your choice of “privacy” settings after that eavesdropping firestorm a few years ago. A few of my chums are doing some experiments to see.

So you are only totally screwed if the smart TV requires an “always-on” internet connection to even work. My Geek Squad was not aware of any smart TVs that have gone that far yet. Yet.

Oh, yes, and those new smoke alarms and other devices that have a microphone … that they didn’t tell you about, and you didn’t dream would have one. No, there is nothing in the settings manual to allow you to turn it off. Of course they aren’t documented … otherwise you would have known there was a microphone to turn off.

And let’s be clear. Be it Amazon or Apple or Google or any of the others, they have teams of highly paid lawyers whose specific job is to craft end-user license agreements (EULAs) or software license agreements that will allow them to do whatever it is they want, whether or not you consent. It all starts with that magical phrase in every EULA: “we may share your data with third parties in order to enhance our product and improve your experience”.

And if you have looked at any of the recent EU EULA updates to account for GDPR, your “consent” is in there. Is it “informed consent”? Is the EULA illegal and so void and therefore unenforceable? OK, then just go through an expensive court case to find out. Or if in Europe, call your national data protection authority. Better yet, call Max Schrems.

Ah, yes. Big tech is about as honest, transparent and moral as your local drug dealer. Well, actually, my local drug dealer is far more honest. And he is offering Easter Week specials!