“AI is anything that doesn’t work yet” – Larry Tesler

12 July 2022 – Over the last 10 years I’ve had the opportunity to read numerous books and long-form essays that question mankind’s relation to the machine – best friend or worst enemy, saving grace or engine of doom? Such questions have been with us since James Watt’s introduction of the first efficient steam engine in the same year that Thomas Jefferson wrote the Declaration of Independence. My primary authors of late have been Charles Arthur, Ugo Bardi, Wendy Chun, Jacques Ellul, Lewis Lapham, Donella Meadows, Maël Renouard, Matt Stoller, and Simon Winchester.

But lately these questions have been fortified with the not entirely fanciful notion that machine-made intelligence, now freed like a genie from its bottle, has moved beyond its birth pangs. These are no longer the “early years” of machine learning and AI. It is growing phenomenally, assuming the role of some fiendish Rex Imperator. Many feel it will ultimately come to rule us all, forever, and with no evident existential benefit to the sorry and vulnerable weakness that is humankind.

A decade into the artificial intelligence boom, scientists in research and industry have made incredible breakthroughs. Increases in computing power, theoretical advances and a rolling wave of capital have revolutionised domains from biology and design to transport and language analysis. In this past year alone, advances in artificial intelligence have led to small but significant scientific breakthroughs across disciplines. AI systems are helping us explore spaces that we couldn’t previously access. In biochemistry, for example, researchers are using neural networks that “hallucinate” proteins with new, stable structures, a breakthrough that expands our capacity to understand how proteins are constructed. In mathematics, researchers at Oxford, working with DeepMind, are using AI to develop fundamentally new techniques. With DeepMind’s help they established a new theorem in knot theory by connecting algebraic and geometric invariants of knots.

Meanwhile, NASA scientists applied these developments to data from the Kepler space telescope. Using a neural network called ExoMiner, they validated 301 new exoplanets outside our solar system. We don’t need to wait for AI to create thinking machines and artificial minds to see dramatic changes in science. By enhancing our capacity, AI is transforming how we look at the world.

And some personal advice: never be ashamed of taking baby steps or making really basic beginnings to get into this stuff. Years ago I dove head first into natural language processing and network science, and then pursued a certificate of advanced studies at ETH Zürich, because I immediately understood that I had to learn this stuff to really know how tech worked. I was fortunate to begin with a (very) patient team at the Microsoft Research Lab in Amsterdam, who gave me a masterclass in basic machine learning, speech recognition, and natural language understanding. It was elementary, but it gave me a foundation for reaching more sophisticated levels of ML and AI.

Which takes me to my most fun read last year:

This edition of Frankenstein was annotated specifically for scientists and engineers. Like the creature himself, it is the first of its kind, and just as monstrous in its composition and development, created to educate students pursuing science, technology, engineering, and mathematics (STEM). Until this edition, Frankenstein had been edited and published primarily for, and read by, humanities students, who are equally in need of this cautionary tale about forbidden knowledge and playing God. As one modern reader of the novel has put it:

“Most fear what they do not understand. The psychological effects may not have been considered while creating, or even in adaptation to technology. With each adaptation there is error. Is this error the result of differences within a new evolution in which one must learn on their own and be used as a guide for a re-evolution?”

All of which is so appropriate, because the action in Frankenstein takes place in the 1790s, by which time James Watt (1736–1819) had radically improved the steam engine and in effect started the Industrial Revolution, which accelerated the development of science and technology as well as medicine and machines in the nineteenth century. The new steam engine powered paper mills, printed newspapers, and further developed commerce through steamboats and then trains. These same years were charged by the French Revolution, and anyone wishing to do a chronology of the action in Frankenstein will discover that Victor went off to the University of Ingolstadt in 1789, the year of the Fall of the Bastille, and he developed his creature in 1793, the year of the Reign of Terror in France. Terror (as well as error) was the child of both revolutions, and Mary’s novel records the terrorizing effects of the birth of the new revolutionary age, in the shadows of which we still live. (Hat tip to my researcher, Catarina Conti, for pulling out those historical nuggets.)

Which seems so often to have been the way with the new, with mishaps being so frequently the inadvertent handmaiden of invention. Would-be astronauts burn up in their capsule while still on the ground; rubber rings stiffen in an unseasonable Florida cold and cause a spaceship to explode; an algorithm misses a line of code, and a million doses of vaccine go astray; where once a housefly might land amusingly on a bowl of soup, now a steel cog is discovered deep within a factory-made apple pie. Such perceived shortcomings of technology inevitably provide rich fodder for a public still curiously keen to scatter schadenfreude where it may, especially when anything new appears to etch a blip onto their comfortingly and familiarly flat horizon.

While technology as a concept traces back to classical times, when it described the systematic treatment of knowledge in its broadest sense, its more contemporary usage relates almost wholly to the mechanical, the electronic, or the atomic. And though the consequences of all three have been broad, ubiquitous, and profound, we have really had little enough time to consider them thoroughly. The sheer newness of the thing intrigued Antoine de Saint-Exupéry when he first considered the sleekly mammalian curves of a well-made airplane. We have just three centuries of experience with the mechanical new and even less time – under a century – fully to consider the accumulating benefits and disbenefits of its electronic and nuclear kinsmen.

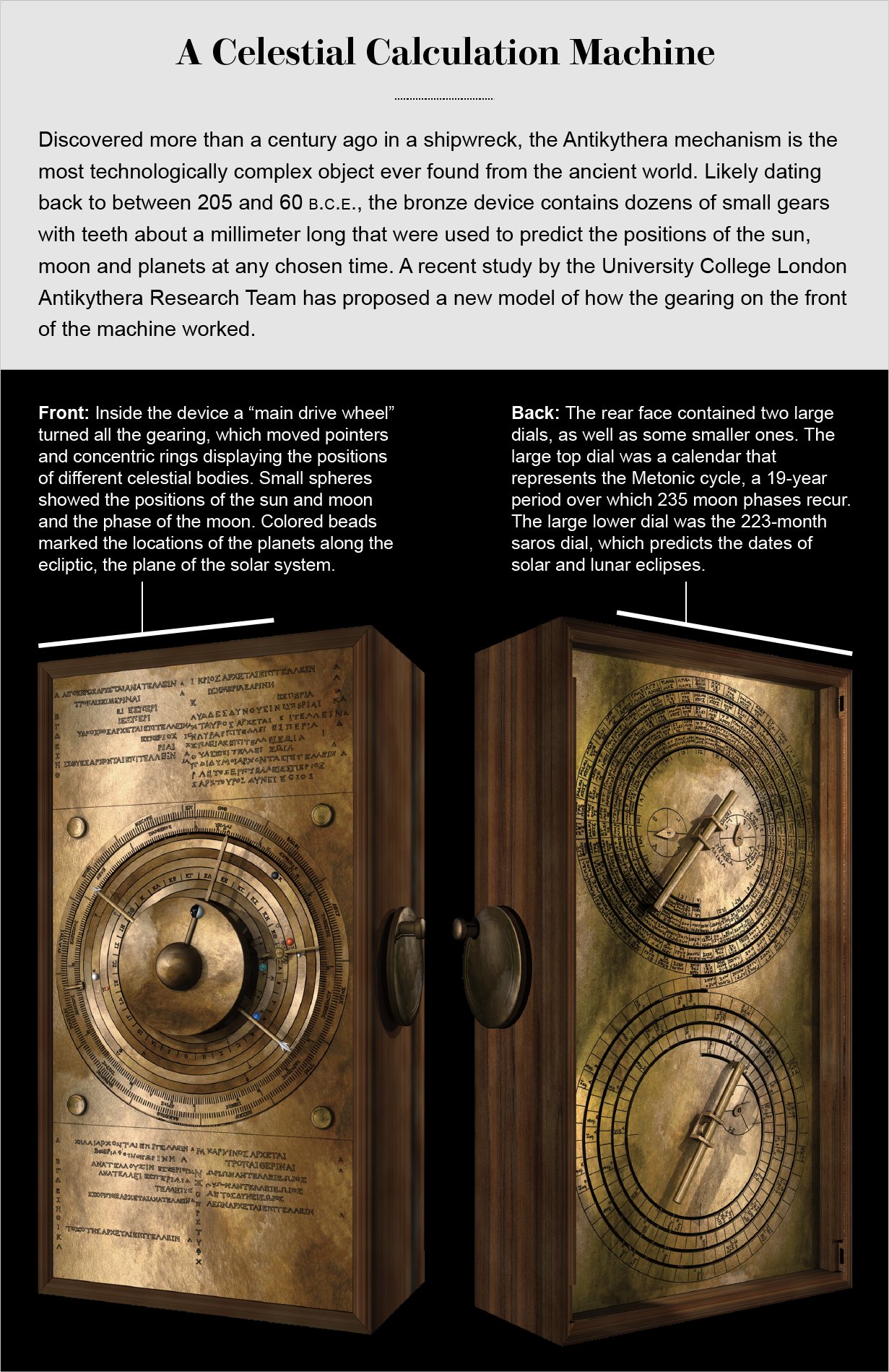

Except, though, for one curious outlier, which predates all and puzzles us still. The Antikythera mechanism is a device recovered from the Aegean by sponge divers more than a century ago:

Main drive wheel of the Antikythera mechanism, as seen in a photograph of its present state (Courtesy of the National Archaeological Museum in Athens, Greece)

In 1900 diver Elias Stadiatis, clad in a copper and brass helmet and a heavy canvas suit, emerged from the sea shaking in fear and mumbling about a “heap of dead naked people.” He was among a group of Greek divers from the Eastern Mediterranean island of Symi who were searching for natural sponges. They had sheltered from a violent storm near the tiny island of Antikythera, between Crete and mainland Greece. When the storm subsided, they dived for sponges and chanced on a shipwreck full of Greek treasures – the most significant wreck from the ancient world to have been found up to that point. The “dead naked people” were marble sculptures scattered on the seafloor, along with many other artifacts.

Soon after, their discovery prompted the first major underwater archaeological dig in history. One object recovered from the site, a lump the size of a large dictionary, initially escaped notice amid more exciting finds. Months later, however, at the National Archaeological Museum in Athens, the lump broke apart, revealing bronze precision gearwheels the size of coins. According to historical knowledge at the time, gears like these should not have appeared in ancient Greece, or anywhere else in the world, until many centuries after the shipwreck. The find generated huge controversy.

The lump is known as the Antikythera mechanism, an extraordinary object that has befuddled historians and scientists for more than 120 years. Over the decades the original mass split into 82 fragments, leaving a fiendishly difficult jigsaw puzzle for researchers to put back together. The device appears to be a geared astronomical calculation machine of immense complexity. Today we have a reasonable grasp of some of its workings, but there are still unsolved mysteries. We know it is at least as old as the shipwreck it was found in, which has been dated to between 60 and 70 B.C.E., but other evidence suggests it may have been made around 200 B.C.E.

NOTE: In March 2021 a group at University College London, known as the UCL Antikythera Research Team, published a new analysis of the machine. The paper posits a new explanation for the gearing on the front of the mechanism, where the evidence had previously been unresolved. We now have an even better appreciation for the sophistication of the device – an understanding that challenges many of our preconceptions about the technological capabilities of the ancient Greeks.

It is too much to cover in this post, so I’ll just reference the paper here.

I recently spent a day with the Antikythera mechanism (a revisit after a number of years), which included working with a reconstruction and coming to appreciate just how complex this astronomical calculator is:

So whatever technical wonders might later be created in the Industrial Revolution, the Greeks evidently had an aptitude and appetite for mechanics, as even Plutarch reminds us. On the evidence of this one creation, this aptitude seems to have been as hardwired in them as was the fashioning of spearheads or wattle fences or stoneware pots to others in worlds and times beyond.

And so I immediately thought of where we are with artificial intelligence, because it has given us a new kind of algorithmic intelligence that comes with an unsettling prospect, of which one can only say: The future is a foreign country. They will do things differently there.

The whole machine learning / deep learning / neural networks breakthrough is now over a decade old. It works now. There was a period, maybe five to seven years ago, when every other company coming out of Silicon Valley was an “AI company” (some of them weren’t much more than a couple of PhDs with a domain name planning to be an acquihire). AI had suddenly started working, and everyone was very excited about the step change this brought in what we could do with software.

That excitement has mostly gone now, or at least split apart. On one side there is so-called “applied AI”, which means actual products. This or that company doesn’t do “AI” or “natural language processing” – it analyses your Salesforce flows to spot where you could improve your conversion. You’re not selling a neural network anymore – it’s been instantiated into software, product and a route to market that you can sell to CIOs and PMs instead of scientists. It’s just software now. It has “disappeared”, much as then-Google executive chairman Eric Schmidt said in 2015 that the Internet was going to “disappear soon”. He wasn’t referring to a collapse of global data networks. Instead, the number of Web-enabled devices, processes and networks in our lives would simply continue to soar, so that we’d stop thinking of the Internet as a separate entity and recognize it simply as a part of everything around us.

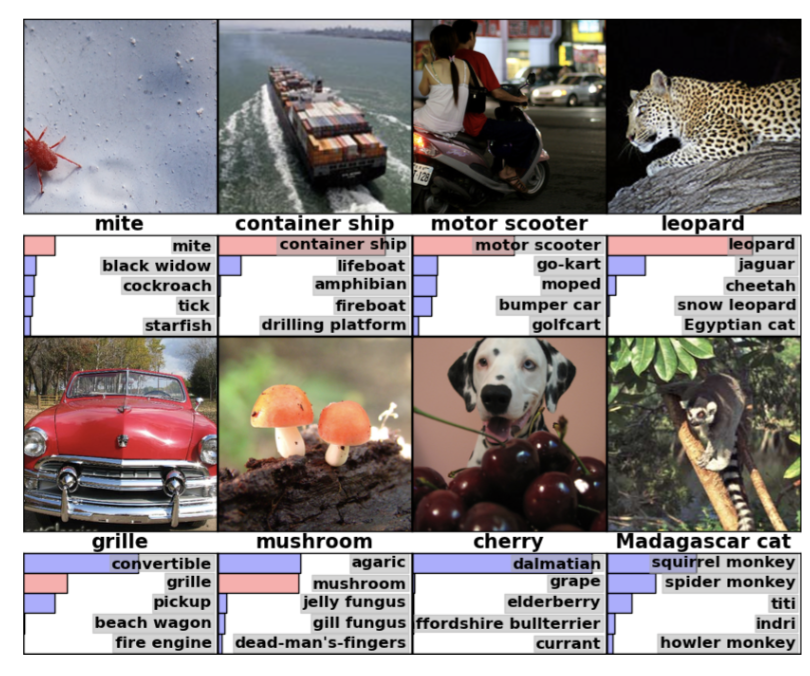

Meanwhile, on the other side we have what one might call “frontier AI”, which means things like DALL-E, Imagen or GPT-3 (soon to be GPT-4). These are research projects that push the frontiers of what it’s possible to do with machine learning and produce astonishing demos, but where it’s not yet entirely clear from the outside how generalisable or useful they are. You will all recognise the early artificial intelligence ImageNet grids:

People shrugged: “Cool, but so what?” Well, as I have written, and as Benedict Evans noted in a recent post, those ImageNet tests had three implications:

First, image recognition, of any kind, was suddenly a solved problem. It made Google Photos possible, which we now take utterly for granted and don’t notice or think of as “artificial intelligence” (“AI is anything that doesn’t work yet”).

Second, it enabled countries like China and Myanmar to build panopticons. It allowed image sensors to become a kind of universal input system – the images aren’t being looked at by people but by machines, whether for industrial quality control, AR (augmented reality) or AVs (autonomous vehicles).

Third, and much more generally, machine learning became a general technique that could be applied to a whole class of problems, which is what really led to the creation of thousands of companies. We worked out how to turn all sorts of things into AI problems, and thereby solved them better.

We haven’t really gone down that path for GPT-3 or DALL-E yet. We have hypothetical conversations about what this means for generating spam, or marvel at the creative covers on The Economist, or even at silly graphics. Here is the result when Casey Newton fed DALL-E the prompt “create the Twitter logo on a hot potato”:

But we don’t have the equivalent of “now you can use image sensors to do automatic telemetry of the ground crew at an airport”.

Or as Benedict Evans recently noted:

We do, however, have lots of conversations about whether any of this takes us closer to AGI (artificial general intelligence), which rather feels like a red herring, since most people in the field don’t think so – and we don’t really agree on what AGI means anyway (both a dog and an octopus have general intelligence, after all).

But all the buzz in tech has gone from a machine that can recognise monkeys to trading monkey pictures, without noticing that we now have a machine that can make monkey pictures. And what else can it make? What kind of problem is that, and what can we turn into that kind of problem?

So, just as the “ImageNet companies” have disappeared because they simply became “software companies”, so will the GPT companies and DALL-E companies. But no, I still do not know how they will disappear.

Technology has eaten society

When moving across a wide landscape, you sometimes cannot wrestle every idea to the ground, so some just float out there for a time. So, to wrap this up, here are some incomplete musings in search of a post. I struggled with this conclusion.

Historically, computers, human and mechanical, have been central to the management and creation of populations, political economy, and apparatuses of security. Without them, there could be no statistical analysis of populations: from the processing of censuses to bioinformatics, from surveys that drive consumer desire to social security databases. Without them, there would be no government, no corporations, no schools, no global marketplace, or, at the very least, they would be difficult to operate.

Tellingly, the beginnings of IBM as a corporation – it began as Herman Hollerith’s Tabulating Machine Company – dovetail with the mechanical analysis of the U.S. census. Before the adoption of these machines in 1890, the U.S. government had been struggling to analyze the data produced by the decennial census (the 1880 census took seven years to process). Crucially, Hollerith’s punch-card-based mechanical analysis was inspired by the “punch photograph” used by train conductors to verify passengers. Similarly, the Jacquard Loom, a machine central to the Industrial Revolution, inspired (via Charles Babbage’s “engines”) the cards used by the Harvard Mark I, an early electromechanical computer. Scientific projects linked to governmentality also drove the development of data analysis: eugenics projects that demanded vast statistical analyses, and nuclear weapons programs that depended on solving difficult partial differential equations.

Importantly, though, computers in our current “modern” period (post-1960s) coincide with the emergence of neoliberalism. As well as control of “masses,” computers have been central to processes of individualization or personalization. “Neoliberalism”, according to David Harvey, is “a theory of political economic practices that proposes that human well-being can best be advanced by liberating individual entrepreneurial freedoms and skills within an institutional framework characterized by strong private property rights, free markets, and free trade”. Although neoliberals, such as the Chicago School economist Milton Friedman, claim merely to be “resuscitating” classical liberal economic theory, Michel Foucault called it for what it really was. His books argue (successfully) that neoliberalism differs from liberalism in its stance that the market should be “the principle, form, and model for a state”. We’ve seen where that has taken us.

But we cannot turn back. Artificial intelligence has permitted Big Tech to build interlinked markets constituted by contemporary information-processing practices. Information services now sit within complex media ecologies, and networked platforms and infrastructures create complex interdependencies and path dependencies. With respect to harms, information technologies have given scientists and policymakers tools for conceptualizing and modeling systemic threats. At the same time, however, the displacement of preventive regulation into the realm of models and predictions complicates, and unavoidably politicizes, the task of addressing those threats.

The power dynamic has changed, because data has become a crucial part of our infrastructure, enabling nearly all commercial and social interactions. Rather than just telling us about the world, data acts in the world, because data is both representation and infrastructure, sign and system. As the brilliant media theorist Wendy Chun puts it, data “puts in place the world it discovers”.

This is why I scoff at attempts to restrict or protect data. AI has led to a massively intermediated, platform-based environment, with endless network effects, commercial layers, and inference data points. The regulatory state must be examined through the lens of the reconstruction of the political economy: the ongoing shift from an industrial mode of development to an informational one. Regulatory institutions will continue to struggle in an era of informational capitalism that they simply cannot understand.

As these systems became more sophisticated and AI became more ubiquitous, we experienced what Big Tech calls a “phase transition” – the transition from collection-and-storage surveillance to mass automated real-time monitoring. At scale. The ship has sailed. Technology has eaten society.

I think that (finally) most of the world has realised that, after decades of developing the Internet and AI, information technologies are not simply new media but new makers of reality. And all of it will “disappear” into our daily lives.

I keep coming back to Jacques Ellul, the French philosopher and sociologist who had a lifelong concern with what he called “technique”:

“Technique constitutes a fabric of its own, replacing nature. Technique is the complex and complete milieu in which human beings must live, and in relation to which they must define themselves. It is a universal mediator, producing a generalised mediation, totalizing and aspiring to totality. The concrete example of this is the city. The city is the place where technique excludes all forms of natural reality. But apart from the city, the only choices left are either the urbanization of rural areas, or ‘desertification’ (nature then being submitted to a technical exploitation controlled by a very small number of people). Technique will be the Milieu in which modern humanity is placed”.

He published three volumes that set out his thinking in detail, explaining “technique” and the role of technology in the contemporary world. In the introduction to the first volume (1954), he notes:

“Technology will increase the power and concentration of vast structures, both physical (the factory, the city) and organizational (the State, corporations, finance) that will eventually constrain us to live in a world that no longer may be fit for mankind. We will be unable to control these structures, we will be deprived of real freedom – yet given a contrived element of freedom – but the result will be that technology will depersonalize the nature of our modern daily life, and we shall not realize it”.