“All of our exalted technological progress, civilization for that matter, is comparable to an ax in the hand of a pathological criminal”

– Albert Einstein, 1917

This is the last of three end-of-the-year essays about transformational technologies (and one very transformational company) that will take us into the next decade and maybe beyond.

Not predictions. Predictions are a fool’s game. These are transformational technologies that have already set the table.

For links to the first essay (“The Language Machines: a deep look at GPT-3, an AI that has no understanding of what it’s saying”) and the second essay (“Amazon: America and the world cannot stop falling under the company’s growing shadow”), plus an introduction to this end-of-the-year series, click here.

22 December 2021 (Malta) – Over the past few years I’ve had the opportunity to read numerous books and long-form essays that question mankind’s relation to the machine – best friend or worst enemy, saving grace or engine of doom? Such questions have been with us since James Watt’s introduction of the first efficient steam engine in the same year that Thomas Jefferson wrote the Declaration of Independence. My primary authors of late have been Charles Arthur, Ugo Bardi, Wendy Chun, Jacques Ellul, Lewis Lapham, Donella Meadows, Maël Renouard, Matt Stoller, Simon Winchester – and Henry Kissinger. Yes, THAT Henry Kissinger.

But lately these questions have been fortified with the not entirely fanciful notion that machine-made intelligence, now freed like a genie from its bottle, and still writhing and squirming through its birth pangs (yes, these are still the very early years of machine learning and AI), will soon begin to grow phenomenally, will assume the role of some fiendish Rex Imperator, and will ultimately come to rule us all, for always, and with no evident existential benefit to the sorry and vulnerable weakness that is humankind.

A decade into the artificial intelligence boom, scientists in research and industry are making incredible breakthroughs. Increases in computing power, theoretical advances and a rolling wave of capital have revolutionised domains from biology and design to transport and language analysis. In this past year advancements in artificial intelligence are leading to small but significant scientific breakthroughs across disciplines. AI systems are helping us explore spaces that we couldn’t previously access. In biochemistry, for example, researchers are using AI to develop new neural networks that “hallucinate” proteins with new, stable structures. This breakthrough expands our capacity to understand the construction of a protein. In another discipline, researchers at Oxford are working with DeepMind to develop fundamentally new techniques in mathematics. With the help of DeepMind they have established a new theorem in the field known as knot theory, connecting algebraic and geometric invariants of knots.

Meanwhile, this year NASA scientists applied these developments to data from the Kepler spacecraft. Using a neural network called ExoMiner, scientists have validated 301 new exoplanets. We don’t need to wait for AI to create thinking machines and artificial minds to see dramatic changes in science. By enhancing our capacity, AI is transforming how we look at the world.

And to all of my readers: don’t be ashamed of baby steps or really basic beginnings to get into this stuff. Years ago I dove head first into natural language processing and network science, and then pursued a certificate of advanced studies at ETH Zürich because I immediately understood that I had to learn this stuff to really know how tech worked. I was fortunate because I began with a (very) patient team at the Microsoft Research Lab in Amsterdam – a Masterclass in basic machine learning, speech recognition, and natural language understanding. It provided a base for getting to sophisticated levels of ML and AI.

So are our worries about tech justified? Do periods of rapid technological advance necessarily bring with them accelerating fears of the future? It was not always so, especially during the headier days of scientific progress. The introduction of the railway train did not necessarily lead to the proximate fear that it might kill a man. When a locomotive lethally injured William Huskisson on the afternoon of September 15, 1830, there was such giddy excitement in the air that an accident seemed quite out of the question.

The tragedy was caused by “The Rocket”, a newfangled and terrifically noisy engine, breathing fire, gushing steam, and clanking metal, an iron horse in full gallop. But when it knocked a poor man down – a British government minister of only passing distinction but thereby elevating him to the indelible status of being the first-ever victim of a moving train – the event was occasioned precisely because the very idea of steam-powered locomotion was so new. The thing was so unexpected and preposterous, the engine’s sudden arrival on the newly built track linking Manchester to Liverpool so utterly unanticipated, that we can reasonably conclude that Huskisson, who had incautiously stepped down from his assigned carriage to see a friend in another, was killed by nothing less than technological stealth. Early science, imbued with optimism and hubris, seldom supposed that any ill might come about.

Which takes me to my most fun read this year:

This edition of Frankenstein was annotated specifically for scientists and engineers and is – like the creature himself – the first of its kind and just as monstrous in its composition and development for the purposes of educating students who are pursuing science, technology, engineering, and mathematics (STEM). Up until this edition, Frankenstein has been primarily edited and published for and read by humanities students, students equally in need of reading this cautionary tale about forbidden knowledge and playing God. As Mary Shelley herself wrote:

“Most fear what they do not understand. The psychological effects may of not been considered while creating, or even in adaptation to technology. With each adaptation there is error. Is this error the result of differences within a new evolution in which one must learn on their own and be used as a guide for a re-evolution?”

All so, so appropriate because the action in Frankenstein takes place in the 1790s, by which time James Watt (1736–1819) had radically improved the steam engine and in effect started the Industrial Revolution, which accelerated the development of science and technology as well as medicine and machines in the nineteenth century. The new steam engine powered paper mills, printed newspapers, and further developed commerce through steamboats and then trains. These same years were charged by the French Revolution, and anyone wishing to do a chronology of the action in Frankenstein will discover that Victor went off to the University of Ingolstadt in 1789, the year of the Fall of the Bastille, and he developed his creature in 1793, the year of the Reign of Terror in France. Terror (as well as error) was the child of both revolutions, and Mary’s novel records the terrorizing effects of the birth of the new revolutionary age, in the shadows of which we still live. (Hat tip to my researcher, Catarina Conti, for pulling out those historical nuggets).

Which seems so often to have been the way with the new, with mishaps being so frequently the inadvertent handmaiden of invention. Would-be astronauts burn up in their capsule while still on the ground; rubber rings stiffen in an unseasonable Florida cold and cause a spaceship to explode; an algorithm misses a line of code, and a million doses of vaccine go astray; where once a housefly might land amusingly on a bowl of soup, now a steel cog is discovered deep within a factory-made apple pie. Such perceived shortcomings of technology inevitably provide rich fodder for a public still curiously keen to scatter schadenfreude where it may, especially when anything new appears to etch a blip onto their comfortingly and familiarly flat horizon.

But while technology as a concept traces back to classical times, when it described the systematic treatment of knowledge in its broadest sense, its more contemporary usage relates almost wholly either to the mechanical, the electronic, or the atomic. And though the consequences of all three have been broad, ubiquitous, and profound, we have really had little enough time to consider them thoroughly. The sheer newness of the thing intrigued Antoine de Saint-Exupéry when he first considered the sleekly mammalian curves of a well-made airplane. We have just three centuries of experience with the mechanical new and even less time – under a century – fully to consider the accumulating benefits and disbenefits of its electronic and nuclear kinsmen.

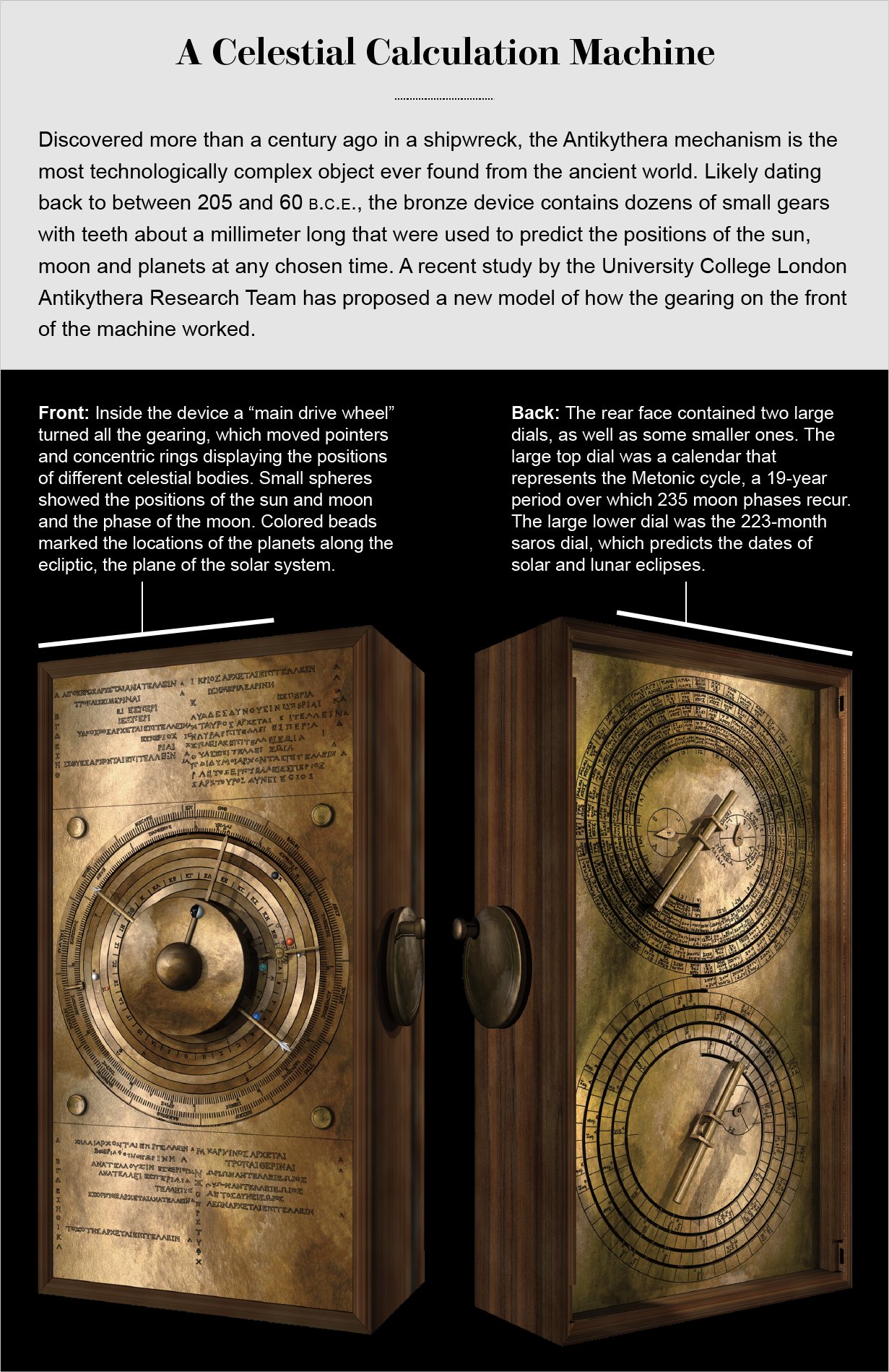

Except, though, for one curious outlier, which predates all and puzzles us still. The Antikythera mechanism was a device dredged up from the Aegean in the net of a sponge diver a century ago:

Main drive wheel of the Antikythera mechanism, as seen in a photograph of its present state (Courtesy of the National Archaeological Museum in Athens, Greece)

In 1900 diver Elias Stadiatis, clad in a copper and brass helmet and a heavy canvas suit, emerged from the sea shaking in fear and mumbling about a “heap of dead naked people.” He was among a group of Greek divers from the Eastern Mediterranean island of Symi who were searching for natural sponges. They had sheltered from a violent storm near the tiny island of Antikythera, between Crete and mainland Greece. When the storm subsided, they dived for sponges and chanced on a shipwreck full of Greek treasures – the most significant wreck from the ancient world to have been found up to that point. The “dead naked people” were marble sculptures scattered on the seafloor, along with many other artifacts.

Soon after, their discovery prompted the first major underwater archaeological dig in history. One object recovered from the site, a lump the size of a large dictionary, initially escaped notice amid more exciting finds. Months later, however, at the National Archaeological Museum in Athens, the lump broke apart, revealing bronze precision gearwheels the size of coins. According to historical knowledge at the time, gears like these should not have appeared in ancient Greece, or anywhere else in the world, until many centuries after the shipwreck. The find generated huge controversy.

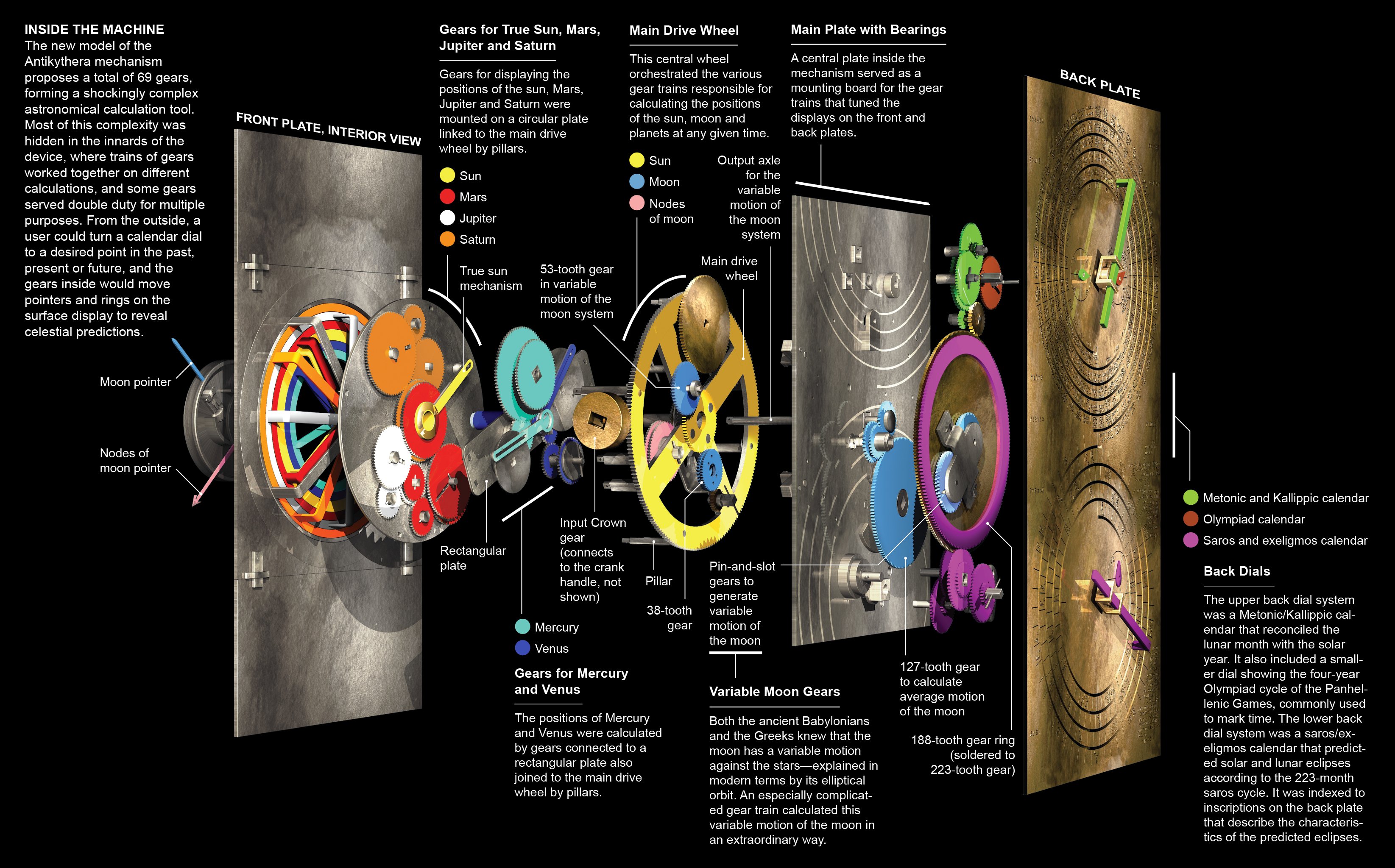

The lump is known as the Antikythera mechanism, an extraordinary object that has befuddled historians and scientists for more than 120 years. Over the decades the original mass split into 82 fragments, leaving a fiendishly difficult jigsaw puzzle for researchers to put back together. The device appears to be a geared astronomical calculation machine of immense complexity. Today we have a reasonable grasp of some of its workings, but there are still unsolved mysteries. We know it is at least as old as the shipwreck it was found in, which has been dated to between 60 and 70 B.C.E., but other evidence suggests it may have been made around 200 B.C.E.

NOTE: In March 2021 a group at University College London, known as the UCL Antikythera Research Team, published a new analysis of the machine. The paper posits a new explanation for the gearing on the front of the mechanism, where the evidence had previously been unresolved. We now have an even better appreciation for the sophistication of the device – an understanding that challenges many of our preconceptions about the technological capabilities of the ancient Greeks.

It is too much to cover in this post, so all I will do is reference the paper here, although I do want to make two points:

The team has been able to model what the machine probably looked like. Two graphics from the summary paper the team produced:

By a thorough study they determined much of the mechanism’s design relies on wisdom from earlier Middle Eastern scientists. Astronomy in particular went through a transformation during the first millennium B.C.E. in Babylon and Uruk (both in modern-day Iraq). The Babylonians recorded the daily positions of the astronomical bodies on clay tablets, which revealed that the sun, moon and planets moved in repeating cycles – a fact that was critical for making predictions. The moon, for instance, goes through 254 cycles against the backdrop of the stars every 19 years—an example of a so-called period relation. The Antikythera mechanism’s design uses several of the Babylonian period relations.
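For readers who like to see the arithmetic, here is a minimal sketch of what a period relation means in gearing terms. It takes the 254-cycles-in-19-years figure quoted above and shows that a train of gears can reproduce it exactly. The tooth counts in `EXAMPLE_TRAIN` are an assumption chosen purely so the product comes out right; I am not claiming they are the mechanism's actual gears.

```python
from fractions import Fraction

# Babylonian period relation quoted above:
# the moon completes 254 cycles against the stars every 19 years.
LUNAR_CYCLES = 254
YEARS = 19


def cycles_per_year(cycles: int, years: int) -> Fraction:
    """Exact number of cycles per year, kept as a fraction."""
    return Fraction(cycles, years)


def train_ratio(stages):
    """Output turns per input turn for a gear train.

    Each stage is a (driver_teeth, driven_teeth) pair; the overall
    ratio is the product of driver/driven across all stages.
    """
    ratio = Fraction(1)
    for driver_teeth, driven_teeth in stages:
        ratio *= Fraction(driver_teeth, driven_teeth)
    return ratio


# Hypothetical tooth counts (an assumption for illustration only).
EXAMPLE_TRAIN = [(64, 38), (48, 24), (127, 32)]

target = cycles_per_year(LUNAR_CYCLES, YEARS)
achieved = train_ratio(EXAMPLE_TRAIN)

print(f"target ratio:   {target} (about {float(target):.3f} turns per year)")
print(f"achieved ratio: {achieved}")
print("exact match" if achieved == target else "no match")
```

The point of using `Fraction` rather than floating point is that gears deal in whole teeth, so the ratio is either exactly right or it is not. Since 254 = 2 × 127 and 19 is prime, any such train needs tooth counts that carry factors of 127 and 19 somewhere.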

So whatever technical wonders might later be created in the Industrial Revolution, the Greeks clearly had an evident aptitude and appetite for mechanics, as even Plutarch reminds us. On the evidence of this one creation, this aptitude seems to have been as hardwired in them as was the fashioning of spearheads or wattle fences or stoneware pots to others in worlds and times beyond. While Pliny the Elder and Cicero mention similar technology – as does the Book of Tang, describing an eighth-century armillary sphere made in China – the Antikythera mechanism remains the one such device ever found. Little of an intricacy equal to it was to be made anywhere for the two thousand years following – a puzzle still unsolved. When mechanical invention did reemerge into the human mind, it did so in double-quick time and in a tide that has never since ceased its running – almost as if it had been pent up for two millennia or more.

I’m not going to drag you through the entire history of technology. It’s best to read Ugo Bardi, Wendy Chun, Jacques Ellul, Lewis Lapham, and Matt Stoller for detailed histories.

But what I will do is make a few general points about three major “leaps” that technology has made (two from my readings and the third from my musings), and close with a few comments on where we are today.

LEAP #1: “Mechanical arrangement”

Technology has arrived in “leaps” and then developed in multiple steps. The first “leap” was the notion that some mechanical arrangement might be persuaded to perform useful physical work rather than mere astronomical cogitation. This was born of one demonstrable and inalienable fact: water heated to its boiling point transmutes into a gaseous state, steam, which occupies a volume fully 1,700 times greater than its liquid origin. It took a raft of eighteenth-century British would-be engineers to apprehend that great use could somehow be made of this property.
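That 1,700 figure can be sanity-checked on the back of an envelope. The sketch below assumes steam behaves as an ideal gas at 100 °C and one standard atmosphere, and that a kilogram of liquid water occupies about one litre. Both are simplifications of mine (real steam deviates a little), so treat the result as a rough confirmation rather than an engineering value.

```python
# Rough check of the steam expansion factor using the ideal gas law, V = nRT / P.
# Assumptions (not from the essay): ideal-gas behaviour, 1 atm, 100 degrees C,
# and 1 kg of liquid water taking up roughly 1 litre.

GAS_CONSTANT = 8.314462618      # J / (mol K)
MOLAR_MASS_WATER = 18.015       # g / mol
BOILING_POINT_K = 373.15        # 100 degrees C in kelvin
ATMOSPHERE_PA = 101_325.0       # 1 standard atmosphere in pascals


def steam_volume_litres(mass_grams: float,
                        temperature_k: float = BOILING_POINT_K,
                        pressure_pa: float = ATMOSPHERE_PA) -> float:
    """Volume of water vapour in litres, treating it as an ideal gas."""
    moles = mass_grams / MOLAR_MASS_WATER
    volume_m3 = moles * GAS_CONSTANT * temperature_k / pressure_pa
    return volume_m3 * 1000.0   # 1 cubic metre = 1000 litres


liquid_litres = 1.0                         # assumed volume of 1 kg of liquid water
steam_litres = steam_volume_litres(1000.0)  # the same 1 kg, boiled
expansion_factor = steam_litres / liquid_litres

print(f"steam volume: {steam_litres:.0f} litres")
print(f"expansion factor: roughly {expansion_factor:.0f}x")
```

Under these assumptions the factor lands very close to the 1,700 quoted above, which is exactly the property those eighteenth-century engineers set out to exploit.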

It eventually fell to the Scotsman James Watt to perfect, construct, and try out the intricate arrangement of pistons and exactly machined cylinders and armatures that could efficiently undertake steam-powered tasks. Afterward only the cleverness of mechanical engineers (that term entering the English language in tandem with the invention) could determine exactly how the immense potential power of a steam engine might best be employed. The Industrial Revolution is thus wholly indebted to Watt: no more seminal a device than his engine and its countless derivatives would be born for almost two centuries.

And then after that first leap, a full spectrum of reactions and steps to technology’s perceived benefit and cost. The advantages with steam were obvious, somewhat anticipated, and often phenomenal: steam-powered factories were thrown up, manufacturing was inaugurated, Thomas Carlyle wrote somewhat sardonically of “the Age of Machinery that will doom us all”. New cities were born, populations were moved, demographics shifted, old cities changed their shapes and swelled prodigiously, entrepreneurs abounded, fortunes were accumulated, physical sciences became newly valued, new materials were born, fresh patterns of demand were created, new trade routes were established to meet them, empires altered their size and configurations, competition accelerated, advertising was inaugurated, a world was swiftly awash with products all made to greater or lesser extent from “powered machinery”.

And yet there was also talk of the apparent “disbenefits”, especially with the matter of the environment. One of my researchers scanned a UK newspaper database for the period of the early Industrial Revolution and found articles and opinion pieces about the sudden change in the color of the industrial sky and the change in quality of the industrial rivers and lakes; the immense gatherings of fuel (coal, mainly) that were needed to heat the engines’ water so as to alter its phase and make it perform its duties spewed carbon pollutants all around, ruining arcadia forever. Satanic mills were born. Dirt was everywhere. Gustave Doré painted it. Charles Dickens and Henry Mayhew wrote about it.

Other effects were less overt and more languid in their arrival. The UK Royal Navy factory built in the southern city of Portsmouth around the turn of the nineteenth century was the first in the world to harness steam power, for the making of a sailing vessel’s pulley blocks. Blocks had been used for centuries; Herodotus reminds us how blocks of much the same design could have been employed in the making of the pyramids of Khufu. But a fully furnished man-of-war might need fourteen hundred such devices – some small, enabling a sailor to raise and lower a signal flag from a yardarm, others massive confections of elm wood and iron and with sheaves of lignum vitae through which to pass the ropes, and which shipboard teams would use to haul up a five-ton anchor or a storm-drenched mainsail, with all the mechanical advantage that block-and-tackle arrangements have been known to offer since antiquity.

NOTE: at the turn of the century, with Napoleon causing headaches for the British Admiralty worldwide, ever greater armadas were needed, ever more pulley blocks required, the want vastly outpacing the legions of cottage-bound carpenters who had previously fashioned them by hand, as they had done for centuries. The engineer Marc Isambard Brunel (father of the better-known bridge- and shipbuilder Isambard Kingdom Brunel) then reckoned that steam power could help make blocks more quickly and more accurately and in such numbers as the navy might ever need, and he hired Henry Maudslay, another engineer, to create machinery for the Portsmouth Block Mills to perform the necessary task. He calculated that just sixteen exactly defined steps were required to turn the trunk of an elm tree into a batch of pulley blocks able to withstand years of pounding by monstrous seas and ice storms and the rough handling of men and ships at battle stations.

But gradually social orders were subtly changed, the Luddites were eventually disdained, the skeptics seen off, the path toward machine-made progress most decidedly taken. The fork in the road had been seen, recognized, appreciated, and chosen. But as writers like Wendy Chun and Marshall McLuhan and Lewis Mumford have written, if there was still any dithering, it took the manufacture of automobiles to consolidate the relationship between man and machinery. Eighty years on from Portsmouth, the Michigan-born Henry Ford became the engineer who most keenly persuaded society, especially in America, to ratify the overwhelmingly beneficial role of technology.

Ford believed that the motorcar might become a means of transport for the everyman, allowing the majesty of the country to be experienced by anyone with just a few hundred dollars to his name. He believed that inexpensive and interchangeable parts, and not too many of them, could be assembled on production lines to make millions of cars, for the benefit of all. His rival in the UK, Henry Royce, believed the opposite: he employed discrete corps of craftsmen who painstakingly assembled the finest machines in the world, legends of precision and grace. History has judged Ford’s vision to be the more enduring, the model for the making of volumes of almost anything, mobile or not. It was this sequential system of mechanized or manual operations for the manufacture of products that was to be the basis of “the American manufacturing system” – soon to be dominant around the globe.

LEAP #2: “Breaking the limitations of material”

But there is another great “leap” that brings us into our times. The system of technology-based manufacturing turned out to have limits, which were imposed not as a consequence of any proximate disadvantages, social or otherwise, but by another unexpected factor: the physical limitations of materials. Engineering the alloys of steel and titanium and nickel and cobalt of which so much contemporary machinery is made, under commercial pressures that demanded ever greater efficiency – or profit, or speed, or power – has in recent times collided with the harsh realities of metallurgy.

Through experimentation it was found that technologies centered around solid-state printed circuitry suffer no metal fatigue, no friction losses, no wear. The idea of such circuitry was first bruited in 1925 by a Leipzig engineer named Julius Lilienfeld. But it took a generation to marinate in the minds of physicists around the world until shortly before Christmas 1947, when a trio of researchers at Bell Labs in New Jersey fashioned a working prototype of a phenomenon they created and called “the transistor effect”. The invention of the first working transistor marked the moment when technology’s primal driving power switched from Newtonian mechanics to Einsteinian theoretics – unarguably the most profound development in the technological universe and the second of the three steps, or leaps, that have come to define this aspect of the modern human era.

Why? The sublime simplicity of the transistor – its ability to switch electricity on or off, to be there or not, to be counted as 1 or 0, and thus to reside at, and essentially to be, the heart of all contemporary computer coding – renders it absolutely, totally, entirely essential to contemporary existence, in previously unimaginable numbers. The devices that populate the processors at the heart of almost all electronics, from cell phones and navigation devices and space shuttles to Alexas and Kindles and wristwatches and weather-forecasting supercomputers and, most significantly of all, to the functioning of the internet, are being manufactured at rates and in numbers that quite beggar belief: thirty sextillion being the latest accepted figure, a number greater than the aggregated total of all the stars in the galaxy or the accretion of all the cells in a human body.

There are 15 billion transistors in my iPhone 13, crammed into a morsel of silicon measuring just ninety square millimeters. And yet in 1947, a single transistor was fully the size of an infant’s hand, with its inventors having no clear idea of the role its offspring would play in human society. The sheer proliferation of the device points to the physical limitations of this kind of technology, and these have nothing to do with the integrity of its materials, as was true back in the purely mechanical world. Instead the limitations have to do with the ever-diminishing size of the transistors themselves, with so many of them now forced into such tiny pieces of semiconducting real estate.
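To get a feel for how tight that real estate is, here is a quick sketch using only the two figures above: 15 billion transistors on ninety square millimeters. It assumes, purely for illustration, that the transistors are spread evenly across the die as identical square tiles. Real chips are not laid out that way, so the numbers are an average footprint, not a statement about actual feature sizes.

```python
import math

# Figures taken from the paragraph above.
TRANSISTOR_COUNT = 15e9     # transistors on the chip
DIE_AREA_MM2 = 90.0         # die area in square millimeters

# 1 mm = 1,000,000 nm, so 1 square mm = 1e12 square nm.
NM2_PER_MM2 = 1e12


def density_per_mm2(count: float, area_mm2: float) -> float:
    """Average number of transistors per square millimeter."""
    return count / area_mm2


def average_tile_side_nm(count: float, area_mm2: float) -> float:
    """Side length (nm) of the square each transistor would get
    if the die were divided evenly (a simplifying assumption)."""
    area_per_transistor_nm2 = area_mm2 * NM2_PER_MM2 / count
    return math.sqrt(area_per_transistor_nm2)


density = density_per_mm2(TRANSISTOR_COUNT, DIE_AREA_MM2)
tile_side = average_tile_side_nm(TRANSISTOR_COUNT, DIE_AREA_MM2)

print(f"average density: {density / 1e6:.0f} million transistors per square mm")
print(f"average footprint: a square about {tile_side:.0f} nm on a side")
```

On these figures each transistor gets, on average, a patch of silicon well under a tenth of a micron across, which is why the limits now come from size rather than from the strength of the material.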

They are now made so small – made by machines almost all created entirely in the Netherlands that are so gigantic that several wide-bodied jets are needed to carry each one to the chip-fabrication plant – that they operate down on the atomic and near-subatomic levels, their molecules almost touching and in imminent danger of interfering with one another and causing atomic short circuits.

NOTE: mention of atomic-level interference brings to mind another great leap that has been made by technology: the practical fissioning of an atom and the release of the energy that Albert Einstein had long before calculated resided within it. Too much to include in this post. And the irony, of course. The first detonation of an uncontrolled fission device in the summer of 1945 led to the utter destruction of two Japanese cities, Hiroshima and Nagasaki. That the world’s sole nuclear attack was perpetrated on Japan, of all places, serves to expose one irony about technology’s current hold on humanity. Japan is a country much given to the early adoption and subsequent enthusiastic use of technology. Canon, Sony, Toshiba, Toyota are all companies that have flourished mightily since World War II making products, from cameras to cars, that are revered for their precision and exquisite functionality. Yet at the same time, as Simon Winchester notes, “the country has held in the highest esteem its own centuries-old homegrown traditions of craftsmanship – carpenters and knife grinders, temple builders and lacquerware makers, ceramicists and those who work in bamboo are held in equally high regard, with the finest in their field accorded government-backed status as living national treasures, with a pension and a standing that encourages others to follow in their footsteps”.

And so our computational muscle has now reached a point where we can create machines – devices variously mechanical, electronic, or atomic – that can think for themselves. And often outthink us, to boot. And as I wrote in the first essay in this series, write coherent articles. Which is why, though our trains may run safely and our clocks keep good time and our houses may remain at equable temperatures, and in certain places our ability to perform archaic crafts remains intact, still there is an ominous note sounding not too far offstage. A new kind of algorithmic intelligence is teaching itself the miracle of Immaculate Conception. And with it comes an unsettling prospect of which one can only say: The future is a foreign country. They will do things differently there.

And before I continue to “Leap #3” and final thoughts, a quick note. Not a leap, really, but let’s call it a mutation. We are moving into a photonic world. Photonics may become more important than electronics. This is something Kevin Kelly (the guy who launched Wired magazine and wrote the seminal book What Technology Wants) has been writing about.

The digital realm runs on electrons. Electrons underpin the entire realm of computing and today’s communication. Almost all bits are electronic bits. We use packets of electrons to make binary codes of off/on. We make logic circuits out of packets of electrons flowing around circuits. Electrons are close to our idea of particles flowing like bits of matter.

Photons on the other hand are waves, or wavicles. Light and the rest of the electromagnetic spectrum, like radio, travel as wave-particles. They are not really discrete particles. They are continuous, analog waves. They are almost the opposite of binary. To one approximation, the photonic world is closer to analog than is the electronic world.

We are building interfaces for moving between electronics and photonics. That’s how you get Instagram pics on your phone, and Netflix over fiber optic cables. Electronic binary packets rush into screens which emit photons. As we enhance our virtual worlds we will do ever more with photons. Augmented reality, mixed reality … or what Kelly calls “Mirrorworlds” … are spatially rendered spaces with full volumetric depth and minute visual detail that will mimic the photons of the real world.

To render full volumetric spatial scenes with the resolution needed, and to light them convincingly, in a shared world with many participants, will require astronomical amounts of computation. Since the results we want are in photons, it may turn out that we do much of the computation in photonics, rather than going from photons to electrons and then back to photons. The Mirrorworld and even the metaverse will be a primarily photonic realm, with zillions of photons zipping around, being sensed, and then re-created. It will be the Photonic Age.

LEAP #3: “Technology eats society”

When moving across a wide landscape, sometimes you cannot wrestle a lot of ideas to the ground so they just float out there for a time. So to wrap this up, some incomplete musings that are in search of a post. I was struggling with this conclusion.

Historically, computers, human and mechanical, have been central to the management and creation of populations, political economy, and apparatuses of security. Without them, there could be no statistical analysis of populations: from the processing of censuses to bioinformatics, from surveys that drive consumer desire to social security databases. Without them, there would be no government, no corporations, no schools, no global marketplace, or, at the very least, they would be difficult to operate.

Tellingly, the beginnings of IBM as a corporation – it began as “Herman Hollerith’s Tabulating Machine Company” – dovetail with the mechanical analysis of the U.S. census. Before the adoption of these machines in 1890, the U.S. government had been struggling to analyze the data produced by the decennial census (the 1880 census taking seven years to process). Crucially, Hollerith’s punch-card-based mechanical analysis was inspired by the “punch photograph” used by train conductors to verify passengers. Similarly, the Jacquard Loom, a machine central to the Industrial Revolution, inspired (via Charles Babbage’s “engines”) the cards used by the Mark 1, an early electromechanical computer. Scientific projects linked to governmentality also drove the development of data analysis: eugenics projects that demanded vast statistical analyses, nuclear weapons that depended on solving difficult partial differential equations.

Importantly, though, computers in our current “modern” period (post-1960s) coincide with the emergence of neoliberalism. As well as control of “masses,” computers have been central to processes of individualization or personalization. “Neoliberalism”, according to David Harvey, is “a theory of political economic practices that proposes that human well-being can best be advanced by liberating individual entrepreneurial freedoms and skills within an institutional framework characterized by strong private property rights, free markets, free trade”. Although neoliberals, such as the Chicago School economist Milton Friedman, claim merely to be “resuscitating” classical liberal economic theory, Michel Foucault called it for what it really was. His books argue (successfully) that neoliberalism differs from liberalism in its stance that the market should be “the principle, form, and model for a state”.

And that is exactly what developed. Individual economic and political freedom were tied together into what Friedman and his cohort called competitive capitalism. Critics argued that neoliberalism thrived by creating a general “culture of consent” – even though it has harmed most people economically by fostering incredible income disparities. In particular, it has incorporated progressive 1960s discontent with government and, remarkably, dissociated this discontent from its critique of capitalism and corporations. And so in the West, in a neoliberal society, the market has become an ethics: it spread everywhere so that all human interactions, from motherhood to education, are discussed as economic “transactions” that can be assessed strictly in individual cost-benefit terms.

And (almost) everybody bought into it. Especially the U.S. government. So for decades a trend: whole sectors of the U.S. economy – three-quarters of all U.S. industries, by several estimates – grew more concentrated in a small number of companies. Yes. A trend underway for decades. As I noted in the second essay in this series, the federal government had relaxed its opposition to corporate consolidation, causing all manner of regional, social and political imbalance.

And Big Tech? Its size permitted it to build interlinked markets constituted by contemporary information processing practices. Information services now sit within complex media ecologies, and networked platforms and infrastructures create complex interdependencies and path dependencies. With respect to harms, information technologies have given scientists and policymakers tools for conceptualizing and modeling systemic threats. At the same time, however, the displacement of preventive regulation into the realm of models and predictions complicates, and unavoidably politicizes, the task of addressing those threats.

The power dynamic has changed. Because data has become the crucial part of our infrastructure, enabling all commercial and social interactions. Rather than just tell us about the world, data acts in the world. Because data is both representation and infrastructure, sign and system. As the brilliant media theorist Wendy Chun puts it, data “puts in place the world it discovers”.

Restrict it? Protect it? We live in a massively intermediated, platform-based environment, with endless network effects, commercial layers, and inference data points. The regulatory state must be examined through the lens of the reconstruction of the political economy: the ongoing shift from an industrial mode of development to an informational one. Regulatory institutions will continue to struggle in an era of informational capitalism that they simply cannot understand. The ship has sailed. Technology has eaten society.

There is a perspective in which 2021 was an absolute drag: COVID dragged on, vaccines became politicized, new variants emerged, and while tech continued to provide the infrastructure that kept the economy moving, it also provided the infrastructure for all of those things that made this year feel so difficult. To repeat: Technology has eaten society.

At the same time, 2021 also provided a glimpse of a future beyond our current smartphone and social media-dominated paradigm: there was the Metaverse, and crypto. Sure, the old paradigm is increasingly dominated by regulation and politics, a topic that is so soul-sucking that it temporarily made me want to post less. And from my rock in the Mediterranean I look back at my old homeland, America, which is now in fascism’s legal phase. The attacks on the courts, education, the right to vote and women’s rights are further steps on the path to toppling democracy. But that’s fodder for a post in 2022.

But that’s also why I suspect what we lovingly call “Internet 2.0”, despite its economic logic predicated on the technology undergirding the Internet, is not the end-state. It’s why Francis Fukuyama had it right in The End of History and the Last Man, a book greatly misinterpreted. He wrote (my emphasis added):

What I suggested had come to an end was not the occurrence of events, even large and grave events, but History: that is, history understood as a single, coherent, evolutionary process – it is not – when taking into account the experience of all peoples in all times. Both Hegel and Marx believed that the evolution of human societies was not open-ended, but would end when mankind had achieved a form of society that satisfied its deepest and most fundamental longings. Both thinkers thus posited an “end of history”: for Hegel this was the liberal state, while for Marx it was a communist society. This did not mean that the natural cycle of birth, life, and death would end, that important events would no longer happen, or that newspapers reporting them would cease to be published. It meant, rather, that there would be no further progress in the development of underlying principles and institutions.

It turns out that when it comes to Information Technology, very little is settled; after decades of developing the Internet and realizing its economic potential, the entire world is waking up to the reality that the Internet is not simply a new medium, but a new maker of reality.

Jacques Ellul, the French philosopher and sociologist who had a lifelong concern with what he called “technique” and published three seminal works on the role of technology in the contemporary world, had it right. In 1954 he published the first of those works where he detailed his thoughts:

Technology will increase the power and concentration of vast structures, both physical (the factory, the city) and organizational (the State, corporations, finance) that will eventually constrain us to live in a world that is no longer fit for mankind. We will be unable to control these structures, we will be deprived of real freedom – yet given a contrived element of freedom – but the result will be that technology will depersonalize the nature of our modern daily life.

I’ll write more about Ellul in 2022. For now, wishing you all a healthy New Year. I hope you enjoyed this end-of-the-year series.