Artificial intelligence researchers have made impressive use of Bayesian inference as a means of approximating some human capabilities, which raises the question: do human judgements and decisions adhere to similar rules? The arguments, both for and against.

15 October 2020 (Chania, Crete) – As I have noted in previous posts, science has spawned a proliferation of technology that has dramatically infiltrated all aspects of modern life. In many ways the world is becoming so dynamic and complex that technological capabilities are overwhelming human capabilities to optimally interact with and leverage those technologies. I think that, as we are hurled headlong into this frenetic pace (made worse by all this development in artificial intelligence), we suffer from illusions of understanding, a false sense of comprehension, failing to see the looming chasm between what our brain knows and what our mind is capable of accessing.

Ah, the brain. And the mind. The mind is an evolved system. As such, it is unclear whether it emerges from a unified principle (a physics-like theory of cognition), or is the result of a complicated assemblage of ad-hoc processes that just happen to work. It’s probably a bit of both. It is going to take a very, very long time to unbox that. It’s why, three years ago, the Google Brain Team turned to meta-learning: teaching machines to learn to solve new problems without intervention from human ML experts.

As I learned in my neuroscience program, the construction of personhood takes place progressively in early childhood, perhaps by the joining up of previously disparate fragments. Some psychological disorders are interpreted as “split personality”, failure of the fragments to unite. It’s not unreasonable speculation that the progressive growth of consciousness in the infant mirrors a similar progression over the longer timescale of evolution. Does a fish, say, have a rudimentary feeling of conscious personhood, on something like the same level as a human baby?

It’s why the artificial intelligence (AI) folks think intelligence is necessarily a part of a broader system. A brain is just a piece of biological tissue, there is nothing intrinsically intelligent about it. Beyond your brain, your body and senses – your sensorimotor affordances – are a fundamental part of your mind. Your environment is a fundamental part of your mind. What would happen if we were to put a freshly-created human brain in the body of an octopus, and let it live at the bottom of the ocean? Would it even learn to use its eight-legged body? Would it survive past a few days? We cannot perform this experiment, but we do know that cognitive development in humans and animals is driven by hardcoded, innate dynamics.

Human culture, too, is a fundamental part of your mind. Your environment and your culture are, after all, where all of your thoughts come from. You cannot dissociate intelligence from the context in which it expresses itself.

Over the past decade, science has made some notable progress in using technology to defy the limits of the human form, from mind-controlled prosthetic limbs to a growing body of research indicating we may one day be able to slow the process of aging. Our bodies are the next big candidate for technological optimization, so it’s no wonder that Big Tech has focused so much interest on them. A lot of interest.

But when I look at the most “computational” part of the body, the brain, I do not see a computer. Our brains do not “store” memories as computers do, simply calling up a desired piece of information from a memory bank. If they did, you’d be able to effortlessly remember what you had for lunch yesterday, or exactly the way your high school boyfriend smiled. Nor do our brains process information like a computer. Our gray matter doesn’t actually have wires that you can simply plug-and-play to overwrite depression à la “Eternal Sunshine of the Spotless Mind”. The body, too, is more than just a well-oiled piece of machinery. We have yet to identify a single biological mechanism for aging or fitness that any pill or diet can simply “hack.”

But research into these questions continues (it seems scientists have determined the body and brain are incredibly complex 🙂) and has produced some fascinating results.

Like … are our brains Bayesian? That was the topic of a recent webinar/Zoom presentation by the Royal Statistical Society (RSS).

Quite a few years ago I was invited to join the RSS, based in London. It is where I had my first instruction on graphical interpretations of data: the good, the bad, the ugly. It is also the place where I have learned the most about machine learning. These sessions and events are run by “data wranglers”, people who know every facet of how to digest, to apply, and to test data relying on statistics. It is also the organization that launched a guide for the legal profession on how to use basic statistics and probability, which I wrote about. The RSS was the venue where I first learned about technology assisted review and predictive coding as applied in the e-discovery world, way before it hit the mainstream legal tech conference circuit.

The RSS is a wonderful organization, set up as a charity that promotes statistics, data and evidence for the public good. It is a learned society and professional body, working with and for members to promote the role that statistics and data analysis play in society, share best practice, and support the profession.

AI researchers have made impressive use of Bayesian inference as a means of approximating some human capabilities, which raises the question: do human judgements and decisions adhere to similar rules? One of the RSS presenters noted “for the clearest evidence of Bayesian reasoning in the brain, we must look past the high‐level cognitive processes”.

First, some basics:

The human brain is made up of 90 billion neurons connected by more than 100 trillion synapses. It has been described as the most complicated thing in the world, but brain scientists say that is wrong: they think it is the most complicated thing in the known universe. Little wonder, then, that scientists have such trouble working out how our brain actually works. Not in a mechanical sense: we know, roughly speaking, how different areas of the brain control different aspects of our bodies and our emotions, and how these distinct regions interact. The questions that are more difficult to answer relate to the complex decision‐making processes each of us experiences: how do we form beliefs, assess evidence, make judgements, and decide on a course of action?

Figuring that out would be a great achievement, in and of itself. But this has practical applications, too, not least for those artificial intelligence researchers who are looking to transpose the subtlety and adaptability of human thought from biological “wetware” to computing hardware.

In looking to replicate aspects of human cognition, AI researchers have made use of algorithms that learn from data through a process known as Bayesian inference. Based on Bayes’ famous theorem (see below), Bayesian inference is a method of updating beliefs in the light of new evidence, with the strength of those beliefs captured using probabilities. As such, it differs from frequentist inference, which focuses on how frequently we might expect to observe a given set of events under specific conditions.

* * * * * * *

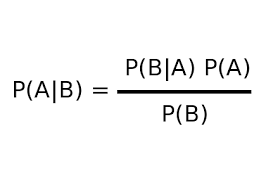

Bayes’ theorem

Bayes’ theorem provides us with the means to calculate the probability of certain events occurring conditional on other events that may occur – expressed as a probability between 0 and 1. Consider the following formula:

$$P(B \mid E) = \frac{P(E \mid B)\,P(B)}{P(E)}$$

This tells us the probability of event B occurring given that event E has happened. This is known as a conditional probability, and it is derived by multiplying the conditional probability of E given B, by the probability of event B, divided by the probability of event E.

The same basic idea can be applied to beliefs. In this context, P(B|E) is interpreted as the strength of belief B given evidence E, and P(B) is our prior level of belief before we came across evidence E.

Using Bayes’ theorem, we can turn our “prior belief” into a “posterior belief”. When new evidence arises, we can repeat the calculation, with our last posterior belief becoming our next prior. The more evidence we assess, the sharper our judgements should get.
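To make the prior-to-posterior loop concrete, here is a minimal sketch in Python. The scenario is hypothetical and not from the RSS session: two competing beliefs about a coin (it is fair, or it lands heads 80% of the time), a 50/50 starting prior, and a made-up run of tosses. Each pass applies Bayes’ theorem, and the posterior from one toss becomes the prior for the next.

```python
from fractions import Fraction

def bayes_update(prior, likelihoods):
    """Apply Bayes' theorem to a set of competing beliefs.

    prior: dict mapping each belief B to P(B)
    likelihoods: dict mapping each belief B to P(E | B) for new evidence E
    Returns a dict with the posterior P(B | E) for every belief.
    """
    # P(E): total probability of the evidence, summed over all beliefs.
    p_evidence = sum(prior[b] * likelihoods[b] for b in prior)
    return {b: prior[b] * likelihoods[b] / p_evidence for b in prior}

# Hypothetical beliefs, each with its own probability of heads.
p_heads = {"fair": Fraction(1, 2), "biased": Fraction(4, 5)}

belief = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}  # starting prior
for toss in "HHTHHHHH":  # made-up evidence, assessed one toss at a time
    likelihoods = {b: p if toss == "H" else 1 - p for b, p in p_heads.items()}
    belief = bayes_update(belief, likelihoods)  # posterior becomes next prior
    print(toss, round(float(belief["biased"]), 3))
```

Exact fractions are used so the probabilities always sum to exactly 1; with more tosses, the belief in the biased coin sharpens, as the paragraph above describes.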

* * * * * * *

Bayesian inference is seen as an optimal way of assessing evidence and judging probability in real‐world situations; “optimal” in the sense that it is ideally rational in the way it integrates different sources of information to arrive at an output. In the field of AI, Bayesian inference has been found to be effective at helping machines approximate some human abilities, such as image recognition. But are there grounds for believing that this is how human thought processes work more generally? Do our beliefs, judgments, and decisions follow the rules of Bayesian inference?

Beneath the surface

For the clearest evidence of Bayesian reasoning in the brain, we must look past the high‐level cognitive processes that govern how we think and assess evidence, and consider the unconscious processes that control perception and movement. Professor Daniel Wolpert of the University of Cambridge’s neuroscience research centre believes we have our Bayesian brains to thank for allowing us to move our bodies gracefully and efficiently – by making reliable, quick‐fire predictions about the result of every movement we make.

Imagine taking a shot at a basketball hoop. In the context of a Bayesian formula, the “belief” would be what our brains already know about the nature of the world (how gravity works, how balls behave, every shot we have ever taken), while the “evidence” is our sensory input about what is going on right now (whether there is any breeze, the distance to the hoop, how tired we are, the defensive abilities of the opposing players). Wolpert, who has conducted a number of studies on how people control their movements, believes that as we go through life our brains gather statistics for different movement tasks, and combine these in a Bayesian fashion with sensory data, together with estimates of the reliability of that data. “We really are Bayesian inference machines,” he says.
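One common way to formalise “combining prior statistics with sensory data, weighted by reliability” is sketched below. It is an assumption for illustration, not a model taken from Wolpert’s studies: if both the prior (what past shots have taught us) and the current sensory estimate are Gaussian, Bayes’ theorem gives a posterior whose mean is a precision-weighted average, where precision is 1/variance. The distances and variances are invented.

```python
def combine_gaussian(prior_mean, prior_var, sense_mean, sense_var):
    """Posterior of a Gaussian prior combined with a Gaussian likelihood.

    Each source is weighted by its precision (1 / variance), so the more
    reliable source pulls the estimate harder. The posterior variance is
    smaller than either input variance.
    """
    prior_precision = 1.0 / prior_var
    sense_precision = 1.0 / sense_var
    post_precision = prior_precision + sense_precision
    post_mean = (prior_precision * prior_mean
                 + sense_precision * sense_mean) / post_precision
    return post_mean, 1.0 / post_precision

# Hypothetical: experience says the hoop is about 4.6 m away (variance 0.04),
# while a quick glance in poor light suggests 5.0 m (variance 0.16).
mean, var = combine_gaussian(4.6, 0.04, 5.0, 0.16)
print(f"estimate {mean:.2f} m, variance {var:.3f}")
```

Because the prior here is four times more reliable than the glance, the estimate lands close to 4.6 m, and the combined estimate is more certain than either source alone.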

To demonstrate this, Wolpert and his team invited research subjects to their lab to undergo tests based on a cutting‐edge neuroscientific technique: tickling. As every five‐year‐old knows, other people can easily have you in fits of laughter by tickling you, but you cannot tickle yourself. Until now. Wolpert’s team used a robot arm to mediate and delay people’s self‐tickling movements by fractions of a second – which turned out to be enough to bring the tickly feeling back.

The problem with trying to tickle yourself is that your body is so good at predicting the results of your movement and reacting that it cancels out the effect. But by delaying people’s movements, Wolpert was able to mess with the brain’s predictions just enough to bring back the element of surprise. This revealed the brain’s highly attuned estimates of what will happen when you move your finger in a certain way, which are very similar to what a Bayesian calculation would produce from the same data.

A study by Erno Téglás et al., published in Science, looked at one‐year‐old babies and how they learn to predict the movement of objects – measured by how long they looked at the objects as the objects moved around in different patterns. The study found that the babies developed “expectations consistent with a Bayesian observer model”, mirroring “rational probabilistic expectations”. In other words, the babies learned to expect certain patterns of movement, and their expectations were consistent with a Bayesian analysis of the probability of different patterns occurring.

Other researchers have found indications of Bayesianism in higher‐level cognition. A 2006 study by Tom Griffiths of the University of California, Berkeley, and Josh Tenenbaum of MIT asked people to make predictions of how long people would live, how much money films would make, and how long politicians would last in office. The only data they were given to work with was the running total so far: current age, money made so far, and years served in office to date. People’s predictions, the researchers found, were very close to those derived from Bayesian calculations.

This suggests the brain not only has mastered Bayes’ theorem, but also has finely‐tuned prior beliefs about these real‐life phenomena, based on an understanding of the different distribution patterns of human life‐spans, box office takings, and political tenure. This is one of a number of studies that provide evidence of probabilistic models underlying the way we learn and think.
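The kind of calculation the Griffiths and Tenenbaum comparison relies on can be sketched roughly as follows, under stated assumptions. We assume the phenomenon is encountered at a uniformly random point in its span, so the likelihood of seeing elapsed time t given a total duration T is 1/T when t ≤ T, and we take the posterior median as the prediction. The Gaussian life-span prior (mean 75, standard deviation 16 years) is a hypothetical stand-in, not the distribution the study used, and the function names are illustrative.

```python
import math

def posterior_median_total(t_elapsed, prior):
    """Predict a total duration from the time elapsed so far.

    prior: dict mapping candidate totals T to prior probability P(T).
    Assumed likelihood: P(t_elapsed | T) = 1 / T if t_elapsed <= T, else 0.
    Returns the median of the posterior P(T | t_elapsed).
    """
    unnormalised = {T: p / T for T, p in prior.items() if T >= t_elapsed}
    z = sum(unnormalised.values())
    if z == 0:
        raise ValueError("no prior mass at or beyond the elapsed time")
    cumulative = 0.0
    for T in sorted(unnormalised):
        cumulative += unnormalised[T] / z
        if cumulative >= 0.5:
            return T
    return max(unnormalised)  # guard against floating-point shortfall

def gaussian_prior(mean, sd, lo, hi):
    """Hypothetical prior: a discretised, normalised Gaussian on lo..hi."""
    weights = {x: math.exp(-0.5 * ((x - mean) / sd) ** 2)
               for x in range(lo, hi + 1)}
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}

lifespan_prior = gaussian_prior(mean=75, sd=16, lo=1, hi=120)
for age in (20, 50, 90):
    print(age, "->", posterior_median_total(age, lifespan_prior))
```

With this prior, a young age yields a prediction near the typical life span, while an old age yields a prediction only somewhat beyond the current age, which is the qualitative pattern a well-tuned prior produces. Swapping in a heavy-tailed prior (as might suit box office takings) would change the shape of the predictions.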

However, the route the brain takes to reach such apparently Bayesian conclusions is not always obvious. The authors of the study involving the babies said it was “unclear how exactly the workings of our model correspond to the mechanisms of infant cognition”, but that the close fit between the model and the observed data about the babies’ predictions suggests “at least a qualitative similarity between the two”.

To achieve such Bayesian reasoning, the brain would have to develop some kind of algorithm, manifested in patterns of neural connections, to represent or approximate Bayes’ theorem. Eric‐Jan Wagenmakers, an experimental psychologist at the University of Amsterdam, says the nature of the brain makes it difficult to imagine something as neat and elegant as Bayes’ theorem being reflected in a form we would recognise. We are dealing, he says, with “an unsupervised network of very stupid individual units that is forced by feedback from its environment to mimic a system that is Bayesian”.

Probability puzzles

Before we accept the Bayesian brain hypothesis wholeheartedly, there are a number of strong counter‐arguments that must be considered. For starters, it is fairly easy to come up with probability puzzles that should yield to Bayesian methods, but that regularly leave many people flummoxed. For instance, many people will tell you that if you toss a coin five times, getting all heads is less likely than getting, say, tails–tails–heads–tails–heads. It is not: as the coin tosses are independent, every specific sequence of five fair tosses has the same probability, (1/2)^5 = 1/32, so there is no reason to expect one sequence to be more likely than another.
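A brute-force check of the coin-toss claim, assuming five tosses of a fair coin that are independent of one another: enumerating all 32 possible sequences shows that any one specific sequence, whether a run of five heads or a mixed pattern, has the same probability.

```python
from fractions import Fraction
from itertools import product

p_heads = Fraction(1, 2)  # assumption: a fair coin

def sequence_probability(seq):
    """Probability of one exact sequence of independent tosses ('H'/'T')."""
    prob = Fraction(1)
    for toss in seq:
        prob *= p_heads if toss == "H" else 1 - p_heads
    return prob

all_sequences = ["".join(s) for s in product("HT", repeat=5)]
probs = {seq: sequence_probability(seq) for seq in all_sequences}

print(probs["HHHHH"], probs["TTHTH"])  # 1/32 1/32
print(len(set(probs.values())))        # 1: every sequence is equally likely
```

The intuition that mixed sequences are “more likely” comes from lumping them together: there are many mixed-looking sequences but only one all-heads sequence.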

There is also the well‐known Monty Hall problem, which can fool even trained minds. Participants are asked to pick one of three doors – A, B, or C – behind one of which lies a prize. The host of the game then opens one of the non‐winning doors (say, C), after which the contestant is given the choice of whether to stick with their original door (say, A) or switch to the other unopened door (say, B). Long story short: most people think switching will make no difference, when in fact it improves your chances of winning. Mathematician Keith Devlin has shown how you can use Bayes’ formula to work this out, so that the prior probability that the prize is behind door B, 1 in 3, becomes 2 in 3 when door C is opened to reveal no prize. The details are online (here) and they are fairly straightforward. Surely a Bayesian brain would be perfectly placed to cope with calculations such as these?
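The Bayesian calculation for Monty Hall can be reproduced with exact fractions. This sketch assumes the standard rules: the prize is placed uniformly at random, the contestant picks door A, and the host, who knows where the prize is, always opens a non-winning door other than the contestant’s, choosing at random when two such doors are available. It computes the posterior after the host opens C, then cross-checks with a simple simulation.

```python
import random
from fractions import Fraction

doors = ["A", "B", "C"]
prior = {d: Fraction(1, 3) for d in doors}  # prize equally likely anywhere

def p_host_opens_c(prize):
    """P(host opens C | prize location), given the contestant picked A."""
    if prize == "A":
        return Fraction(1, 2)  # host may open B or C, chooses at random
    if prize == "B":
        return Fraction(1)     # host must avoid A (picked) and B (prize)
    return Fraction(0)         # host never reveals the prize

p_evidence = sum(prior[d] * p_host_opens_c(d) for d in doors)
posterior = {d: prior[d] * p_host_opens_c(d) / p_evidence for d in doors}
print(posterior["A"], posterior["B"])  # 1/3 2/3

def simulate(trials=100_000, seed=1):
    """Estimate the win rate of always switching, by simulation."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        prize = rng.choice(doors)
        pick = "A"
        opened = rng.choice([d for d in doors if d not in (pick, prize)])
        switched = next(d for d in doors if d not in (pick, opened))
        wins += switched == prize
    return wins / trials

print(f"{simulate():.3f}")  # an estimate near 2/3
```

The key step is the likelihood: the host’s choice carries information because he is forced to open C whenever the prize is behind B, but only does so half the time when it is behind A.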

Even Sir David Spiegelhalter, professor of the public understanding of risk at the University of Cambridge, admits to mistrusting his intuition when it comes to probability. “The only gut feeling I have about probability is not to trust my gut feelings, and so whenever someone throws a problem at me I need to excuse myself, sit quietly muttering to myself for a while, and finally return with what may or may not be the right answer,” Spiegelhalter writes in guidance for maths students (here).

Psychologists have uncovered plenty of examples where our brains fail to weigh up probabilities correctly. The work of Nobel prize winner and bestselling author Daniel Kahneman, among others, has thrown light on the strange quirks of how we think and act – yielding countless examples of biases and mental shortcuts that produce questionable decisions. For instance, we are more likely to notice and believe information if it confirms our existing beliefs. We consistently assign too much weight to the first piece of evidence we encounter on a subject. We overestimate the likelihood of events that are easy to remember – which means the more unusual something is, the more likely our brains think it is to happen.

These weird mental habits are a world away from what you would expect of a Bayesian brain. At the very least, they suggest that our “prior beliefs” are hopelessly skewed. There’s considerable evidence that most people are dismally non‐Bayesian when performing reasoning. For example, people typically ignore base‐rate effects and overlook the need to know both false positive and false negative rates when assessing predictive or diagnostic tests.

In the case of testing for diseases, Bayes’ theorem reveals how even a test that is said to be 99% accurate might be wrong half of the time when telling people they have a rare condition – because its prevalence is so low that even this supposedly high accuracy figure still leads to just as many false positives as true positives. It is a counter‐intuitive result that would elude most people not equipped with a calculator and a working knowledge of Bayes’ theorem.

Diagnostic test accuracy explained. How is it that a diagnostic test that claims to be 99% accurate can still give a wrong diagnosis 50% of the time? In testing for a rare condition, we scan 10 000 people. Only 1% (100 people) have the condition; 9900 do not. Of the 100 people who do have the disease, a 99% accurate test will detect 99 of the true cases, leaving one false negative. But a 99% accurate test will also produce false positives at the rate of 1%. So, of the 9900 people who do not have the condition, 1% (99 people) will be told erroneously that they do have it. The total number of positive tests is therefore 198, of which only half are genuine. Thus the probability that a positive test result from this “99% accurate” test is a true positive is only 50%.
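The same arithmetic can be written directly as Bayes’ theorem: the prevalence is the prior, and the test’s sensitivity (true-positive rate) and false-positive rate supply the likelihoods. The function and parameter names below are illustrative, and the rates are set to the 99% and 1% of the worked example above.

```python
def positive_predictive_value(prevalence, sensitivity, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem.

    prevalence: P(condition), the base rate that acts as the prior
    sensitivity: P(positive | condition)
    false_positive_rate: P(positive | no condition)
    """
    true_pos = sensitivity * prevalence
    false_pos = false_positive_rate * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

# Figures from the worked example: 1% prevalence, "99% accurate" test.
print(positive_predictive_value(0.01, 0.99, 0.01))  # 0.5
# Hypothetical rarer condition (0.1% prevalence), same test:
print(positive_predictive_value(0.001, 0.99, 0.01))
```

With a tenfold rarer condition, the chance that a positive result is genuine falls to roughly 9%, which is exactly the base-rate effect that intuition tends to ignore.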

As for base rates, Bayes’ theorem tells us to take these into account as “prior beliefs” – a crucial step that our mortal brains routinely overlook when judging probabilities on the fly.

All in all, that is quite a bit of evidence in favor of the argument that our brains are non‐Bayesian. But do not forget that we are dealing with the most complicated thing in the known universe, and these fascinating quirks and imperfections do not give a complete picture of how we think. One RSS presenter noted:

This kind of irrationality is most striking because it arises against a backdrop of our extreme competence. For every heuristics‐and‐biases study that shows that we, for instance, cannot update base rates correctly, one can find instances where people do update correctly.

Humans need to give themselves more credit for how well they make decisions. Over the past decade or two, behavioral economics has told a very particular story about human beings: that we are irrational and error‐prone, owing in large part to the buggy, idiosyncratic hardware of the brain. This self‐deprecating story has become increasingly familiar, but certain questions remain vexing. Why are four‐year‐olds, for instance, still better than million‐dollar supercomputers at a host of cognitive tasks, including vision, language, and causal reasoning?

So while our well‐documented flaws may shed light on the limits of our capacity for probabilistic analysis, we should not write off the brain’s statistical abilities just yet. Perhaps what our failings really reveal is that life is full of really hard problems, which our brains must try and solve in a state of uncertainty and constant change, with scant information and no time.

Critical thinking

Bayesian models of cognition remain a hotly debated area. Critics complain of too much post hoc rationalisation, with researchers tweaking their models, priors, and assumptions to make almost any results fit a probabilistic interpretation. The worry is that Bayesian brain theory could become a one‐size‐fits‐all explanation for human cognition:

If the probabilistic approach is to make a lasting contribution to researchers’ understanding of the mind, beyond merely flagging the obvious facts that people are sensitive to probabilities and adjust their beliefs (sometimes) in light of evidence, its practitioners must face apparently conflicting data with considerably more rigour. They must also reach a consensus on how models will be chosen, and stick to that consensus consistently.

Also, there are doubts about how far the Bayesian brain theory can go when it comes to higher‐level cognition. For a theory that purports to deal with updating beliefs, it struggles to explain the vagaries of belief acquisition, for instance. Good objections to our views leave us unmoved. Excellent objections cause us to be even more convinced of our views. It is the rare egoless soul who finds arguments persuasive for domains they care deeply about. It is somewhat ironic that the area for which Bayesianism seems most well suited – belief updating – is the area where Bayesianism has the most problems.

Staying alive

Stepping back from studies of the brain and looking at the bigger picture, some would take the fact that humanity is still here as evidence that something Bayesian is going on in our heads. After all, Bayesian decision‐making is essentially about combining existing knowledge and new evidence in an optimal fashion. Or, as the renowned French mathematician Pierre‐Simon Laplace put it, it is “common sense expressed in numbers”. It’s difficult to believe that any organism isn’t at least approximately Bayesian in its environment, given its limits. Even if people use a heuristic that’s not Bayesian, you could argue it’s Bayesian because of the utility argument: the effort you have to put in is small. Many decisions need to be fast decisions, so you have to take shortcuts sometimes. The shortcut itself is sub‐optimal and non‐Bayesian, but the fact that you take the shortcut every time means huge savings in effort and energy. This may add up to a more successful organism, one that’s more Bayesian than its competitor.

On the other hand, having a Bayesian brain can still lead us into trouble. Several of the RSS presenters explained how Bayesian inference is powerless in the face of strongly held irrational beliefs such as conspiracy theories and psychiatric delusions – because people’s trust in new evidence is so low. In this way, the mechanism by which a Bayesian brain updates beliefs can become corrupted, leading to false beliefs becoming reinforced by fresh evidence, rather than corrected.
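In odds form, Bayes’ theorem reads: posterior odds = prior odds × likelihood ratio. That makes the presenters’ point easy to see in a minimal sketch with invented numbers. A near-certain prior barely moves if the believer distrusts debunking evidence (a likelihood ratio close to 1), and it actually strengthens if the believer’s own model treats the debunking as something a cover-up would produce (a likelihood ratio above 1).

```python
def update_odds(prior_prob, likelihood_ratio):
    """Bayes' theorem in odds form.

    likelihood_ratio = P(evidence | belief true) / P(evidence | belief false).
    Values below 1 count against the belief; values above 1 count for it.
    """
    prior_odds = prior_prob / (1 - prior_prob)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)

prior = 0.99  # hypothetical: a strongly held belief

# A neutral observer treats a debunking report as strong evidence against (LR = 0.1).
print(round(update_odds(prior, 0.1), 3))
# A believer who distrusts the source barely discounts it (LR = 0.9).
print(round(update_odds(prior, 0.9), 3))
# A believer who reads the debunking as a cover-up treats it as support (LR = 2).
print(round(update_odds(prior, 2.0), 3))
```

Even the neutral observer’s single strong update leaves this belief above 90%; for the believer, the update mechanism itself works correctly, but the corrupted likelihoods turn fresh evidence into reinforcement.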

To be fair, not even the most fervent supporter of the Bayesian brain hypothesis would claim that the brain is a perfect Bayesian inference engine. But we could reasonably describe a brain as “Bayesian” if it were approximately optimal given its fundamental limitations. For example, people do not have infinite memory or infinite attentional capacity.

Another crucial caveat: the Bayesian aspects of our brains are rooted in the environment in which we operate. It’s approximately Bayesian given the hardware limitations and given that we operate in this particular world. In a different world we might fail completely. We would not be able to adjust.

Much of what the brain does is about making trade‐offs in difficult situations – rather than aiming for perfection, the challenge becomes how to manage finite space, finite time, limited attention, unknown unknowns, incomplete information, and an unforeseeable future; how to do so with grace and confidence; and how to do so in a community with others who are all simultaneously trying to do the same.

Plenty to learn

Clearly the Bayesian brain debate is far from settled. Some things our brains do seem to be Bayesian, others are miles off the mark. And there is much more to learn about what is really happening behind the scenes.

In any case, if our Bayesian powers are limited by the size of our brains, the time available, and all the other constraints of the environment in which we live (which differ from person to person and from moment to moment), then judging whether what is going on is Bayesian quickly becomes impossible. At some point it becomes a philosophical discussion whether you would call that Bayesian or not.

Even for those who are not convinced by the Bayesian brain hypothesis, the concept is proving a useful starting point for research. At this point, it’s more an idea guiding a research programme than a specific hypothesis about how cognition works.

Bayesian brain theories are used as part of rational analysis, which involves developing models of cognition based on a starting assumption of rationality, seeing whether they work, then reviewing them. The RSS panel’s concluding comments:

It turns out using this approach for making models of cognition works quite well. Even though there are ways that we deviate from Bayesian inference, the basic ideas behind it are right.

The idea of the Bayesian brain is a gift to researchers because it gives us a tool for understanding what the brain should be doing. And for a lot of what the brain does, Bayesian inference remains “the best game in town” in terms of a characterisation of what that ideal solution looks like.

Oh, and the COVID-19 graphic for the section Beneath the Surface came from a New York Times article titled “How to Think Like an Epidemiologist – Don’t worry, a little Bayesian analysis won’t hurt you” which you can read by clicking here.