Ah. The desire to catch a glimpse of what lies ahead.

17 August 2020 (Serifos, Greece) – A number of years ago a long-time business colleague invited me to join the Royal Statistical Society (RSS) in London. It is where I had my first instruction on graphical interpretations of data: the good, the bad, the ugly. It is also the place where I had my most intensive training in machine learning. These sessions and events are run by “data wranglers”, people who know every facet of how to digest, to apply, and to test data relying on statistics. The RSS was also the venue where I first learned about technology assisted review and predictive coding as applied in the e-discovery world, way before this technology hit the mainstream legal tech conference circuit. And it was this organization that three years ago launched a guide for the legal profession on how to use basic statistics and probability which I wrote about here. Also, please read my companion piece The misuse of statistics in court.

As I have noted before (and love repeating), the RSS is a wonderful organization, set up as a charity that promotes statistics, data and evidence for the public good. It is a learned society and professional body, working with and for members to promote the role that statistics and data analysis play in society, share best practices, and support many professions and industries that use statistics.

I just finished a multipart series on the history of prediction. Herein a few notes.

We are all fascinated by the future. Whether it is the rise and fall in interest rates, the outcome of elections, or winners at the Oscars, there is sure to be something you want to know ahead of time. There is certainly no shortage of pundits with ready opinions about what the future might hold – but their predictions might not be entirely reliable. Philip Tetlock, in his 2006 book Expert Political Judgment: How Good Is It? How Can We Know?, a 20-year study of prediction, showed that the average expert did little better than random guessing on many of the political and economic questions asked of them. David Orrell, in his 2014 updated book The Future of Everything: The Science of Prediction, would find that data science had improved that percentage by almost 70%.

But expert predictions are only part of the forecasting story (see the Appendix “Prediction versus forecasting” at the end of this post). A raft of methods – from mathematical models to betting markets – are promising new ways of seeing into the future. And it is not only academics and professionals who can do it – specialized online services have become so sophisticated and easy to use that anyone can have a go.

Forecasting could probably stake a claim to being one of the world’s oldest professions. Beginning in the eighth century BC, a priestess known as the Pythia would answer questions about the future at the Temple of Apollo on Greece’s Mount Parnassus. It is said that she, the Oracle of Delphi, dispensed her wisdom in a trance – caused, some believe, by the hallucinogenic gases that would seep up through natural vents in the rock. For an interesting read about those gases … and, no, this piece was not part of this program … click here.

By the second century BC, the ancient Greeks had moved on to more sophisticated methods of prediction, such as the Antikythera mechanism, whose intricate bronze gears seemed capable of predicting a host of astronomical events such as eclipses.

Over time, our astronomical predictions became more refined, and in 1687 Isaac Newton published his laws of motion and gravitation. Newton’s friend Edmond Halley predicted in 1705 the return of the comet that now bears his name. But forecasters also started to concern themselves with more mundane, earthly matters. By the nineteenth century, the new technology of long‐distance telegrams meant that, for the first time, data from a network of weather stations could be transmitted in advance of changing conditions. This did not only spur developments in meteorology. People began to believe that similar scientific measurements might be useful in other areas, such as business.

Forerunners

Early economic forecasters, such as Roger Babson, are profiled by Walter Friedman in his book Fortune Tellers. Their work was not only inspired by weather forecasters, said Friedman:

It developed because of the sharp ups and downs of prices in the late nineteenth and early twentieth century, coupled with the desire of businesspeople to make future plans. Babson developed his service, for instance, after the 1907 panic. The way he shaped his forecasting method was reliant on traditions of barometers, ideas about the business cycle, and the availability of data.

Whereas Babson’s method looked at trends in data over time, his contemporary, Irving Fisher, built a machine to model how the flow of supply and demand of one commodity affected that of others. It was a hydraulic computer. When Fisher adjusted a lever, water flowed through a series of tubes to restore equilibrium between the prices of goods.

A similar device was built by William Phillips at the London School of Economics in 1949. It used channels of colored water to replicate taxes, exports and spending in the British economy. Babson was alone among his contemporaries in predicting the 1929 stock market crash. However, says Friedman, he and other early forecasters made assumptions that were very simplistic and often misguided.

Wrong though they may have been, the methods employed by Babson and Fisher were forerunners of the range of statistical tools available today. Babson worked with time series, plotting the aggregate of variables like crop production, commodity prices and business failures on a single chart that forecast how the economy would fare. Fisher’s hydrostatic machine, on the other hand, did not include a time element. In Friedman’s book, the machine is described as the grandparent of the economic forecasting models developed after World War II and run on computers. For my readers who are interested in the history of computers, Friedman’s book is an excellent read.

The science of forecasting

Techniques that we know today were refined in a variety of different fields, said Rob Hyndman, professor of statistics at Monash University in Australia:

In the last 50 years or so, people started doing more time series models that try to relate the past to the future. They became extremely useful in sales forecasting and in predicting demand for items. In other fields such as engineering, they started trying to build models to predict things like river flow based on rainfall. Models for electricity demand were developed so that they could plan generation capacity.

The science of forecasting went full gear in the 1980s. People realized that if you took all of the ideas that people had developed in different fields, and you thought of it as a collection of techniques and overlaid that with analytical and scientific thinking, then forecasting itself could be considered a scientific discipline.

But how do we know whether or not quantitative forecasting will work in any given area?

Hyndman believes the predictability of an event boils down to three factors:

1. The first is whether you have an idea of what’s driving it – the causal factors. So you might just have data on the particular thing you’re interested in, but you don’t understand why the fluctuations occur or why the patterns exist in the data. You can still forecast it, but not so well. If you haven’t understood the way in which the thing you’re interested in reacts to the driving factors, you’re going to lose that coupling over time.

2. The availability of data

3. Whether a forecast will itself affect what it is that you are trying to predict. If an exchange rate rise is predicted, for instance, it will affect prices in the real markets, which will end up influencing the rate itself.

These factors aside, a successful forecaster also needs a toolbox of statistical methods and the know‐how to pick the right method for a particular situation, says Hyndman (see the Appendix “Forecasting techniques explained” at the end of this post).

Statistical models, though, only work in the short term. They’re not very good for long‐term forecasting because the big assumption – that the future looks similar to the past – slowly breaks down the further you get into the future. Even the near future. Many of the “this-will-be-the-new-world” predictions folks were making in March, as COVID-19 swirled around us, are in the dust … and it’s only August.

Then there are problems where there’s just not really enough data to be able to build good models, or situations that are not reflected at all historically, such as technological changes. There’s no data available that will tell you what’s going to happen.

Superforecasters

Unforeseen developments – whether technological, political or social – pose an interesting dilemma for those whose job is to anticipate such things: national security agencies, for instance. What if such developments are predictable, not from a single data set or time series, perhaps, but from the aggregated opinions of groups of individuals? We used a model from 2011 to look at that.

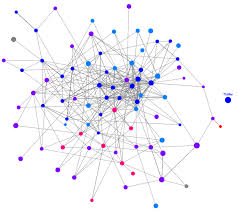

In 2011, the Aggregative Contingent Estimation (ACE) programme set out to answer that question, with funding from the Intelligence Advanced Research Projects Activity (IARPA). ACE announced a forecasting tournament that would run from September 2011 to June 2015, in which five teams would compete by answering 500 questions on world affairs. One of the teams was the Good Judgment Project (GJP), which was created by Barbara Mellers and political scientist Philip Tetlock, the man behind the 2006 research on expert predictions.

The GJP attracted over 20,000 wannabe forecasters in its first year. In his 2015 book, Superforecasting, Tetlock recalls how the forecasters were asked to predict “if protests in Russia would spread, the price of gold would plummet, the Nikkei would close above 9500, or war would erupt on the Korean peninsula”. The GJP won the tournament hands down. According to Tetlock, it beat a control group by 60% in its first year and 78% in its second year.

After that, IARPA decided to drop the others, leaving the GJP as the last team standing. The GJP continually assigned its participants an ever‐changing rating called a “Brier score”, which measures the accuracy of predictions on a scale from 0 to 2; the lower the number, the more accurate the prediction. By doing so, they were able to identify the best among them, whom they called superforecasters. Some superforecasters were plucked from the crowd and placed in 12‐person “superteams” that could share information with each other. Would the superteams perform any better? It turns out they did. Superteams were pitted against teams of regular forecasters. They also competed against individual forecasters, whose forecasts were aggregated to produce an unweighted average prediction. This average represented the “wisdom of the crowds”, an idea put forward in 1907 by the statistician Sir Francis Galton, who proposed that the accumulated knowledge of a group of people could be more accurate than individual predictions.
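For readers who like to see the arithmetic, here is a minimal sketch in Python of a Brier score and of an unweighted crowd average. It assumes the original multi-category form of the score (squared errors summed over every possible answer), which is the form that gives a 0 to 2 range. The function names and the three forecasters are made up for illustration, and this is not the GJP's actual scoring code.

```python
def brier_score(probs, outcome):
    """Brier score for one question, summed over all possible answers.

    probs   -- forecast probabilities, one per possible answer (should sum to 1)
    outcome -- index of the answer that actually happened
    Returns 0.0 for a perfect forecast and 2.0 for a fully confident miss.
    """
    return sum((p - (1.0 if i == outcome else 0.0)) ** 2
               for i, p in enumerate(probs))


def crowd_average(forecasts):
    """Unweighted average of several forecasters' probabilities."""
    n = len(forecasts)
    return [sum(f[i] for f in forecasts) / n for i in range(len(forecasts[0]))]


# Hypothetical yes/no question; three made-up forecasters give [P(yes), P(no)].
forecasts = [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7]]
outcome = 0  # "yes" is what actually happened

individual_scores = [brier_score(f, outcome) for f in forecasts]
crowd_score = brier_score(crowd_average(forecasts), outcome)

print("individual:", [round(s, 2) for s in individual_scores])
print("crowd:     ", round(crowd_score, 2))
```

Because the squared error is convex, the averaged forecast can never score worse than the average of the individual scores, which is one simple way to see why a crowd average is a tough benchmark to beat.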

The superteams faced one final group of competitors: forecasters who had been assigned to work as traders in prediction markets, a popular form of forecasting in which people place bets on the outcome they think is most likely to happen:

The results were clear‐cut. Teams of ordinary forecasters beat the wisdom of the crowd by about 10%. Prediction markets beat ordinary teams by about 20%. And superteams beat prediction markets by 15% to 30%.

Want to bet?

The fact that the superteams were able to beat the markets was something of a surprise. Futures exchanges, such as the Iowa Electronic Markets (IEM) and PredictIt, have an enviable track record in predicting outcomes, especially political outcomes. In November 2015, Professor Leighton Vaughan Williams, director of the Betting Research Unit at Nottingham Business School, and his co‐author Dr James Reade, published “Forecasting Elections”, a study that compared prediction markets to more traditional methods:

We got huge amounts of data from InTrade and Betfair, plus statistical modelling, expert opinion and every opinion poll. We compared them over many years and literally hundreds of different elections using state‐of‐the‐art econometrics. We found that prediction markets significantly outperformed the other methodologies included in our study, and even more so as you get closer to the event.

Similarly, a study conducted by Joyce E. Berg and colleagues, published in 2008, compared IEM predictions to the results of 964 polls over five US presidential elections since 1988. They found that the market is closer to the eventual outcome 74% of the time and that the market significantly outperforms the polls in every election when forecasting more than 100 days in advance.

So why were prediction markets beaten by superforecasters? One reason could be that the prediction markets in Tetlock’s contest lacked “liquidity” – in other words, they did not feature substantial amounts of money or activity. Vaughan Williams believed liquid markets would normally win for particular kinds of event:

If you ask superforecasters to beat a market on who’s going to win the Oscars or the Super Bowl or Florida in the 2020 US election they’d find it tough – because millions of pounds will be traded. But if you ask them: ‘Will Boris Johnson have a meeting with Emmanuel Macron Thursday night?’, in that situation there’s no real market for them to beat.

Markets are not very good at predicting things that are inherently unpredictable, however.

A prediction market can’t aggregate information on something that people can’t work out, like the Lottery or earthquakes. You know where earthquakes are more likely to happen, but you can’t predict on what date they’ll occur. Foreseeing terrorist attacks is something else that markets are just not built for. Terrorists are hardly going to be putting their money in and tipping their hand – if anything, they would be doing the opposite.

NOTE: Future attacks by terrorist organizations are difficult to predict because their organizational capabilities and resources are hidden. But we did discuss a fascinating paper published last year in Science magazine which has been peer reviewed. The authors developed a modeling approach for estimating these parameters to predict future terror attacks. By testing their model against the Global Terrorism Database, they could explain about 60% of the variance in a terrorist group’s future lethality using only its first 10 to 20 attacks, outperforming previous models. The model also captures the dynamics by which terror organizations shift from random, low-fatality attacks to nonrandom, high-fatality attacks. These results have implications for efforts to combat terrorism worldwide.

A second paper was issued this past March. Machine learning methods were used to develop techniques for counterterrorism based on artificial intelligence. Since deep learning has recently gained more popularity in the machine learning domain, these techniques are explored to understand the behavior of terrorist activities. Five different models based on deep neural networks were created to understand and possibly predict the behavior of terrorist activities. These papers deserve a lengthy review and it is on my “to do” list.

But getting back to Vaughan Williams, he said prediction markets have another advantage:

Often what’s just as important as knowing what the future will be is knowing it before somebody else. There has been a stream of papers on the coronavirus/influenza market over the last 5 years which now, in hindsight, were effective for predicting outbreaks – but were ignored. It’s because of our advanced ability to collect and aggregate information from everywhere in real time, second by second. But somebody needs to actually read this stuff, and act upon it.

Managing complexity

Picking up on that theme, predicting epidemics was an area where statistical forecasting methods struggled. But there has been steady progress in analyzing weather, climate, trade flows, human movement, etc. in what the presenters called the “quiet revolution” in numerical aggregation and analysis. It is due to the improvements driven by number‐crunching power from supercomputers, backed by a hierarchy of models of varying complexity, plus superb global data from satellites. But modelling complex systems like the climate or pandemics does have its limits. Said one presenter:

My experience is more with climate. If you look at the formation of a cloud, you have an interaction between minute particles of something that forms a seed for a droplet. The droplet grows, and that process is incredibly non‐linear and very sensitive to small changes. It involves things over all scales, from the microscopic scale to the scale of a cloud. I hear much the same from my colleagues involved in forecasting the effects of cancer drugs. Organic systems like the climate and the human body are fundamentally different from the kinds of mechanistic systems we are good at modelling. The dream is that if we just add more and more levels of detail we’d be able to capture this behavior, but there’s a fundamental limitation to what you can do with mechanistic models.

Whatever next?

So how will forecasting evolve in the future? In terms of opinion‐based predictions, I suppose we look no further than Almanis, a cross between a prediction market and the Good Judgment Project.

Describing itself as a “crowd wisdom platform”, Almanis incentivises forecasters with points, not pounds, although it awards real money prizes to the most accurate users. It is a commercial entity, making money by charging companies or governments to post questions. Services like Almanis will become more and more common within the next 5 years. One presenter noted:

As academic research further improves their efficiency I think they’ll become a key part of corporate forecasting and information aggregation. Say I want to reduce the waiting list in an eye clinic and I’ve got a budget of £100,000. Should I hire a doctor, or two nurses? A prediction market can give you that sort of information.

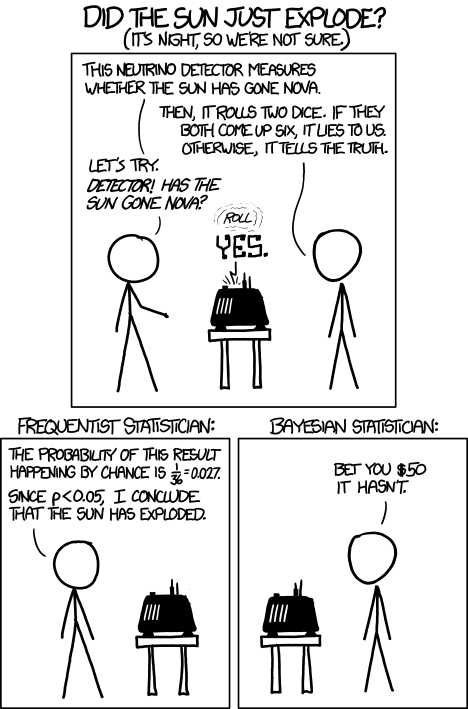

In terms of mathematical forecasting, our methods are being developed to cope with these massive, complex data streams. One trend that’s happening at the moment is that a lot of the techniques that computer scientists have developed in machine learning in other fields are coming into forecasting.

It’s very interesting to see what’s going on. Statistical models are being used to combine other types of predictions into meta‐forecasts. One example is PredictWise, a tool that combines information from prediction markets, opinion polls and bookmakers’ odds to come up with probabilities for everything from the next James Bond film (they were spot on) to the likelihood of the UK leaving the European Union (again, they were spot on). They picked Biden as the U.S. Democratic nominee for the 2020 Presidential election. Scores of journalists are watching their numbers for the 2020 U.S. Presidential Election, and their predictions for the U.S. Congressional elections.
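To make the idea of a meta‐forecast concrete, here is a rough Python sketch that blends three sources into one set of probabilities with a weighted average. To be clear, this is not PredictWise's method, which is not described here. The weights, the input numbers and the function names are all invented for illustration. The one standard piece is the conversion of bookmakers' decimal odds to implied probabilities (1 divided by the odds, rescaled to sum to 1).

```python
def implied_probabilities(decimal_odds):
    """Turn bookmakers' decimal odds into probabilities that sum to 1.

    1/odds gives the raw implied probability; dividing by the total strips
    out the bookmaker's margin in the simplest possible way.
    """
    raw = [1.0 / o for o in decimal_odds]
    total = sum(raw)
    return [r / total for r in raw]


def combine(sources, weights):
    """Weighted average of several probability vectors over the same outcomes."""
    total_weight = sum(weights)
    n_outcomes = len(sources[0])
    return [sum(w * s[i] for s, w in zip(sources, weights)) / total_weight
            for i in range(n_outcomes)]


# Made-up inputs for a two-outcome event.
market = [0.30, 0.70]                        # prediction market prices
polls = [0.48, 0.52]                         # poll-based probabilities (assumed given)
bookies = implied_probabilities([3.5, 1.3])  # bookmakers' decimal odds

# Hypothetical weights; a real service would have to estimate these from data.
meta_forecast = combine([market, polls, bookies], weights=[0.5, 0.2, 0.3])
print([round(p, 3) for p in meta_forecast])
```

A plain weighted average is the simplest possible combiner. The statistical models mentioned above would presumably learn how much to trust each source rather than fix the weights by hand.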

But probabilities are not the same as certainties. Vaughan Williams:

People said the prediction markets had got the U.S. 2016 election all wrong but, in fact, several nailed it. But as any statistician would know, what they’re saying is that one time in five it’s going to happen. As for mathematical models, they are always just simplifications of reality – and life is sometimes too complex to model, whether accurately or approximately.

The future, or parts of it, therefore, will remain unforeseen. But it is safe to predict that forecasters will keep trying to catch a glimpse of what lies ahead.

* * * * * * * * * * * * * * *

APPENDIX 1

Forecasting techniques explained

The different kinds of statistical forecasting methods:

Time‐series forecasts

A purely time‐series approach just looks at the history of the variable you are interested in and builds a model that describes how that has changed over time. You might look at the history of monthly sales for a company. You look at the trends and seasonality and extrapolate it forward, but you do not take any other information into account.
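As an illustration of "trend plus seasonality, extrapolated forward", here is a small Python sketch of one of the simplest such methods: repeat the value from the same month a year earlier and add the average yearly growth (often called a seasonal naive forecast with drift). The sales figures are invented and the function name is my own; real forecasting tools use more careful models than this.

```python
def seasonal_naive_with_drift(history, period, horizon):
    """Forecast by repeating the last season and adding the average trend.

    history -- past observations, oldest first
    period  -- length of one season (12 for monthly data with a yearly cycle)
    horizon -- number of future steps to forecast
    """
    if len(history) < 2 * period:
        raise ValueError("need at least two full seasons of history")

    # Average change per step, estimated from same-season differences.
    diffs = [history[t] - history[t - period]
             for t in range(period, len(history))]
    slope = sum(diffs) / (len(diffs) * period)

    n = len(history)
    forecasts = []
    for h in range(1, horizon + 1):
        seasons_back = -(-h // period)               # ceil(h / period)
        source = n - 1 + h - seasons_back * period   # same month, latest year
        forecasts.append(history[source] + slope * seasons_back * period)
    return forecasts


# Made-up monthly sales: steady growth of 2 units a month plus a yearly pattern.
pattern = [0, -2, 1, 3, 5, 8, 10, 9, 4, 2, 6, 14]
sales = [100 + 2 * t + pattern[t % 12] for t in range(36)]

next_year = seasonal_naive_with_drift(sales, period=12, horizon=12)
print(next_year)
```

Note that nothing except the sales history itself goes in, which is exactly what makes this a "purely time‐series" approach.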

Explanatory models

This is where you relate the thing you are trying to forecast with other things that might affect it. So if you are forecasting sales you might also take into account population and the state of the economy. All you actually need is for the drivers to be good predictors of the outcome, whether or not there is a direct causal relationship or something more complicated. You can also have models that combine the two. So you have some time series and some other information, and you build a model that puts it all together.
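A bare‐bones Python sketch of an explanatory model: an ordinary least‐squares line relating sales to a single driver. The "economic index", the sales numbers and the assumed future value of the index are all hypothetical. A real model would typically use several drivers at once.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Made-up history: a hypothetical economic index and the sales seen alongside it.
economic_index = [98, 100, 103, 105, 110]
sales = [196, 201, 205, 211, 219]

intercept, slope = fit_line(economic_index, sales)

# To forecast sales we first need a value for the driver itself (assumed here).
assumed_index_next_quarter = 112
sales_forecast = intercept + slope * assumed_index_next_quarter
print(round(sales_forecast, 1))
```

The catch shows up in the last few lines: the forecast is only as good as the value you plug in for the driver, which often has to be forecast in its own right.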

Probabilistic forecasts

For a long time people have been producing predictions with prediction intervals – giving a statement of uncertainty using probabilities. The new development is not just giving an interval but giving the entire probability distribution as your forecast. So you will say, “Here’s a number: the chance of being below this is 1%. Here’s another number: the chance of being below this is 2%,” and so on over 100 percentiles.

But if you are giving an entire probability distribution, you cannot measure the accuracy of your forecast in a simple way. New techniques for measuring forecast accuracy that take this into account have become popular in the last five years or so: these are called probability scoring methods.
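One example of such a scoring method is the quantile (or "pinball") loss, sketched below in Python. It is only one of several probability scores in use, and I am assuming it here for illustration rather than claiming it is the one the speakers had in mind. The percentile levels and forecast values are invented.

```python
def pinball_loss(level, forecast, actual):
    """Penalty for one percentile forecast.

    level    -- the percentile as a fraction, e.g. 0.9 for the 90th percentile
    forecast -- the value you claimed the outcome would fall below with
                probability `level`
    actual   -- what really happened
    """
    if actual >= forecast:
        return level * (actual - forecast)
    return (1.0 - level) * (forecast - actual)


def quantile_score(levels, forecasts, actual):
    """Average pinball loss across all the percentiles in a forecast."""
    losses = [pinball_loss(q, f, actual) for q, f in zip(levels, forecasts)]
    return sum(losses) / len(losses)


levels = [0.1, 0.5, 0.9]

# Two made-up forecasts of the same quantity: one narrow, one very wide.
narrow = [8, 10, 12]
wide = [0, 10, 20]
actual = 10

print("narrow:", round(quantile_score(levels, narrow, actual), 3))
print("wide:  ", round(quantile_score(levels, wide, actual), 3))
```

Lower is better. Both forecasts here have the right median, but the needlessly wide one is penalized for being vague, so the score rewards distributions that are both accurate and tight.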

* * * * * * * * * * * * * * *

APPENDIX 2

Prediction versus forecasting

The terms “prediction” and “forecasting” are often used interchangeably – as was the case in this series. But as far as anyone has managed to pin down a definition, one school of thought holds that forecasting is about the future – tomorrow’s temperature, for example. Prediction, in contrast, involves finding out about the unobserved present. If you want to determine how much your house will sell for, you could make a prediction based on the prices of houses in your neighborhood.

That was the attempt: to differentiate predictive analytics from forecasting. The point was that predictive analytics is something else entirely, going beyond standard forecasting by producing a predictive score for each customer or other organizational element, for instance. In contrast, forecasting provides overall aggregate estimates, such as the total number of purchases next quarter. For example, forecasting might estimate the total number of ice cream cones to be purchased in a certain region, while predictive analytics tells you which individual customers are likely to buy an ice cream cone.

Galit Shmueli said:

“The term ‘forecasting’ is used when it is a time series and we are predicting the series into the future. Hence ‘business forecasts’ and ‘weather forecasts’. In contrast, ‘prediction’ is the act of predicting in a cross‐sectional setting, where the data are a snapshot in time (say, a one‐time sample from a customer database). Here you use information on a sample of records to predict the value of other records (which can be a value that will be observed in the future).”

She explained it in more detail in a 2016 video made part of the series:

The methodologies underlying predictability and forecasting are prevalent in so much of our everyday life. I can easily relate the three factors of predictability to a current project I’m undertaking (which is similar to most project approaches I use), updating and reforming a Records Management/Information Governance program for a large company; without going into boring detail, I implement the following:

1) I first determine the “drivers” or underlying reasons that create or cause the current environment, which is far more important than simply saying “you need new software” or “new policy”, etc.;

2) I then look at the data, which in many cases involves looking at what/how certain tools or apps are used, performance data, or other reportable information specific to the task at hand;

3) The impact of my findings and recommendations; a simple example would be determining how a “cleaner” or more-usable and accurate retention schedule will impact eDiscovery processes, or offsite storage needs, etc.

This is just one example of the impact of successful predictability, reduced down to simple, everyday tasks that should be routine but are often bypassed when quick solutions are sought. New, highly-sophisticated techniques and modeling for forecasting and predictions are extremely powerful, as Mr. Bufithis explains so clearly here. Thank you for an insightful article.

Thanks, Aaron. I hope to expand the article (or maybe a “Part 2”) and delve a bit deeper into the new, highly-sophisticated techniques and modeling for forecasting and predictions.