An avalanche of images offers unsettling insights into bias

12 January 2020 (Brussels, Belgium) – If you are in or around London, or going to London, in the next month, I highly recommend the Trevor Paglen exhibit at the Barbican (I saw it last month). My film crew has been in London this past week working on our AI documentary. I gave them a “weekend extra” to enjoy themselves, and they had a chance to see the exhibit. I wrote about Trevor last year. He is an American artist, geographer, and author whose work tackles mass surveillance and data collection.


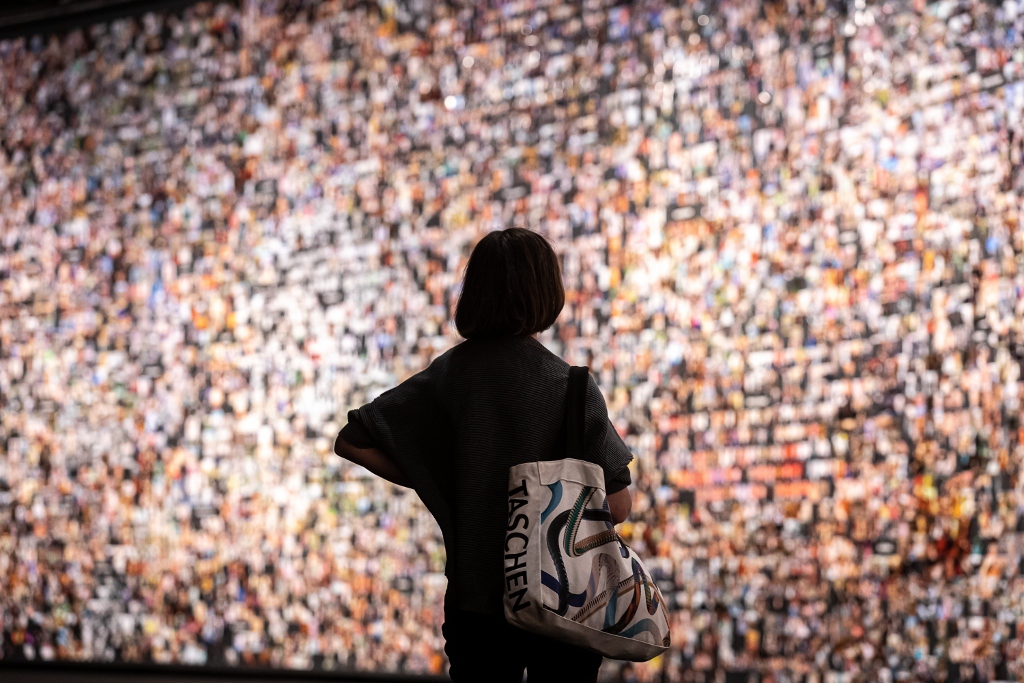

Some 35,000 images follow The Curve’s long and narrow arc, from floor to ceiling. Each is snapshot-size, and from a distance they form a pleasingly decorative mosaic. Zoom in and it gets complicated. The pictures come from ImageNet, a dataset that divides the visual world into 21,000 categories and is used to train the artificial intelligence systems that surround and surveil us.

I have written about ImageNet a few times. It is one of the most widely shared, publicly available collections of images out there – and it is also used to train artificial intelligence networks. It’s available online, so you can have a go at searching its huge image bank. Apparently, ImageNet contains more than fourteen million images organized into more than 21,000 categories or ‘classes’. It is a go-to source for machine learning.

Paglen has chosen keywords and arranged images in clusters around them. The categories blur and the images, though fixed on the wall, heave and swell across it. There are moments of beauty – a cluster of lichen, for instance – but the longer one looks, the more problematic it becomes: why is one image labelled “alcoholic” and a similar one “wine lover”? Why do the images around “convict” predominantly feature black people? Why is “fucker” mostly surrounded by half-naked women?

Paglen’s project exposes the dark heart of machine learning. There is no AI system free from emotion and bias. ImageNet was created at U.S. universities and built from pictures taken from Flickr. Crowdsourced workers labelled the pictures, and that’s where the racist and misogynist stereotypes creep in – a prejudiced ghost lurks in the machine.

Trevor takes as his starting point the way artificial intelligence networks are taught to “see”, “hear” and “perceive” the world by engineers who feed them vast “training sets”.

Standard “training sets” consist of image, video and sound libraries that depict objects, faces, facial expressions, gestures, actions, speech commands, eye movements and more. The point is that the way these objects are categorized, labelled and interpreted is not value-free: the human categorizers inevitably bring all kinds of subjective and value judgements to the task, and this subjective element can lead to all kinds of wonky outcomes.

Thus Trevor wants to point out that the ongoing development of artificial intelligence is rife with hidden prejudices, biases, stereotypes and plain wrong assumptions, and that this process starts (in some iterations) with the scanning of vast reservoirs of images, such as the one he’s created here. To quote from my piece on Trevor last year:

Machine-seeing-for-machines is a ubiquitous phenomenon, encompassing everything from facial-recognition systems conducting automated biometric surveillance at airports to department stores intercepting customers’ mobile phone pings to create intricate maps of movements through the aisles. But all this seeing, all of these images, are essentially invisible to human eyes. These images aren’t meant for us; they’re meant to do things in the world; human eyes aren’t in the loop.

So where’s the work of art?

Well, the Curve is that long, tall, curving exhibition space at the Barbican, which I am sure you know if you have been to exhibits there. It is so uniquely shaped that the curators commission works of art specifically for its shape and structure. For his Curve work Trevor had the bright idea of plastering the long curving wall with those 35,000 individually printed photographs, pinned in a complex mosaic along the immense length of the curve. It has an awesome impact. That’s a lot of photos.

In most cases, the connotations of image categories and names are uncontroversial (e.g. ‘strawberry’ or ‘orange’), but many others are ambiguous and/or a question of judgement – such as ‘debtors’, ‘alcoholics’ and ‘bad people’. As the old computer programming cliché has it: ‘garbage in, garbage out.’ If artificial intelligence programs are being taught to teach themselves based on highly questionable and subjective premises, we shouldn’t be surprised if they start developing errors, extrapolating and exaggerating their initial biases into wild stereotypes and misjudgements.

So, according to the sign at the exhibit entrance, the purpose of From Apple to Anomaly is to:

Question the content of the images which are chosen for machine learning. These are just some of the kinds of images which researchers are currently using to teach machines about “the world”.

Conceptually, it seemed to me that the work doesn’t go much further than that. It does have a structure of sorts: when you enter, the first images are of the uncontroversial ‘factual’ type – specifically, the simple concept ‘apple’.

Nothing can go wrong with images of an apple, right? Then, as you walk along the wall, the mosaic widens like a funnel, with a steady increase in other categories of all sorts, until the entire wall is covered and you are being bombarded by images arranged according to (what looks like) a fairly random collection of themes. (The themes are identified by black cards with clear white text, as in ‘apple’ below, placed at the centre of each cluster of images.)

Having read the blurb about how words, and AI interpretations of words, become increasingly problematic as the words become more abstract, I expected the concepts to start simple and grow steadily vaguer. But the work is not, in fact, like that – it’s much more random, so that quite specific categories – like ‘paleontologist’ – can be found at the end while quite vague ones crop up very early on.

THE BIG VIEW

Most people are aware that Facebook harvests their data, just like Google and all the other big tech giants: Instagram, Twitter, blah blah blah. The disappointing reality for deep thinkers like Trevor is that most people, quite obviously, don’t care. As long as they can instant message their mates or post photos of their cats for the world to see, most people don’t appear to give a monkey’s ass what these huge American corporations do with the incalculably vast tracts of data they harvest and hold about us. Oh, yes, they hit the comment boards, but they still use the tech and rely on it.

I think the same is true of artificial intelligence. Most people don’t care because they don’t think it affects them now or is likely to affect them in the future. So some broad points:

1. American bias

Most of the major books on AI are written by Americans and draw their examples from America.

One exception: facial recognition technology. The Chinese own this. More to come.

And when you dig deep you find that AI, insofar as it is applied in the real world, tends to exacerbate inequalities and prejudices which already exist. In America. The examples about America’s treatment of its black citizens, or the poor, or the potentially dreadful implications of computerised programmes for healthcare, particularly for the poor – all these examples tend to be taken from America, which is a deeply and distinctively screwed-up country. My point is that a lot of the scaremongering about AI turns out, on investigation, really to reflect the scary nature of American society, its gross injustices and inequalities.

2. Britain is not America

As much as I call Britain the 51st state (sorry, Puerto Rico), it is a different country, with different values, run in different ways. Jack Mabry, my AI guru at DeepMind in London:

I take the London Underground or sometimes the overground train service every day. Every day I see the chaos and confusion as large-scale systems fail at any number of pressure points. The idea that learning machines are going to make any difference to the basic mismanagement and bad running of most of our organisations seems to me laughable. From time to time I see headlines about self-driving or driverless cars, sometimes taken as an example of artificial intelligence. OK. At what date in the future would you say that the majority of London’s traffic will be driverless cars, lorries, taxis, buses and Deliveroo scooters? In ten years? Twenty years? Never?

3. The triviality of much AI

There’s also a problem with the triviality of much AI research. I’ve read (too many) articles about AI, and I quickly get bored of reading how supercomputers can now beat chess grandmasters or world champions at the complex game of Go. I can hardly think of anything more irrelevant to the real world. Last year the Barbican itself hosted an exhibition, AI: More Than Human, but the net effect of its scores of exhibits and interactive doo-dahs was to show how trivial and pointless most of them were.

4. No machine will ever ‘think’

And this brings us to the core of the case against AI, which is that it’s impossible. And I’m talking artificial general intelligence (AGI) here: the intelligence of a machine that can understand or learn any intellectual task that a human being can. Creating any kind of computer program which ‘thinks’ like a human is, quite obviously, impossible. This is because people don’t actually ‘think’ in any narrowly definable sense of the word. People reach decisions, or just do things, based on thousands of accumulated impulses and experiences, unique to each individual, and so complicated and, in general, so irrational, that no programs or models can ever capture them. The long, detailed Wikipedia article about artificial intelligence includes this:

Moravec’s paradox generalizes that low-level sensorimotor skills that humans take for granted are, counterintuitively, difficult to program into a robot; the paradox is named after Hans Moravec, who stated in 1988 that ‘it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility’.

Intelligence tests, chess, Go – tasks with finite rules of the kind computer programmers understand – are relatively easy to program. The infinitely complex billions of interactions which characterise human behaviour are impossible to program.

5. People are irrational

As most readers know, I completed an AI program at ETH in Zurich and a neuroscience program at Cambridge, both a combination of in-class teaching and MOOCs. Now I am enrolled in an art/literature and history program via the University of Thessaloniki. Through all of these studies one thing comes across: how irrational, perverse, weird and unpredictable people can be, as individuals and in crowds. This is especially true of my art/literature study, because the behavior of people is the subject matter of novels, plays, poems and countless artworks; the really profound, bottomless irrationality of human beings is – arguably – the subject matter of the arts.

People smoke and drink and get addicted to drugs (and computer games and smartphones); people follow charismatic leaders like Hitler or Donald Trump. People, in other words, are semi-rational animals first, and only a long, long way afterwards rational, thinking beings – and even then only rational in limited ways, around specific goals set by their life experiences, jobs or current situations.

Hardly any of this can be factored into any computer program. Last year as part of the preparation for our AI documentary I was invited into the IT department of a large American corporation. From one of the employees I met:

Let me tell you. Users. Damn their eyes. They keep coming up with queer and unpredicted ways of using our system which none of our program managers, project managers, designers or programmers had anticipated ANY of it!

Yep. People keep outwitting and outflanking the computer systems because that’s what people do. Not because any individual person is particularly clever, but because, taken as a whole, people here, there and across the range stumble across flaws, errors, glitches, bugs and unexpected combinations, and don’t do what ultra-rational computer scientists and data analysts expect them to. Stop it, dammit!

6. It doesn’t work

The most obvious thing about tech is that it’s always breaking. My team just finished a week at CES 2020 in Las Vegas and they are writing up a doozy of a review. One lovely tidbit from an IT department staffer at the show (OK, they plied him with drinks):

I am on the receiving end of a never-ending tide of complaints and queries about why this, that or the other functionality our tech has broke. Some of our stuff is always broken. I deal with bugs and glitches but the large, structural problems are due to the enormous complexity of these systems. People do not get it. My boss does not get it. If you only knew the developer time and budget expended.

7. Big government, dumb government

I had an inside seat on the development of one of Europe’s data works of art: the new GDPR. It was an education in bureaucratic mindsets, and in the ability of Big Tech to manipulate the system so that any real “change” in the area of data protection was strangled to death. More next week in a special post.

So when I see governments rallying around “we must regulate AI!”, I cringe. No amount of prejudice or stereotyping in image recognition (to take one of the anti-AI campaigners’ biggest worries) will ever compete with the plain bad, dumb, badly thought-out, terribly implemented and often cack-handedly horrible decisions which governments and their bureaucracies always take. Any remote and highly speculative threat that some AI programs may or may not be compromised by partial judgements and bias is dwarfed by the bad judgements and stereotyping which characterise our society and, in particular, our governments, in the present, in the here-and-now.

8. Destroying the world

And just a heads up. It isn’t artificial intelligence which is opening a new coal-fired power station every two weeks, or building 100 new airports and manufacturing 75 million new cars and burning down tracts of rainforest the size of Belgium every year. The meaningful application of artificial intelligence is decades away, whereas good old-fashioned human stupidity is destroying the world here and now in front of our eyes, and nobody cares very much.

Summary

So, back to the exhibit. I liked it not because of the supposed warning it makes about artificial intelligence – and quite frankly, it doesn’t really give you very much background information to get your teeth into or ponder; it’s a visual thing – but because:

• it is huge and awesome and an impressive thing to walk along – so “American”! So big!

• its stomach-churning glut of imagery is testimony to the vast, unstoppable, planet-wasting machine which is … humanity