11 November 2019 (Washington, DC) — Times are, indeed, interesting. My weekend was filled with activities at the Smithsonian space museums and libraries across D.C. Oh, and the Apple credit card “AI bias story”.

Two very powerful outfits, Apple (the “we-really-mean-privacy!” outfit that has a thing for Chinese food) and Goldman Sachs (the we-make-money-every-way-possible organization) are the subject of the story “Viral Tweet about Apple Card Leads to Goldman Sachs Probe”.

On November 7th, web entrepreneur David Hansson posted a now-viral tweet that the Apple Card had given him 20 times the credit limit of his wife. This was despite the fact they filed joint tax returns and, upon investigation, his wife had a better credit score than he did. Apple co-founder Steve Wozniak tweeted that he had experienced the same issue.

Upshot: New York’s Department of Financial Services has launched an investigation into the algorithm run by Goldman Sachs, which manages the card. Its superintendent, Linda Lacewell, said in a blog post that the watchdog would “examine whether the algorithm used to make these credit limit decisions violates state laws that prohibit discrimination on the basis of sex.”

Wider problem: over the weekend Goldman Sachs posted a statement on Twitter saying that gender is not taken into account when determining creditworthiness. But the unexplainable disparity in the card’s credit limits is yet another example of how algorithmic bias can be unintentionally created.
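For the technically inclined, here is a toy sketch of how a rule that never sees gender can still produce a gendered gap. To be loud about it: this is NOT Goldman Sachs’ model, which nobody outside the bank has seen. The applicants are synthetic, and the `is_primary_account_holder` field and the `credit_limit` rule are my own hypothetical stand-ins for any input that happens to correlate with gender.

```python
from statistics import mean

# Synthetic, hypothetical applicants: (gender, credit_score, is_primary_account_holder).
# Gender is recorded here only so we can audit the outcome afterwards.
APPLICANTS = [
    ("F", 780, False),
    ("F", 760, False),
    ("F", 740, True),
    ("M", 720, True),
    ("M", 700, True),
    ("M", 690, False),
]


def credit_limit(credit_score, is_primary_account_holder):
    """Hypothetical limit rule. Note that gender is never an input."""
    base = credit_score * 10
    # Assumed proxy: primary account holders get a multiplier.
    return base * 3 if is_primary_account_holder else base


def mean_by_gender(applicants, value_fn):
    """Group applicants by gender and average value_fn over each group."""
    groups = {}
    for gender, score, primary in applicants:
        groups.setdefault(gender, []).append(value_fn(score, primary))
    return {gender: mean(values) for gender, values in groups.items()}


mean_scores = mean_by_gender(APPLICANTS, lambda score, primary: score)
mean_limits = mean_by_gender(APPLICANTS, credit_limit)

print("mean credit score by gender:", mean_scores)
print("mean credit limit by gender:", mean_limits)
```

In this made-up data the women have the higher average credit score and still end up with the lower average limit, purely because the proxy field is unevenly distributed. “We don’t use gender” and “the outcome is not skewed by gender” are two different claims.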

And then dozens and dozens and dozens and dozens of bloggers piled on commenting about this bias, this algorithm, this “big name” bias story.

Even more intriguing: the aggrieved tweeter’s wife had her credit score magically changed over the weekend. Remarkable how smart algorithms work.

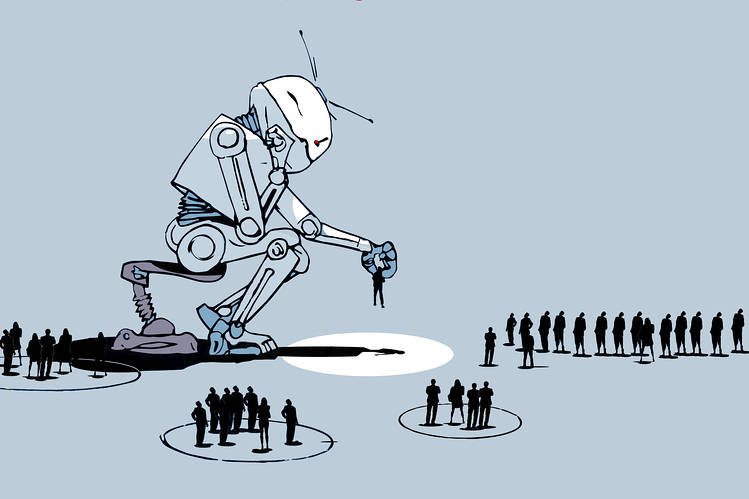

It’s the algorithm part that is more important than the bias angle. The reason is that algorithms embody bias (duh), and now non-technical and non-financial people are going to start asking questions: superficial at first and then increasingly on point. It’s not really good for algorithms when humans obviously can fiddle the outputs, is it?

So now we have two (somewhat) untouchable companies in the spotlight for subjective, touchy-feely things with which neither company is particularly associated. Oh, scores of other bad deeds but not this kind of stuff. The investigation may lead to some interesting information about what’s up in the clubby world of the richest companies in the world. Discrimination maybe? Carelessness? Indifference? Greed? That’s the cynic in me. I mean, what fun: those corporate halls which are often tomb-quiet may resound with stressed voices. “Apple” carts which allegedly sell to anyone may be upset. Cleaning up after the spill may drag the doubles partners from two exclusive companies into a task similar to cleaning sea birds after the Gulf oil spill.

But this issue suddenly gets news traction? Well, the lawyers are salivating. Hoping to jump onto one of those lovely lawyer-powered railroad handcars creeping down the loooooooong line of litigation. Boys and girls, ladies and gents, dear readers … THIS IS A SURPRISE? For a good start on learning why AI bias is hard (impossible) to fix I suggest you start with just these two sources:

• A short one: Karen Hao’s piece in MIT Technology Review.

• A long one: Shoshana Zuboff’s The Age of Surveillance Capitalism

But imagine if Mr Hansson and Mr Wozniak, incensed by how algorithmic bias affected them and their wives personally, had decided to do a little research. They would have encountered the work of scholars (primarily women and persons of color) who’ve been warning us for years why something like Apple/Goldman Sachs would happen, and continually does happen. They would find scholars such as:

• Safiya Umoja Noble and her book Algorithms of Oppression

• Virginia Eubanks and her book Automating Inequality

• Mar Hicks and her book Programmed Inequality

• Ruha Benjamin and her book Race After Technology

• Meredith Broussard and her book Artificial Unintelligence

• Sarah Roberts and her book Behind the Screen: Content Moderation in the Shadows of Social Media

Sarah Roberts’ book is especially illuminating because it explains “the tubes and the pipes” of social media technology. It is a directly related counterpoint to understanding AI as it relates to human labor in the machine. The primary shield against hateful language, violent videos, abuse of women, and every level of online cruelty uploaded by users is not an algorithm. It is people. A structure mostly invisible by design.

And I have merely scratched the surface. These authors and many others have explored these issues with deep thinking, issues that have been going on for some time. All of them explain why there are no “values-free” tools, how “agendas” are built in … and how bias can be expressed by lines of code.

And gents: in your Tweets you say you are REALLY concerned about protecting women against bias?

So why in hell are you not highlighting how child abusers run rampant as tech companies look the other way? Who gives a damn about your wife’s credit rating? As the New York Times detailed in a recent story, social media companies have the technical tools to stop the recirculation of abuse imagery by matching newly detected images against databases of the material. Yet the industry does not take full advantage of any of these tools.

Oh, yeah: tech companies are far more likely to review photos and videos and other files on their platforms for facial recognition, malware detection and copyright enforcement. But “abuse content”? Nope. That raises “significant privacy concerns” they say.

And the coming irony? We cheer because Facebook has announced it wants to eventually encrypt everything on its platforms. Everybody is pushing for encryption. But that encryption will vastly limit detection of abusive content by the (unemployed) technology it has now.

And when deep fakes exploded on the market two and one-half years ago, supercharged by powerful and widely available artificial-intelligence software developed by Google, and these videos were quickly weaponized disproportionately against women … representing a new and degrading means of humiliation, harassment and abuse … where were you, given your stature and your disproportionately high level of power? You were talking about the First Amendment and “their legality hasn’t been tested in court” when you should have been arguing that it’s pure defamation, identity theft and fraud. And you are worried about credit ratings??!!

CONCLUSION

Ok, I have gone a wee bit off-the-rails and outside the Apple/Goldman Sachs issue. But much of this AI bias against women is because women are so underrepresented in technology leadership roles, especially AI leadership roles. Just two commercial examples:

• When Microsoft was upgrading its Xbox gaming system, in which users play with gestures and spoken commands rather than a controller, it was ready to go to market. But just before it did, a woman who worked for the company took the game home to play with her family, and a funny thing happened. The motion-sensing devices worked just fine for her husband, but not so much for her or her children. That’s because the system had been tested on men ages 18 to 35 and didn’t recognize as well the body motions of women and children.

• When Amazon was developing voice recognition tools that rely on A.I., those tools did not recognize higher-pitched voices (there were no women on the team). And its facial recognition technology was far more accurate with lighter-skinned men than with women and, especially, with darker-skinned people (not only no women, but no persons of color on the team). That’s because if white men are used much more frequently to train A.I. software, it will much more easily recognize their voices and faces.

That is what happens when teams aren’t diverse. Women and people of color are fighting many battles in the tech world and in the fast-growing world of artificial intelligence.
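If you want to see the mechanism behind those two examples in miniature, here is a deliberately crude sketch. It is not how Microsoft’s or Amazon’s systems work. Every number is invented, and `fit_pitch_range` is a hypothetical “model” that simply remembers the range of voice pitches it was shown during training.

```python
def fit_pitch_range(training_pitches_hz):
    """Toy 'training': remember the lowest and highest pitch seen."""
    return min(training_pitches_hz), max(training_pitches_hz)


def is_recognized(pitch_hz, pitch_range):
    """Toy 'inference': accept only pitches inside the trained range."""
    low, high = pitch_range
    return low <= pitch_hz <= high


def recognition_rate(pitches_hz, pitch_range):
    """Fraction of test voices the toy model accepts."""
    hits = sum(is_recognized(p, pitch_range) for p in pitches_hz)
    return hits / len(pitches_hz)


# Invented data: the training set contains only lower-pitched voices.
TRAINING_PITCHES_HZ = [95, 105, 110, 120, 130, 140, 150]
TEST_LOWER_PITCHED_HZ = [100, 115, 125, 145]
TEST_HIGHER_PITCHED_HZ = [165, 190, 210, 240]

pitch_range = fit_pitch_range(TRAINING_PITCHES_HZ)

print("lower-pitched voices recognized: ",
      recognition_rate(TEST_LOWER_PITCHED_HZ, pitch_range))
print("higher-pitched voices recognized:",
      recognition_rate(TEST_HIGHER_PITCHED_HZ, pitch_range))
```

Nothing in that code is hostile to higher-pitched voices. It just never met any. Real systems are far more sophisticated, but the principle stands: a model can only learn from what the team thought to put in front of it.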

Oh … hold on. Women do have a major leadership role. One point I forgot, the phenomenon known as the “glass cliff”. When a company stumbles, profits decline, and market share drops, suddenly the chances it will hire a woman as CEO increase. This is the “glass cliff”. Yes, it lacks the simple metaphorical elegance of the “glass ceiling”. And to be fair, it’s also a judgment call whether a company that just hired a woman is truly in a moment of crisis or simply facing normal fluctuations. Still, the women who grab these precarious positions and gamely clean up messes they didn’t make can attest: the glass cliff is real. It’s a slippery slope. There is a recent study out of the Harvard Business School that posits why this happens: that (white) men reject precarious roles, preferring to wait for a better opportunity, while women are more likely to jump at a chance to lead. And the glass cliff isn’t limited to business. It also exists in politics and in high-profile industries like sports.

And as to AI and bias, I think any dream of fixing it is a fool’s game. What most people worry about is whether, when they run the algorithm, some mysterious or unknown input or stimulus pushes the output outside the margin of error without their being aware of it. That goes to the root of what all machine intelligence is about: you’re trying to predict decisions better. If a decision gets distorted in some way, then whatever process that decision is a part of can potentially arrive at an incorrect answer or take a sub-optimal path in the decision tree.

It’s one of those nasty little things: people are aware that human beings have bias in their thinking – we’ve been talking about it for ages. And technologies that are fed by information that is created in the real world, fundamentally could have the same effect. So now the question is: how do you think about that when you’re actually shifting a cognitive task completely into a machine, where you don’t have the same kind of qualitative reaction that human beings will have? We either have to re-create those functions, or try to minimize bias, or choose areas where bias is less impactful.

And if human biases truly are imprinted upon AI algorithms, either subconsciously or through a phenomenon we don’t yet understand, then what’s to stop that same phenomenon from interfering with humans tasked with correcting that bias? Granted, I sound like I borrowed a page from Rod Serling’s “The Twilight Zone”. But I have been thinking about this stuff. But that’s really where any discussion of bias should be: the source of error in neural network algorithms should be treated not as a mathematical factor but as a subliminal influence.

When I was pursuing my AI program at ETH Zurich, a big study we pored over was done by a team of researchers from the Prague University of Economics and Germany’s Technische Universität. They were investigating this phenomenon: specifically, the links between the psychological well-being of AI developers and the inference abilities of the algorithms they create. Their theory was based on Martie Haselton and Daniel Nettle’s research into cognitive bias and how that may simply be “embedded” in our brain and have evolved along with the human species.

The research suggested that the human mind evolved survival strategies over countless generations, all of which culminated in people’s capability to make snap-judgment, rash, risk-averse decisions. Humans learned to leap to conclusions, in other words, when they didn’t have time to think about it. And it echoed the pontification of my head instructor: “Look, the human mind shows good design. But remember: it was designed for fitness maximization, not for truth preservation.”