In Part 1 I addressed the issue of bias in artificial intelligence. I received 102 email comments in response to that post. I will summarize them and issue an amended post with those comments next week.

Here in Part 2, a discussion of the real problems with machine learning, with an emphasis on its application in the legal technology ecosystem.

29 July 2018 (Serifos, Greece) – We’re now four or five years into the current explosion of machine learning (ML), and pretty much everyone has heard of it. It’s not just that startups are forming every day or that the big tech platform companies are rebuilding themselves around it – everyone outside tech has read the The Economist or Financial Times or Business Insider or Scientific American or [insert magazine title here] cover story, and many big companies have some projects underway. We know this is a “Next Big Thing”. Because “they” have told us it is.

Last month in his bi-monthly column, Benedict Evans (head of mobile and digital media strategy and analysis for Andreessen Horowitz, the private American venture capital firm) tried to offer some perspective and noted:

I still don’t think we have a settled sense of quite what machine learning means – what it will mean for tech companies or for companies in the broader economy, how to think structurally about what new things it could enable, or what machine learning means for all the rest of us, and what important problems it might actually be able to solve.

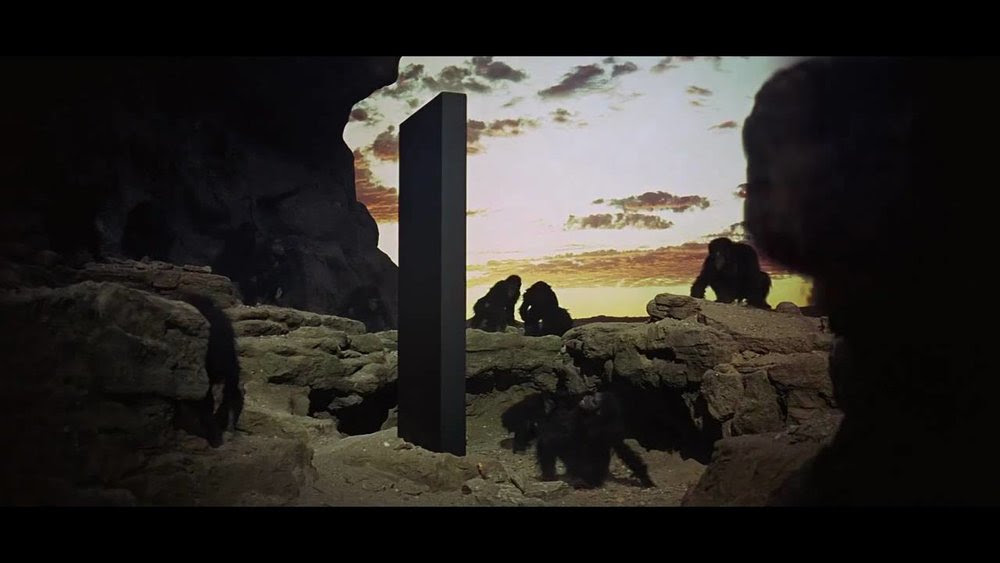

And the problem isn’t helped by the term “artificial intelligence”, which tends to end any conversation as soon as it’s begun. As soon as we say “AI”, it’s as though the black monolith from the beginning of 2001 has appeared, and we all become apes screaming at it and shaking our fists. You can’t analyze “AI”.

But listen to pundits like Erik Brynjolfsson and Andrew McAfee and you will hear that AI/ML will be the engine for massive economic growth. Why do we not see it in productivity statistics? Their answer is that its breakthroughs haven’t yet had time to deeply change how work gets done in call centres, hospitals, banks, utilities, supermarkets, trucking fleets, logistics management and other businesses. Plus we may not have quite figured out how to measure the effect of ML. Oh, and we do a terrible job of predicting supply chain effects, which is where all of this stuff really counts.

Nah. Technology may be the catalyst, but technology alone will not bring a productivity boom. We’re talking about the need to invent new business models, workers who need to develop new skills … and policymakers who need to update (HA!) rules and regulations. And I am understating the enormous challenges that must be overcome for the full potential of technology to be realized. What if entrepreneurs realize far greater rewards by exploiting technology and governance loopholes than by sharing prosperity? What if workers do not want to develop new skills, or cannot, or do not do so quickly enough? What if policymakers fail to update rules and regulations? What if they do so in ways that actually harm competition and instead serve entrenched interests? What if too few people understand technology well enough to form well-reasoned positions? It is much more complex than these dreamers opine.

And will all this AI/ML cost jobs, or create jobs? Those predictions are a mess. Last week PwC, an accountancy firm, published a study in which it reckoned AI and machine learning will create 7.2m jobs in the UK, more than the 7m displaced by automation. But The Economist cover story three weeks ago? A “visionary essay” describing a world of work … where companies have no employees.

These join the collection of long-range forecasts which sadly never expose their models to wide scrutiny. And this stuff requires you to do your homework. You need to understand it, to examine it.

Taken at face value, the PwC study suggests a massive dislocation, both geographically and in terms of skills, equality, identity and psychology. The sectors which gain the most roles (healthcare and technical) are vastly different from those which get slammed (transport and manufacturing). London, naturally, does well; the Midlands and the North, poorly. Seven million people losing their jobs in 20 years is not a pretty picture, and one which will require serious policy interventions, especially at a geographical level, to avoid a fractious social response.

As if 20-year forecasts have much validity. But without models to examine, these are all just clickbait … and have been retweeted a million times. My hunch is the timing is way off. Things may move faster and hit harder, especially in a UK economy enfeebled by Brexit, and alongside the growth of work co-ordination platforms that put pressure on the quality of employment most workers can enjoy.

And what of the effect of automation/machine learning in emerging markets? For two centuries, countries have used low-wage labor to climb out of poverty. What will happen when robots take those jobs? During my summer sojourn here in Greece I was at the Mykonos “unconference”, where Chris Larson (a writer for Foreign Policy magazine) noted that he had spoken with the owners of garment factories in Bangladesh and India who described the pressure their Western fashion customers had put on them to switch from manual labor to (mostly Chinese-made) automated machines. They hadn’t yet made any redundancies … job losses would likely be met by “sabotage and riots”. Humans embrace technology that helps and extends them. They don’t take so kindly to technology that replaces them.

Side note: Pascal Garcia, one of the organizers behind the Mobile World Congress, was also here and he noted that in Southeast Asia and Sub-Saharan Africa many workers at factories are forming new collective labor organizations, organized using messaging platforms, albeit fragmented by nationality. Ah, technology.

And let me say this: watching the violence in Barcelona perpetrated by taxi drivers who are against the granting of licences to Uber and Cabify (at a very low ratio of one new licence per 30 official cabs) leaves me thinking a lot of people are going to try to fight technology. But we are all guilty of lapping up this stuff without examining it. We have a tendency to revere technologists who solve the world’s woes by recasting complex social situations as neatly defined problems. It’s our obsession with dataism, the quantitative approach to solving all problems, ignoring subtlety and avoiding analytical thought.

And the really, really dark side? As if we are not all inured to reports of the “dark side” and “disturbing” tech stories? Several medical research facilities have begun generational studies. Why? They believe that within a generation we might see widespread early-onset dementia, because the apps we use for everything from navigating traffic to selecting a restaurant to “finding something fun to do” are depriving our brains of the exercise they’ve become used to over millions of years. That 9% of our brain that is still “the Reptilian Brain” is being snuffed out.

This is not an attempt to denigrate machine learning. We just need some perspective. Machine learning lets us find patterns or structures in data that are implicit and probabilistic (hence “inferred”) rather than explicit, and that previously only people, not computers, could find. It addresses a class of questions that were previously “hard for computers and easy for people”, or, perhaps more usefully, “hard for people to describe to computers”. And I have seen some very, very cool (OK, and sometimes worrying, depending on your perspective) speech, vision and analytical demos across the U.S., Europe and the Far East.

What burns me …

We need to end the most common misconception that comes up in talking about machine learning: that it is in some way a single, general purpose thing, on a path to HAL 9000, and that Google or Microsoft have each built *one*, or that Google “has all the data”, or that IBM has an actual thing called “Watson”. As writers and thinkers like Ben Evans and Amanda Ripley and Ben Alright have noted, this is always the mistake we make looking at automation: with each wave of automation, we imagine we’re creating something anthropomorphic or something with general intelligence. The latest? Sophia …

Yes, Sophia is quite entertaining. But she’s something of a persona non grata in the AI community. Her creators exaggerate the bot’s abilities, pretending that it’s “basically alive” rather than just a particularly unnerving automaton. For serious AI researchers, she has been an annoyance. Giving AI a human platform, and over-humanizing the technology in general, creates more problems than it solves. It also presents the global community with a false sense of what AI actually is, what the technology can do, and why people dedicate their lives to building AI platforms.

But hey, not to worry. For a simplistic event like Legaltech, Sophia would be fine. They will surely make Sophia the “keynote speaker” some day.

In the 1920s and 30s we imagined steel men walking around factories holding hammers, and in the 1950s we imagined humanoid robots walking around the kitchen doing the housework. We didn’t get robot servants – we got …

Yes, washing machines are robots, but they’re not “intelligent”. They don’t know what water or clothes are. Moreover, they’re not general purpose even in the narrow domain of washing: you can’t put dishes in a washing machine, nor clothes in a dishwasher (or rather, you can, but you won’t get the result you want). They’re just another kind of automation, no different conceptually to a conveyor belt or a pick-and-place machine. To quote Ben Evans, who uses the washing machine analogy in several of his presentations:

Machine learning lets us solve classes of problem that computers could not usefully address before, but each of those problems will require a different implementation, and different data, a different route to market, and often a different company. Each of them is a piece of automation. Each of them is a washing machine.

And that is the key to all of this: thinking about sets of ML tools as a procession of types of data and types of questions. François Chollet (who is pretty much head of deep learning at Google, and who introduced me to Python and R as tools for doing deep e-discovery analytics) notes:

- Machine learning may well deliver better results for questions that you are already asking about data you already have, simply as an analytic or optimization technique.

- Machine learning also lets you ask new questions of that data. For example, in e-discovery the technology already exists for a lawyer to search for “angry” emails, “anxious” emails, or even aberrant clusters of documents that could be the key to a case, levels above plain vanilla keyword searches.

- Taking these two points together: when I was at DLD Tel Aviv last September I saw a marvelous piece of artificial intelligence being developed by Google for the e-discovery market (it is still in beta), a text analysis technology that data mines a database and pulls snippets out of related documents, in effect almost creating a narrative. Granted, several e-discovery vendors are developing similar technology, albeit less sophisticated (they obviously lack the resources that Google can throw at a project like this).

Machine learning in legal technology

As an example of where things need perspective … yet ending with some very positive developments … a brief chat about ML in legal tech. There was a recent competition at Columbia University Law School between human lawyers and their artificial counterparts, in which both read a series of non-disclosure agreements with loopholes in them. The claim:

The AI [LawGeex AI] found 95 per cent of them, and the humans 88 per cent. But the human took 90 minutes to read them. The AI took 22 seconds. Game, set and match to the robots.

Well you know what? That’s great and all, but what isn’t accounted for is:

- The AI software required $21.5 million in development funding

- There were dozens of software specialists required to develop the specialized AI

- The software needed to be trained on tens of thousands of NDAs prior to this matchup, which takes far, far longer than 22 seconds

- Most importantly, this software has been trained solely to detect issues in legal contracts, whereas a human lawyer has a much wider breadth of knowledge.

So it’s not exactly “game, set and match to the robots”. And at the end of the day a single human lawyer does not need millions of dollars in development costs for the simple task of reviewing contracts. On the flip side, if LawGeex wants to expand its software to a different aspect of legal practice, such as writing an affidavit, the software must be trained all over again just to reach a level of competence comparable to that of a human lawyer.

And yes, I know the counterargument: this software is now permanent, can be built upon, and will (may?) keep improving. A human lawyer once “trained up” has pretty much reached her/his limit. When she/he leaves/retires/dies someone else needs to be trained up, which isn’t (exactly) the case with software.

And perhaps the NDA example for legal work is poorly selected. NDAs are typically boilerplate and mostly generated by paralegals (or even laypeople) from templates with blank spaces provided for customization.

A better example is technology assisted review (TAR), a process (and this is a simplification) in which lawyers have software classify documents based on input from expert reviewers, in an effort to expedite the organization and prioritization of the document collection. It was not that long ago that a new philosophy emerged in the e-discovery industry: to view e-discovery as a science, something repeatable, predictable and efficient, with higher quality results, and not as an art or something recreated with every project. Underpinning this transformation was the emergence of new intelligent technology: predictive coding, smarter review tools, and financial tools for managing a portfolio of cases. This approach was supposed to deliver real results in controlling the costs of litigation.
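To make the core idea of predictive coding concrete: an expert codes a seed set of documents as relevant or not, a classifier learns from those calls, and the unreviewed documents are ranked so the likeliest-relevant are reviewed first. The sketch below assumes a hand-rolled multinomial naive Bayes classifier with add-one smoothing; commercial TAR platforms use their own (often proprietary) algorithms, and the seed documents and function names here are hypothetical toy illustrations.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())

def train(seed_set):
    """seed_set: (text, is_relevant) pairs coded by the expert reviewer.
    Returns per-class word counts and per-class document counts."""
    word_counts = {True: Counter(), False: Counter()}
    doc_counts = Counter()
    for text, is_relevant in seed_set:
        word_counts[is_relevant].update(tokenize(text))
        doc_counts[is_relevant] += 1
    return word_counts, doc_counts

def relevance_score(text, model):
    """Log-odds that a document is relevant, via multinomial naive Bayes
    with add-one (Laplace) smoothing. Words unseen in the seed set are ignored."""
    word_counts, doc_counts = model
    vocab = set(word_counts[True]) | set(word_counts[False])
    total_docs = sum(doc_counts.values())
    log_prob = {}
    for label in (True, False):
        # Smoothed class prior, so an empty class never yields log(0)
        score = math.log((doc_counts[label] + 1) / (total_docs + 2))
        denom = sum(word_counts[label].values()) + len(vocab)
        for tok in tokenize(text):
            if tok in vocab:
                score += math.log((word_counts[label][tok] + 1) / denom)
        log_prob[label] = score
    return log_prob[True] - log_prob[False]

def rank_for_review(unreviewed, model):
    """Order unreviewed documents so the likeliest-relevant are reviewed first."""
    return sorted(unreviewed, key=lambda doc: relevance_score(doc, model), reverse=True)

# Usage with hypothetical toy data
seed = [
    ("price fixing agreement with competitor on market split", True),
    ("meet competitor to agree prices for the northern market", True),
    ("lunch menu for the office party", False),
    ("quarterly holiday schedule for staff", False),
]
model = train(seed)
ranked = rank_for_review(["holiday party menu", "call competitor about prices"], model)
print(ranked[0])  # call competitor about prices
```

The classifier is the easy part. Everything depends on how the seed set is drawn and who codes it: garbage in, garbage out.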

But as with all machine learning programs: garbage in, garbage out. Right now, in four cities in the U.S., one of the largest e-discovery document reviews we’ve seen in a while is being conducted. It should have been a brilliant use of predictive coding. There are (literally) millions of documents to be reviewed. But predictive coding failed. It was botched, it could not be rescued, and “brute force” linear review was employed … much to the delight of the 800+ contract attorneys subsequently hired.

I cannot go into full details because it will be the subject of a law journal article this fall. Via my company The Posse List (which helps law firms and staffing agencies find attorneys to staff e-discovery document reviews), several of the attorneys on this particular project provided the magazine with detailed journals of the process, and we are all under an NDA (surprisingly, something the law firm did not ask them to sign for the project). But the main reasons for the failure will be well known to the practitioners of this art. The training set and procedure “went askew”:

(1) the random selection size was too small for statistically meaningful calculations;

(2) the random selection was too small for a low prevalence collection (the last for complete training selections);

(3) the process really required days of document review work from a high-level Subject Matter Expert, and she was absent without notice.
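Why points (1) and (2) matter can be shown with the standard arithmetic. The project's actual numbers are not public, so the figures below are illustrative assumptions only. The sketch uses the textbook normal-approximation sample-size formula for estimating a proportion, n = z^2 * p * (1 - p) / E^2 (z = 1.96 for 95% confidence, E the margin of error, ignoring the finite-population correction, which matters little for collections of millions), plus the plain binomial fact that at low prevalence p, a random sample of n documents contains about n * p relevant ones and contains none at all with probability (1 - p)^n.

```python
import math

Z_95 = 1.96  # z-score for a two-sided 95% confidence level

def sample_size(margin, prevalence=0.5, z=Z_95):
    """Random sample size to estimate a proportion within +/- margin
    (normal approximation, no finite-population correction).
    prevalence=0.5 is the conservative worst case."""
    n = z ** 2 * prevalence * (1 - prevalence) / margin ** 2
    return math.ceil(n - 1e-9)  # tiny epsilon absorbs floating-point noise

def expected_relevant(n, prevalence):
    """Expected number of relevant documents in a random sample of n."""
    return n * prevalence

def prob_no_relevant(n, prevalence):
    """Probability that a random sample of n contains zero relevant documents."""
    return (1 - prevalence) ** n

def sample_needed_for_examples(k, prevalence):
    """Random documents needed to expect k relevant training examples."""
    return math.ceil(k / prevalence - 1e-9)

print(sample_size(0.05))                         # 385
print(sample_size(0.02))                         # 2401
print(round(prob_no_relevant(100, 0.01), 3))     # 0.366
print(sample_needed_for_examples(50, 0.01))      # 5000
```

In words: at an assumed 1% prevalence, a 100-document random sample has roughly a one-in-three chance of containing no relevant documents at all, and you would need around 5,000 randomly drawn documents just to expect 50 relevant training examples.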

So time being of the essence, “brute force” linear review was employed.

This is NOT an indictment of predictive coding. Predictive coding will advance because of the collision of two simple, major trends: (1) the economics of traditional, linear review have become unsustainable while (2) the early returns from those employing predictive coding are striking.

But as one of the leading lights in predictive coding told me:

Look, there are far, far too few Subject Matter Experts in predictive coding and in the hands of the unwashed … well, it yields disaster. As in your example.

As I said, I will end on a positive note. Machine learning is not just helping us find things we can already recognize, but also things that humans can’t recognize, or levels of pattern, inference or implication that might otherwise be impossible to discern. That’s why some legal technology vendors are moving beyond predictive coding. Yes, go to Legaltech and it seems every e-discovery vendor does the exact same thing: find your stuff, all in the same way. The process has been commoditized. Just hand them your database.

But there is more. How do you find what is not there? How do you find what is hidden? Last year my e-discovery team finished an internal investigation for a Fortune 100 company operating in Europe. In-your-face issues: fraud, collusion with competitors, carving up markets, etc. All hidden via intranets, Messenger and chat bots. Few emails … and those had been “cleansed”: “OK, we found an email. But where is the expected reply to that email?” We used ML platforms built on Python and R (a trend in the e-discovery world) because no existing e-discovery software would have surmised what was not there. But we also stumbled upon it via “brute force”, because my team had to do an “eye-to-eye” review of hundreds of documents saved on hard drives.
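The “where is the expected reply?” question can be made partly mechanical: rebuild the threads, then flag messages that look as if they expect an answer but have none anywhere in the collection. This is a simplified illustration, not a reconstruction of the platforms described above. The `Message` fields and the `expects_reply` keyword heuristic are hypothetical, and a real collection would need header parsing, deduplication and much better heuristics.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Message:
    msg_id: str
    sender: str
    body: str
    in_reply_to: Optional[str] = None  # msg_id of the message this one answers

def expects_reply(msg: Message) -> bool:
    """Hypothetical heuristic: a question or an explicit request for a response."""
    text = msg.body.lower()
    return "?" in text or "please confirm" in text or "let me know" in text

def missing_replies(messages: List[Message]) -> List[Message]:
    """Messages that seem to expect an answer but have no reply in the collection."""
    answered = {m.in_reply_to for m in messages if m.in_reply_to}
    return [m for m in messages if expects_reply(m) and m.msg_id not in answered]

# Usage with hypothetical data
msgs = [
    Message("1", "a@corp.example", "Can we agree on the northern region split?"),
    Message("2", "b@corp.example", "Agreed.", in_reply_to="1"),
    Message("3", "a@corp.example", "Please confirm the new price list"),
    Message("4", "c@corp.example", "Team lunch moved to Friday"),
]
print([m.msg_id for m in missing_replies(msgs)])  # ['3']
```

The gaps it surfaces are leads, not proof: a missing reply may have been deleted, may have gone out over chat, or may never have existed.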

Yes, we all know that AI can create false audio, false emails, etc., which is why I think AI is going to make e-discovery harder and harder, and the “truth” far easier to hide.

But e-discovery execution will get better. Already several e-discovery AI laboratories are succeeding at the test phases of “story telling”: using natural language understanding technology and a form of “early case assessment” technology, artificial intelligence experts have developed algorithms that can parse structured and unstructured data sets, enabling computers, in effect, to “unite” relationships and “tell a story”.

CONCLUSION

As technology has woven its magic, making our transactions, communications and the application of human intelligence to our various disciplines faster, smarter and global, it has altered our traditional roles. It also leaves behind a data trail that companies and their lawyers must not only grapple with and manage but also exploit for commercial advantage. As Ron Friedmann and others have noted, a transformation is taking place in legal services as we adjust to the commercial reality we find ourselves in, and we are likely to see new business structures, legal service partnerships, consulting services and cost models emerging.

The problem is that our technology-driven society has deemed that any human activity that can be adequately duplicated by computer code and related data, matched against the performance of a human accomplishing the activity, falls within the purview of so-called “artificial intelligence”. Fundamentally, there is no difference between so-called white-collar work such as rules-based accounting, parsing legal documents or medical diagnosis, accomplished by a computer and its programming, and the range of controls that regulate physical machinery.

Yes, physical machinery is far superior to human labour in accomplishing the same repetitive tasks. Human-written computer code, run through a computer, therefore “should” be far superior to humans in rule-based “office” tasks.

But machine learning is a brittle system. The areas where machine learning is trying to be good require enormous amounts of data and quantitative comparisons (is that an apple or a face? can I make a medical prognosis? are these documents all relevant/responsive?). But show it something it has never seen before … or screw up the training set … and it won’t have a clue. As I noted in Part 1, if you train an ML system to play “Go” on a 19 x 19 board and then add an extra row and column, it won’t be able to extrapolate how to play. Humans beat it hands down. In some cases, it cannot even determine a move.

And as for rare problems with little data, and novel situations, which actually make up most of life … well, we need to work on that. Yes, there are chemical and metabolic limits to the size and processing power of “wet” organic brains. And perhaps we’re close to these already. But while there seem to be no such limits to constrain silicon-based computers (still less, perhaps, quantum computers), and their potential for further development could be as dramatic as the evolution from monocellular organisms to humans, we need to slow down just a bit. Abstract thinking by biological brains has underpinned the emergence of all culture and science. It will continue to do so for a while. But let me get back to you on that.