26 May 2018 (Washington, DC) – About six years ago, Nick Patience (one of the founders of 451 Research) invited me to attend the annual 451 Hosting & Cloud Transformation Summit in London. I had known Nick for a number of years and he had invited me to several 451 events where the knowledge I gained informed my work and my blog posts.

The Cloud Summit was different. Nick introduced me to Brian Cox, who was the keynote speaker. Brian, as most of you know, is Professor of Particle Physics (physics was my minor in college) and one of the leaders on the ATLAS experiment at the Large Hadron Collider (LHC) at CERN in Geneva. He is a physics star turned pin-up professor whose several series on the solar system and science have sent his career into orbit. Universities that have seen a surge in applications for physics programs over the last three years call it the “Brian Cox effect”. At the conference … populated by folks who certainly work with large amounts of data … Brian dazzled the crowd with real data loads: how about 40 terabytes created – per second. That was how much data the LHC, the world’s largest and highest-energy particle accelerator, was throwing off at the time.

That introduction led to an email exchange and an invitation to visit CERN. It rekindled my interest in physics and led to a whole string of further introductions at the Swiss Federal Institute of Technology (ETH), the Swiss AI Lab, the Delft University of Technology, the Grenoble Institute of Technology, etc., etc. It also led to the start of my AI/informatics program at ETH, which I will complete this year.

CERN and the LHC depend on a massive computer grid, as does the global network of scientists who use LHC data. CERN scientists have been teaching an AI system to protect the grid from cyber threats using machine learning.

But I was back there recently for something different. Physicists at the world’s leading atom smasher are calling for help. In the next decade, they plan to produce up to 20 times more particle collisions in the LHC than they do now, but current detector systems aren’t fit for the coming deluge. So a group of LHC physicists has teamed up with computer scientists to launch a competition to spur the development of artificial-intelligence techniques that can quickly sort through the debris of these collisions. Researchers hope these techniques will advance the experiments’ ultimate goal: revealing fundamental insights into the laws of nature.

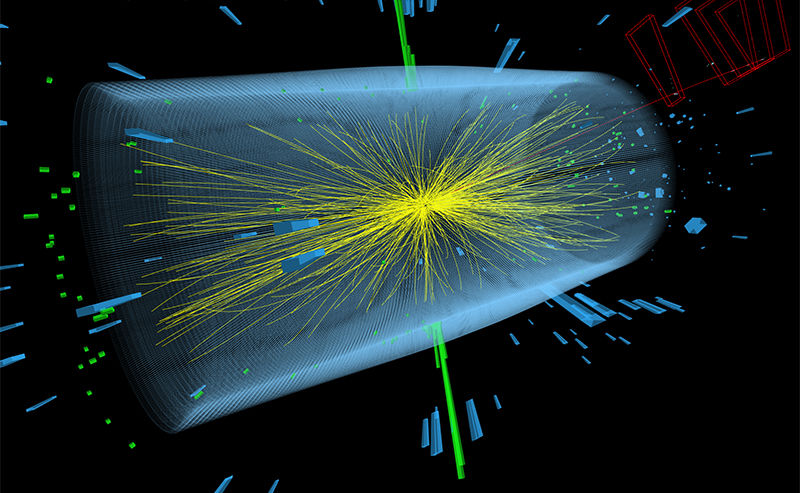

At the LHC, two bunches of protons collide head-on inside each of the machine’s detectors 40 million times a second. Every proton collision can produce thousands of new particles, which radiate from a collision point at the centre of each cathedral-sized detector. Millions of silicon sensors are arranged in onion-like layers and light up each time a particle crosses them, producing one pixel of information every time. Collisions are recorded only when they produce potentially interesting by-products. When they do, the detector takes a snapshot that might include hundreds of thousands of pixels from the piled-up debris of up to 20 different pairs of protons. Because particles move at or close to the speed of light, a detector cannot record a full movie of their motion.

From this mess, the LHC’s computers reconstruct tens of thousands of tracks in real time, before moving on to the next snapshot. “The name of the game is connecting the dots,” said a physicist.

The yellow lines depict reconstructed particle trajectories from collisions recorded by CERN’s CMS detector.

Credit: CERN
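To make “connecting the dots” concrete, here is a deliberately naive sketch. It assumes a toy detector in which every hit is reduced to a layer number and an azimuthal angle phi, and it links hits greedily from the innermost layer outward, taking the unused hit closest in phi on each successive layer. The `Hit` class, the `max_dphi` cut and the greedy strategy are hypothetical illustrations of the idea, not the LHC’s actual reconstruction software, which is far more sophisticated.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Hit:
    hit_id: int
    layer: int   # 0 = innermost detector layer (toy geometry, an assumption)
    phi: float   # azimuthal angle of the hit, in radians


def angular_distance(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, handling wrap-around at 2*pi."""
    d = (a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def connect_the_dots(hits: list[Hit], max_dphi: float = 0.05) -> list[list[int]]:
    """Greedy layer-by-layer linking (hypothetical toy algorithm).

    Each track starts at an innermost-layer hit; on every later layer it takes the
    unused hit closest in phi to the track's last hit, stopping when none is
    within max_dphi."""
    layers: dict[int, list[Hit]] = {}
    for h in hits:
        layers.setdefault(h.layer, []).append(h)

    used: set[int] = set()
    tracks = []
    for seed in layers.get(0, []):
        track = [seed]
        used.add(seed.hit_id)
        for layer in sorted(k for k in layers if k > 0):
            candidates = [h for h in layers[layer] if h.hit_id not in used]
            if not candidates:
                break
            best = min(candidates, key=lambda h: angular_distance(h.phi, track[-1].phi))
            if angular_distance(best.phi, track[-1].phi) > max_dphi:
                break  # nothing close enough: end this track
            track.append(best)
            used.add(best.hit_id)
        tracks.append([h.hit_id for h in track])
    return tracks


# Two well-separated, slightly curving particles crossing three layers.
hits = [Hit(1, 0, 0.10), Hit(2, 0, 1.00), Hit(3, 1, 0.12),
        Hit(4, 1, 1.03), Hit(5, 2, 0.14), Hit(6, 2, 1.06)]
print(connect_the_dots(hits))  # [[1, 3, 5], [2, 4, 6]]
```

The greedy choice is what makes this easy to follow and also what makes it fragile: once hundreds of overlapping collisions put many hits close together in phi, an early wrong pick cannot be undone.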

After future planned upgrades, each snapshot is expected to include particle debris from 200 proton collisions. Physicists currently use pattern-recognition algorithms to reconstruct the particles’ tracks. Although these techniques would still be able to work out the paths after the upgrades, they would be too slow. Without major investment in new detector technologies, LHC physicists estimate that the collision rates will exceed current capabilities by at least a factor of 10.
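A back-of-the-envelope illustration of why speed becomes the bottleneck, under an assumption that is mine and not CERN’s: suppose the number of hits on each detector layer grows in proportion to the number of overlapping collisions $N$, and a naive pattern-recognition step tries every combination of one hit from each of three layers as a possible track seed, so its running time $T(N)$ grows as $N^3$. Going from roughly 20 to 200 collisions per snapshot would then multiply that work by

$$
\frac{T(200)}{T(20)} \approx \left(\frac{200}{20}\right)^{3} = 10^{3}.
$$

Production tracking software prunes most of these combinations with geometric cuts, so this likely overstates the real slowdown; the point is only that the cost grows much faster than the number of collisions.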

Researchers suspect that machine-learning algorithms could reconstruct the tracks much more quickly. To help find the best solution, LHC physicists teamed up with computer scientists to launch the TrackML challenge. For the next three months, data scientists will be able to download 400 gigabytes of simulated particle-collision data (the pixels produced by an idealized detector) and train their algorithms to reconstruct the tracks. Participants will be evaluated on how accurately they do this. The top three performers in this phase, hosted by the Google-owned company Kaggle, will receive cash prizes of US$12,000, $8,000 and $5,000. A second competition will then evaluate algorithms on speed as well as accuracy.
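The exact TrackML metric is defined by the organisers. As a rough sketch of what “accuracy” can mean for track reconstruction, the function below assumes a simplified form of a double-majority matching rule with every hit weighted equally (the official score weights hits differently). A reconstructed track earns credit for its shared hits only if more than half of its hits come from one true particle and it also contains more than half of that particle’s hits. The function name and the dictionary inputs are hypothetical.

```python
from collections import Counter


def simplified_trackml_score(truth: dict[int, int], tracks: dict[int, int]) -> float:
    """Unweighted, simplified track-reconstruction score (illustrative assumption).

    truth:  hit_id -> true particle_id
    tracks: hit_id -> reconstructed track_id (hits left out count as unassigned)
    Returns the fraction of all hits that sit in a track passing the double-majority test."""
    particle_sizes = Counter(truth.values())

    # Group hit ids by the reconstructed track they were assigned to.
    track_hits: dict[int, list[int]] = {}
    for hit_id, track_id in tracks.items():
        track_hits.setdefault(track_id, []).append(hit_id)

    good_hits = 0
    for hits in track_hits.values():
        # Which true particle contributed the most hits to this track?
        particle, n_shared = Counter(truth[h] for h in hits).most_common(1)[0]
        # Double majority: >50% of the track from that particle AND
        # >50% of that particle's hits captured by the track.
        if 2 * n_shared > len(hits) and 2 * n_shared > particle_sizes[particle]:
            good_hits += n_shared
    return good_hits / len(truth)


truth = {1: 10, 2: 10, 3: 10, 4: 20, 5: 20, 6: 20}
perfect = {1: 0, 2: 0, 3: 0, 4: 1, 5: 1, 6: 1}
one_hit_stolen = {1: 0, 2: 0, 3: 1, 4: 1, 5: 1, 6: 1}
print(simplified_trackml_score(truth, perfect))  # 1.0
print(round(simplified_trackml_score(truth, one_hit_stolen), 3))  # 0.833
```

Because a track must hold more than half of a particle’s hits to match it, at most one track can be credited per particle, so splitting a particle into fragments is penalised rather than rewarded.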

Such competitions have a long tradition in data science, and many young researchers take part to build up their CVs; ranking well in these challenges is extremely important. Perhaps the most famous of these contests was the 2009 Netflix Prize. The entertainment company offered US$1 million to whoever worked out the best way to predict which films its users would like to watch, based on their previous ratings. TrackML isn’t the first challenge in particle physics, either: in 2014, teams competed to ‘discover’ the Higgs boson in a set of simulated data (the LHC discovered the Higgs, long predicted by theory, in 2012). Other science-themed challenges have involved data on anything from plankton to galaxies.

From the computer-science point of view, the Higgs challenge was an ordinary classification problem. But the fact that it was about LHC physics added to its lustre. That may help to explain the challenge’s popularity: nearly 1,800 teams took part, and many researchers credit the contest for having dramatically increased the interaction between the physics and computer-science communities.

TrackML is “incomparably more difficult”, said a physicist. In the Higgs case, the reconstructed tracks were part of the input, and contestants had to do another layer of analysis to “find” the particle. In the new problem, contestants have to construct thousands of helix arcs (the shape of the decay products’ tracks) from roughly 100,000 data points.
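Why helix arcs? A charged particle moving through a detector’s uniform solenoid magnetic field spirals around the field direction, so viewed end-on (in the plane transverse to the beam) each track is an arc of a circle whose radius grows with the particle’s momentum. A minimal sketch of that geometry, assuming perfect hits from a single particle in the transverse plane: the algebraic (Kasa) least-squares circle fit below recovers the arc’s centre and radius, and the standard rule of thumb p_T ≈ 0.3 · B · R (GeV, tesla, metres, unit charge) turns the radius into a transverse momentum. The 2-tesla default and the function names are my own illustrative choices, not anything specified by the TrackML challenge, and real track fitters must also handle noise, material effects and the motion along the beam axis.

```python
import numpy as np


def fit_circle(x: np.ndarray, y: np.ndarray) -> tuple[float, float, float]:
    """Algebraic (Kasa) least-squares circle fit.

    Solves x^2 + y^2 + D*x + E*y + F = 0 for D, E, F (linear in the unknowns),
    then converts to centre (xc, yc) and radius."""
    A = np.column_stack([x, y, np.ones_like(x)])
    b = -(x**2 + y**2)
    (D, E, F), *_ = np.linalg.lstsq(A, b, rcond=None)
    xc, yc = -D / 2, -E / 2
    radius = np.sqrt(xc**2 + yc**2 - F)
    return float(xc), float(yc), float(radius)


def transverse_momentum_gev(radius_m: float, b_field_tesla: float = 2.0) -> float:
    """Rule of thumb p_T ~= 0.3 * B * R for a singly charged particle.
    The 2 T default is an illustrative assumption, not a TrackML parameter."""
    return 0.3 * b_field_tesla * radius_m


# Synthetic hits on a 5 m-radius arc that starts at the collision point (0, 0).
t = np.linspace(np.pi, np.pi - 0.5, 20)
x, y = 5 + 5 * np.cos(t), 5 * np.sin(t)
xc, yc, r = fit_circle(x, y)
print(round(r, 6))                           # 5.0
print(round(transverse_momentum_gev(r), 6))  # 3.0
```

The algebraic fit is chosen here because it reduces to one linear least-squares solve; it is a textbook technique, named plainly, and not a claim about what any LHC experiment uses in production.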

The winning technique might end up resembling those used by the program AlphaGo, which made history in 2016 when it beat a human champion at the complex game of Go. In particular, they might use reinforcement learning, in which an algorithm learns by trial and error on the basis of ‘rewards’ that it receives after each attempt.
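Reinforcement learning in its very simplest form can be sketched with a multi-armed bandit: an agent repeatedly picks one of several actions, receives a reward, and gradually shifts toward whichever action has paid off best, while still exploring now and then. This epsilon-greedy example is my own toy illustration of learning from rewards by trial and error. It is not how AlphaGo works (AlphaGo combined deep neural networks with tree search), it says nothing about how a TrackML entry would be built, and the reward probabilities are made up.

```python
import random


def epsilon_greedy(reward_probs: list[float], steps: int = 5000,
                   epsilon: float = 0.1, seed: int = 0) -> list[float]:
    """Learn by trial and error which action pays off best (toy bandit).

    Each action returns reward 1 with its hidden probability, else 0.
    Returns the agent's estimated mean reward for every action."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    counts = [0] * n_actions
    estimates = [0.0] * n_actions  # running mean reward per action

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean update: estimate += (reward - estimate) / n
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print(best, [round(e, 2) for e in estimates])
```

With these settings the agent should settle on the third action, whose estimated reward ends up close to its hidden value of 0.8; the occasional random exploration is what lets it discover that action in the first place.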

Other physicists are also beginning to consider still largely untested technologies, such as neuromorphic computing and quantum computing.