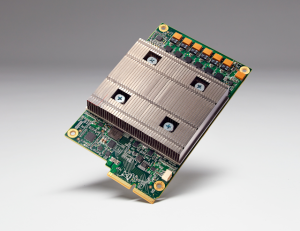

Tensor Processing Unit board

19 May 2016 – A few years ago at a Google AI workshop in Paris I learned about a technology that would become Google’s Tensor Processing Unit, or TPU, a chip that advances machine learning capability by about three hardware generations. Google had begun this stealth project several years earlier to see what they could accomplish with their own custom accelerators for machine learning applications.

The result? The TPU, a custom ASIC they built specifically for machine learning — and tailored for TensorFlow. They have been running TPUs inside their data centers for more than a year, and have found them to deliver an order of magnitude better-optimized performance per watt for machine learning. This is roughly equivalent to fast-forwarding technology about seven years into the future (three generations of Moore’s Law).
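The arithmetic behind that comparison can be checked with a short sketch. It assumes that each Moore’s Law generation doubles performance per watt, which is my reading of the claim rather than something Google spells out, and the function names are purely illustrative.

```python
# Assumption: one Moore's Law "generation" doubles performance per watt.

def cumulative_gain(generations, factor_per_generation=2):
    """Improvement factor after a number of generations."""
    return factor_per_generation ** generations


def years_per_generation(total_years, generations):
    """Average length of one generation implied by the quoted figures."""
    return total_years / generations


gain = cumulative_gain(3)            # three generations, as quoted above
pace = years_per_generation(7, 3)    # seven years, as quoted above

print(f"3 generations -> {gain}x improvement")
print(f"about {pace:.1f} years per generation")
```

Under that assumption, three doublings give an 8x gain, which is close to the order of magnitude cited, at a pace of a little over two years per generation.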

TPU is tailored to machine learning applications, allowing the chip to be more tolerant of reduced computational precision, which means it requires fewer transistors per operation. Because of this, they can squeeze more operations per second into the silicon, use more sophisticated and powerful machine learning models and apply these models more quickly, so users get more intelligent results more rapidly. A board with a TPU fits into a hard disk drive slot in their data center racks.
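To make the reduced-precision idea concrete, here is a minimal sketch of a dot product computed with small integers instead of floating-point numbers. The 8-bit width, the single shared scale factor, and the helper names `quantize` and `quantized_dot` are all assumptions for illustration; nothing here describes how the TPU itself is built.

```python
# Hypothetical illustration: 8-bit linear quantization of a dot product.
# The bit width and the scaling scheme are assumptions, not TPU details.

def quantize(values, bits=8):
    """Map floats to signed integers using one shared scale factor."""
    max_int = 2 ** (bits - 1) - 1                  # 127 for 8 bits
    largest = max(abs(v) for v in values) or 1.0   # avoid a zero scale
    scale = largest / max_int
    return [round(v / scale) for v in values], scale


def quantized_dot(a, b, bits=8):
    """Approximate dot(a, b) using integer multiply-accumulate."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))       # integer arithmetic only
    return acc * scale_a * scale_b                 # rescale once at the end


weights = [0.12, -0.85, 0.40, 0.03]
inputs = [1.50, 0.25, -0.75, 2.00]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"exact={exact:.4f} approx={approx:.4f}")
```

The two results differ slightly. That small error is the price of the cheaper integer arithmetic, and it is the kind of imprecision that machine learning models can often tolerate.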

TPU is an example of how fast a company can turn research into practice — from first tested silicon, the team had them up and running applications at speed in their data centers within 22 days.

TPUs already power many applications at Google, including RankBrain, used to improve the relevancy of search results, and Street View, used to improve the accuracy and quality of its maps and navigation. AlphaGo was powered by TPUs in the matches against Go world champion Lee Sedol, enabling it to “think” much faster and look farther ahead between moves.

Server racks with TPUs used in the AlphaGo matches with Lee Sedol

Google’s goal has always been pretty simple: to lead the industry on machine learning and make that innovation available to its customers. Building TPUs into their infrastructure stack will allow them to bring the power of Google to developers across software like TensorFlow and Cloud Machine Learning with advanced acceleration capabilities.

And the battle is on! Just a few days ago Amazon made its leap into open-source technology by unveiling its machine-learning software DSSTNE, which competes directly with TensorFlow. According to Amazon’s press release, DSSTNE (which stands for Deep Scalable Sparse Tensor Network Engine and is pronounced “Destiny”) excels in situations where there isn’t a lot of data to train the machine-learning system, whereas TensorFlow is geared for handling tons of data.

And Doug Marsch, my “go to guy” on chip technology over at RSA, says DSSTNE is also faster than TensorFlow, and agrees with Amazon’s claim that it runs at up to 2.1 times the speed in low-data situations.

Note: according to Doug, the software grew out of Amazon’s need to make recommendations on its retail platform, which required the company to develop neural network programs. However, Amazon doesn’t always have a lot of data to work from when making those recommendations. Amazon’s system can achieve those speeds in part due to its multi-GPU capabilities. Unlike other open-source “deep learning” programs, DSSTNE can automatically distribute its workload across many GPUs without the speed or accuracy tradeoffs that often come with training across multiple machines.

In fact, the DSSTNE FAQ says:

“This means being able to build recommendations systems that can model ten million unique products instead of hundreds of thousands. For problems of this size, other packages would need to revert to CPU computation for the sparse data, decreasing performance by about an order of magnitude.”

One interesting note: Amazon said it is releasing the software as an open-source project to help machine learning grow beyond the speech and language recognition that many companies are focusing on, and into areas like search and recommendations. They hope that “researchers around the world can collaborate to improve it. But more importantly, we hope that it spurs innovation in many more areas.”