2 April 2016 – I was reading a few obits on Andrew Grove, who passed away two weeks ago, and came across the photo above, an iconic shot of him with Steve Jobs. Grove helped make Intel a household name in computing, and he played a key role in the company's shift in focus from memory chips to microprocessors.

In my travel bag (I was in Rome for two weeks on a combined business/pleasure trip), I found a wonderful assessment of the state of computing and of Gordon Moore's famed law in the Economist (Technology Quarterly, March 12th).

Moore was one of the founders of Intel. In 1971 it released the 4004, its first ever microprocessor. The chip, measuring 12 square millimetres, contained 2,300 transistors—tiny electrical switches representing the 1s and 0s that are the basic language of computers. The gap between each transistor was 10,000 nanometres (billionths of a metre) in size, about as big as a red blood cell. The result was a miracle of miniaturization, but still on something close to a human scale. A child with a decent microscope could have counted the individual transistors of the 4004.

My cousin was fortunate enough to work under Moore at the Fairchild research facility, and he heard Moore's early presentation on the trend he had observed in transistor density at a meeting of local engineers in Palo Alto. My cousin noted that, at the time, Moore wondered how all of these projected thousands (not billions) of transistors could possibly be used.

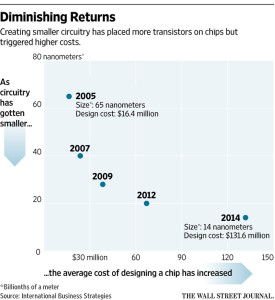

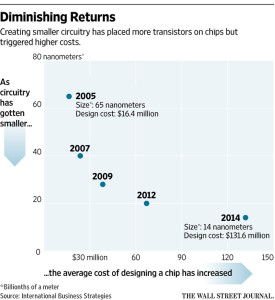

I think the Economist piece may have understated the cost-per-transistor trend. In the past, shrinking transistor geometries, augmented by ever-larger wafer diameters, drove the cost of chips ever lower and their functionality ever higher, as predicted by the self-fulfilling trajectory of Moore's law. But a graphic from the Wall Street Journal, which plots the number of transistors that one dollar buys, illustrates both the incredible cost reduction we experienced until about 2012, when the curve peaks, and the rise in cost per transistor since then.

The electronic revolution has been fueled by the low cost of memory and microprocessor chips, because cheap chips made it cost-effective to build applications that were previously inconceivable.

Although, as the Economist piece suggests, clever programming and specialized chip designs can still deliver some interesting products, the main driver of cost reduction, ever-shrinking transistors, will no longer be available, and this will undoubtedly dampen the future rate of electronic innovation. Hence the push into quantum physics and quantum computing.