Guest blogger:

Eric De Grasse

Chief Technology Officer

Project Counsel Media

26 March 2019 (San Francisco, CA) — This week I am out in San Francisco for EmTech Digital, the annual “all-things-AI” event sponsored by MIT Technology Review. It covers a whirlwind of topics, so I will focus on just one that I thought our general audience might fancy: how malevolent machine learning could derail AI.

The presentation was given by Dawn Song, a UC Berkeley professor who studies the security risks of AI, and she warned of new techniques that can manipulate machine-learning systems.

Note: regular readers will probably recognise her name. She is part of the Berkeley Artificial Intelligence Research Lab, and Greg and I often quote her work. Her research covers deep learning and program synthesis and analysis; secure deep learning and artificial intelligence; computer security; privacy; and applied cryptography. Her “big thing” is using program analysis, algorithm design, and machine learning for security and privacy.

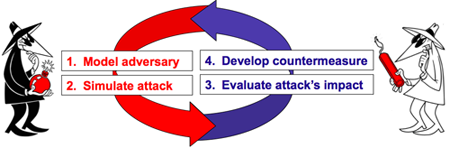

What she was addressing is known as “adversarial machine learning”. Some of its methods involve experimentally feeding input into an algorithm to reveal the information it was trained on, while others involve distorting the input in a way that causes the system to “misbehave”.
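For readers who want to see what “distorting the input” means mechanically, here is a minimal sketch of one well-known distortion attack, the fast gradient sign method (not necessarily the technique Song's group used). It assumes a hypothetical three-feature logistic-regression model: each input feature is nudged by a fixed step `eps` in whichever direction increases the model's loss on the true label, which is enough to flip the prediction.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model with weights w, bias b."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: step each feature by eps along the sign of
    the gradient of the cross-entropy loss with respect to the input."""
    p = predict(w, b, x)
    # For logistic regression with cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical model and input, correctly classified as class 1.
w, b = [2.0, -1.0, 0.5], -0.2
x, y = [0.6, 0.1, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.4)
print(f"clean: {predict(w, b, x):.3f}  adversarial: {predict(w, b, x_adv):.3f}")
# prints: clean: 0.750  adversarial: 0.426
```

Against image classifiers the same step is applied to every pixel, with `eps` kept small enough that the change is barely visible to a human, which is what makes such attacks hard to spot.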

Song presented several examples of adversarial-learning trickery that her research group has explored. In one project, conducted in collaboration with Google, the group tricked machine-learning algorithms that auto-generate email responses into spitting out sensitive data such as credit card numbers. Google then used those findings to prevent Smart Compose (the tool that auto-generates text in Gmail) from being exploited.
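How do researchers tell whether a text generator has memorised a secret? One approach used in memorisation research is to plant a “canary” secret in the training data, then rank it against every other string of the same format by how likely the trained model finds it; a rank-based “exposure” score is log2 of the number of candidates minus log2 of the canary's rank. The sketch below assumes that definition and uses a toy character-bigram model as a stand-in for an email auto-completion model. The corpus, the PIN-style secret and all function names are hypothetical.

```python
import math
from collections import Counter
from itertools import product

DIGITS = "0123456789"

def train_bigram(corpus, alphabet):
    """Count character bigrams: a toy stand-in for a text-generation model."""
    pair_counts, first_counts = Counter(), Counter()
    for text in corpus:
        for a, b in zip(text, text[1:]):
            pair_counts[(a, b)] += 1
            first_counts[a] += 1
    vocab = set(alphabet).union(*corpus)
    return pair_counts, first_counts, len(vocab)

def log_perplexity(model, text):
    """Negative log2-likelihood of text under an add-one-smoothed bigram model."""
    pair_counts, first_counts, v = model
    return -sum(math.log2((pair_counts[(a, b)] + 1) / (first_counts[a] + v))
                for a, b in zip(text, text[1:]))

def exposure(model, prefix, secret, alphabet, length):
    """log2(#candidates) - log2(rank of secret); higher means more memorised."""
    scores = {}
    for combo in product(alphabet, repeat=length):
        candidate = "".join(combo)
        scores[candidate] = log_perplexity(model, prefix + candidate)
    # Rank 1 means no candidate is more likely than the secret.
    rank = 1 + sum(1 for s in scores.values() if s < scores[secret])
    return math.log2(len(scores)) - math.log2(rank)

# Hypothetical training mail containing a planted "canary" secret.
corpus = ["my pin is 1234"] * 3 + ["see you at the meeting"]
model = train_bigram(corpus, DIGITS)
print(round(exposure(model, "my pin is ", "1234", DIGITS, 4), 2))  # 13.29: ranked 1st of 10,000
```

Comparing the canary's exposure with that of a string the model never saw, such as "9999", shows the gap that signals memorisation.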

Note to our e-discovery readers: the researchers used the Enron e-mail data set, which is increasingly popular for this type of research because it is a “real-world” data set. It is also well suited to black-box experiments, in which the attacker can only query the model, that force a deep-learning classifier to misclassify a text input. But nothing about these techniques is specific to Enron: they can be turned against models trained on any database. Machine learning models are powerful but fallible. Despite the surge in their popularity, a growing body of research demonstrates that many models are vulnerable to adversarial examples, and the Enron set works well for showing that.
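A black-box attack on a text classifier needs nothing more than the scores the classifier returns. Here is a minimal sketch, assuming a hypothetical keyword-weighted “spam” scorer as a stand-in for a deep-learning classifier and a hand-written synonym table in place of the thesaurus or word embeddings a real attack would use. The attacker repeatedly makes the single word swap that lowers the score most, until the text falls below the spam threshold.

```python
# Hypothetical "remote" classifier: the attacker may call score() but cannot see inside it.
_WEIGHTS = {"free": 1.5, "winner": 2.0, "credit": 1.0, "urgent": 1.2}
THRESHOLD = 2.0  # scores above this are labelled "spam"

def score(text):
    """Black-box spam score; only the returned number is visible to the attacker."""
    return sum(_WEIGHTS.get(tok, 0.0) for tok in text.lower().split())

# Hypothetical synonym table; real attacks draw candidates from embeddings or a thesaurus.
SYNONYMS = {"free": ["complimentary"], "winner": ["recipient"], "urgent": ["time-sensitive"]}

def greedy_attack(text, threshold=THRESHOLD):
    """Swap one word at a time, always choosing the swap that lowers the score most."""
    tokens = text.split()
    while score(" ".join(tokens)) > threshold:
        current = score(" ".join(tokens))
        best_drop, best_tokens = 0.0, None
        for i, tok in enumerate(tokens):
            for sub in SYNONYMS.get(tok.lower(), []):
                candidate = tokens[:i] + [sub] + tokens[i + 1:]
                drop = current - score(" ".join(candidate))
                if drop > best_drop:
                    best_drop, best_tokens = drop, candidate
        if best_tokens is None:
            return None  # no available substitution lowers the score: attack fails
        tokens = best_tokens
    return " ".join(tokens)

original = "URGENT winner claim your free prize"
adversarial = greedy_attack(original)
print(original, "->", adversarial)
```

Here the attack swaps “winner”, then “free”, and stops once the message slips under the threshold. Published attacks also check that each substitution preserves the meaning of the text; this toy skips that step.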

In another example, Song showed a video demo of how a few innocuous-looking stickers could trick a self-driving car’s AI into misreading a stop sign as a 45-miles-per-hour speed-limit sign. She noted that automated driving systems that rely on this kind of AI perception still have huge problems to solve.

As our cyber security listserv members know, adversarial machine learning is drawing growing attention from machine-learning researchers, in part because it is of huge interest to the defense community. Many military systems, including sensing and weapons systems, harness machine learning, so there is huge potential for these techniques to be used both defensively and offensively.

If you really want to get into the weeds of adversarial machine learning, a Google chap passed along this link.