- “Cyber War” panel

- Shirley Ann Jackson, President, Rensselaer Polytechnic Institute (RPI)

- Michael Gregoire, Chief Executive Officer, CA Technologies

- Moises Naim, Distinguished Fellow, Carnegie Endowment for International Peace

- Thomas E. Donilon, Vice-Chair, O’Melveny & Myers LLP

- There was an introductory discussion on how few of us understand the “architecture within which we live”. We have moved into an “internet singularity” where most of us are connected and living off a totally man-made system. Panelists discussed how that architecture is:

- algorithmically-driven

- subject to an almost uncontrollable connectivity, and

- now subject to an exponential growth in cyber threat avenues

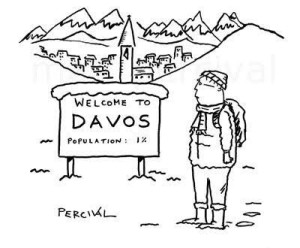

- No country has even come close to the U.S. in harnessing the power of computer networks to create and share knowledge, produce economic goods, and intermesh private and government computing infrastructure. That same reach has left the U.S. as the technology ecosystem most vulnerable to those who can steal, corrupt, harm, and destroy public and private assets, often at an unfathomable pace.

- The continuing issue which will be most difficult to overcome: the “capitalistic mindset” – get to the market quickly, in the least expensive fashion, building functionality first, security second.

- The overriding fear of the panel: the barriers to acquiring these technologies are non-existent. Consider the three “Horsemen of the Apocalypse”:

- IEDs (improvised explosive devices)

- drones

- cyber

- On a point mirrored in an earlier session, the intel community notes that most cyberterrorist attacks are not very sophisticated, yet they have been highly effective. Most have been committed by non-state actors targeting critical infrastructure systems. Nation-state actors, meanwhile, are attempting to gain a tactical advantage by sabotaging military and critical infrastructure systems.

- The U.S. role: a sore point. Yes, the U.S. invented the internet and probably has the most expert people and companies to deal with cyber security. But now you have a U.S. president who “hoped Russian intelligence services had successfully hacked Hillary Clinton’s email” and who encouraged them to “publish whatever they may have stolen”, essentially urging a foreign adversary to conduct cyberespionage against a former secretary of state. That’s the guidance we need? And as one panel member said: “We are also at the dawn of a U.S. administration with many supporters who’ve shown a loose regard for ‘facts,’ to put it charitably.”

- One interesting point: the economics of cyber attacks are skewed to favor the attacker: exploits are easily acquired and can be reused on multiple targets, and the likelihood of detection and punishment is low. Companies and governments are engaged in an increasingly difficult battle against persistent and agile cyber adversaries. Improved information sharing is critical. BUT … companies will not share due to legal restrictions. They need a “safe harbor” to address outstanding legal limitations on sharing and companies’ concern that sharing cyber threat information could expose them to civil and criminal liability for disclosing sensitive personal or business information.
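The asymmetry can be made concrete with a toy expected-value model. Every parameter here is a hypothetical number chosen only for illustration, not a figure from the panel: the exploit cost, the gain per compromised target, the success and punishment probabilities, and the penalty. The point is structural. The exploit is paid for once and then reused, so revenue grows with the number of targets. When punishment is unlikely, the expected penalty stays small.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    # All fields are hypothetical illustration parameters.
    exploit_cost: float      # one-time cost to acquire the exploit
    gain_per_success: float  # value extracted from each compromised target
    p_success: float         # chance the exploit works on a given target
    p_punished: float        # chance the attacker is caught and punished
    penalty: float           # cost to the attacker if punished


def expected_profit(c: Campaign, n_targets: int) -> float:
    """Toy model: the exploit is bought once but reused on every target."""
    revenue = n_targets * c.p_success * c.gain_per_success
    return revenue - c.exploit_cost - c.p_punished * c.penalty


if __name__ == "__main__":
    # Made-up numbers, chosen only to show how profit scales with reuse.
    c = Campaign(exploit_cost=10_000, gain_per_success=500, p_success=0.05,
                 p_punished=0.01, penalty=1_000_000)
    for n in (100, 1_000, 10_000):
        print(n, expected_profit(c, n))
```

With these made-up inputs, the campaign loses money against 100 targets but turns a profit against 1,000. The profit keeps scaling from there, because reusing the exploit costs the attacker nothing extra.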

- “Weaponized narrative”

There was a session I could not attend in person, but I watched it from the closed-circuit media room. It was about “hybrid warfare”, defined as a “wide range of overt and covert military, paramilitary, and civilian measures employed in a highly integrated design”. The focus was, obviously, the U.S. elections and Russia’s alleged involvement in influencing the result.

- Weaponized narrative seeks to undermine an opponent’s civilization, identity, and will by generating complexity, confusion, and political and social schisms. It can be used tactically, as part of explicit military or geopolitical conflict; or strategically, as a way to reduce, neutralize, and defeat a civilization, state, or organization. Done well, it limits or even eliminates the need for armed force to achieve political and military aims.

- The efforts to muscle into the affairs of the American presidency, Brexit, Ukraine, the Baltics, and NATO reflect a shift to a “post-factual” political and cultural environment that is vulnerable to weaponized narrative.

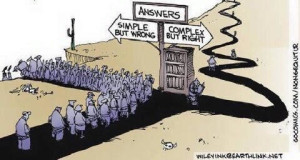

- One fundamental underpinning – often overlooked – is the accelerating volume and velocity of information. Cultures, institutions, and individuals are, among many other things, information-processing mechanisms. As they become overwhelmed with information complexity, the tendency to retreat into simpler narratives becomes stronger.

- The speed of upheaval in our lives is unprecedented, and the void it leaves will be filled by something. We are desperate for something to hang on to.

- By offering cheap passage through a complex world, weaponized narrative furnishes emotional certainty at the cost of rational understanding. The emotionally satisfying decision to accept a weaponized narrative – to believe, to have faith – inoculates cultures, institutions, and individuals against counterarguments and inconvenient facts.

- And there was a wonderful exposition on how the Chinese government has an army of people who surreptitiously insert huge numbers of pseudonymous and other deceptive writings into the stream of real social media posts to regularly distract the public and change the subject on policy moves.

- Oh, and the “economics” of fake news? One presenter noted that fake news challenges traditional news organizations because:

- it spreads faster

- it’s easier to create

- it makes a lot of money

My editorial note: It’s a self-reinforcing loop. As one panel and the briefing notes make evident, this process was clear in Ukraine, in Brexit, in the creation of alt-right and other far-right and far-left communities in many countries, and in the American presidential election. All of these campaigns combine indigenous factors with known or suspected Russian deployment of weaponized narrative, achieving significant benefits for Russia with low risk of conventional military responses by the West. Indeed, the response by America, NATO, and European states has been confused, sporadic, and ineffective.

- Machine learning in cyber security

- The issues are easy to state. We have reams of data being generated and transferred over networks. Cybersecurity experts have a hard time monitoring everything that gets exchanged, so potential threats can easily go unnoticed. The initial answer was “hire more security experts”, which did offer a temporary reprieve. But as McKinsey pointed out, the cybersecurity industry is already dealing with a widening talent gap, and organizations and firms are hard-pressed to fill vacant security posts.

- So somebody thought the solution might lie in machine learning. And to no surprise, the topic is being hotly debated by security professionals, with strong arguments on both ends of the spectrum.

- Many call machine learning the pipe dream of cybersecurity, arguing that “there’s no silver bullet in security.” What backs up this argument is the fact that in cybersecurity, you’re always up against some of the most devious minds, people who already know very well how machines and machine learning work and how to circumvent their capabilities. Many attacks are carried out through minuscule and inconspicuous steps, often disguised as legitimate requests and commands.

- BUT … some argue that machine learning is cybersecurity’s answer to detecting advanced breaches, and that it will shine in securing IT environments as they “grow increasingly complex”, as “more data is being produced than the human brain has the capacity to monitor”, and as it becomes nearly impossible “to gauge whether activity is normal or malicious.” These proponents are quick to note that AI is not yet ready to replace humans, but it can boost human efforts by automating pattern recognition, which is helpful in cyber security.

- The main argument against security solutions powered by unsupervised machine learning is that they churn out too many false positives and alerts, resulting in alert fatigue and analysts who grow desensitized to warnings.
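Much of the alert-fatigue complaint comes down to base-rate arithmetic. The sketch below uses hypothetical figures:

- 10 million events a day.
- 1 in 100,000 events is malicious.
- The detector catches 90% of attacks.
- The detector misfires on 1% of benign events.

It shows that a detector which sounds accurate can still bury the real alerts under false ones.

```python
def alert_breakdown(events_per_day, attack_rate, detection_rate, false_positive_rate):
    """Return (true alerts, false alerts, precision) for one day of events."""
    malicious = events_per_day * attack_rate
    benign = events_per_day - malicious
    true_alerts = malicious * detection_rate           # attacks correctly flagged
    false_alerts = benign * false_positive_rate        # benign events wrongly flagged
    precision = true_alerts / (true_alerts + false_alerts)  # share of alerts that are real
    return true_alerts, false_alerts, precision


if __name__ == "__main__":
    # Hypothetical inputs, not measurements.
    tp, fp, precision = alert_breakdown(10_000_000, 1e-5, 0.90, 0.01)
    print(f"true alerts: {tp:.0f}, false alerts: {fp:.0f}, precision: {precision:.4f}")
```

With those inputs, roughly 90 true alerts arrive alongside roughly 100,000 false ones, so fewer than 1 alert in 1,000 is real.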

- Much discussed was MIT’s Computer Science and Artificial Intelligence Lab (CSAIL), which has led one of the most notable efforts in this regard: a system called AI2, an adaptive cybersecurity platform that uses machine learning and the assistance of expert analysts to adapt and improve over time. The system, which takes its name from the combination of artificial intelligence and analyst intuition, reviews data from tens of millions of log lines each day and singles out anything it finds suspicious. The filtered data is then passed on to a human analyst, who provides feedback to AI2 by tagging legitimate threats. Over time, the system fine-tunes its monitoring and learns from its mistakes and successes, eventually becoming better at finding real breaches and reducing false positives. Bottom line: it shows the analyst only 100 to 200 events per day. That is considerably fewer than the tens of thousands of security events that cybersecurity experts otherwise have to deal with every day.
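The feedback loop described above can be sketched roughly as follows. This is a toy illustration, not MIT's code, and it rests on several assumptions:

- Events have two numeric features.
- The unsupervised stage scores each event by its standardized distance from the day's mean.
- A hypothetical `analyst` function stands in for the human.
- A nearest-centroid classifier serves as the supervised model, chosen only for brevity.

Each day the analyst sees only the top `k` events.

```python
import math
import random


def fit_stats(events):
    """Per-feature mean and standard deviation of a batch of events."""
    n, dims = len(events), len(events[0])
    mean = [sum(e[d] for e in events) / n for d in range(dims)]
    std = [math.sqrt(sum((e[d] - mean[d]) ** 2 for e in events) / n) or 1.0
           for d in range(dims)]
    return mean, std


def centroid(points):
    return [sum(p[d] for p in points) / len(points) for d in range(len(points[0]))]


def rank_events(events, labeled):
    """Rank by outlier score until both classes have analyst labels,
    then by a nearest-centroid model trained on those labels."""
    attacks = [e for e, is_attack in labeled if is_attack]
    benign = [e for e, is_attack in labeled if not is_attack]
    if attacks and benign:
        ca, cb = centroid(attacks), centroid(benign)
        score = lambda e: math.dist(e, cb) - math.dist(e, ca)  # closer to attacks = higher
    else:
        mean, std = fit_stats(events)
        score = lambda e: math.sqrt(sum(((x - m) / s) ** 2
                                        for x, m, s in zip(e, mean, std)))
    return sorted(events, key=score, reverse=True)


def run_loop(days, analyst, k=5):
    """Each day: rank events, show the top k to the analyst, keep the labels."""
    labeled, hits = [], []
    for events in days:
        shown = rank_events(events, labeled)[:k]
        feedback = [(e, analyst(e)) for e in shown]
        labeled.extend(feedback)
        hits.append(sum(is_attack for _, is_attack in feedback))
    return hits


def make_day(rng, n_benign=500, n_odd=10, n_attack=3):
    """Hypothetical traffic: normal events, rare-but-legitimate outliers, and attacks."""
    benign = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_benign)]
    odd = [(rng.gauss(-6, 1), rng.gauss(0, 1)) for _ in range(n_odd)]
    attack = [(rng.gauss(4, 0.5), rng.gauss(4, 0.5)) for _ in range(n_attack)]
    return benign + odd + attack


if __name__ == "__main__":
    rng = random.Random(0)
    days = [make_day(rng) for _ in range(5)]
    # Hypothetical analyst: anything with both features above 2 is an attack.
    print(run_loop(days, analyst=lambda e: e[0] > 2 and e[1] > 2))
```

At first, a cluster of unusual but legitimate events competes with the real attacks for the analyst's attention. Once the analyst has labeled examples of both kinds, the learned model ranks the attacks at the top of the short list.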

- Finnish security vendor F-Secure is another firm that has placed its bets on combining human and machine intelligence in its most recent cybersecurity efforts, with the aim of reducing the time it takes to detect and respond to cyberattacks. On average, it takes organizations several months to discover a breach; F-Secure wants to cut that to 30 minutes with its Rapid Detection Service.

- I was a bit lost in some of the mechanics of all this tech (note to self: reread this weekend), but it seems the data are fed to threat intelligence and behavioral analytics engines. These use machine learning to classify the incoming samples, determine normal behavior, and identify outliers and anomalies. The system uses near-real-time analytics to identify known security threats, stored-data analytics to compare samples against historical data, and big data analytics to identify evolving threats through anonymized datasets gathered from a vast number of clients.
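The “determine normal behavior and identify outliers” step can be illustrated with the simplest possible baseline. Learn the mean and spread of one metric from history, then flag new values that sit too many standard deviations away. The metric (hourly logins for one account), the sample numbers, and the 3-sigma threshold are all hypothetical. Production behavioral analytics engines, F-Secure's included, presumably use far richer models than this.

```python
import statistics


def learn_baseline(history):
    """Summarize 'normal' behavior for one metric as mean and population std dev."""
    return statistics.mean(history), statistics.pstdev(history)


def flag_anomalies(history, new_samples, threshold=3.0):
    """Return (index, value) for new samples more than `threshold` std devs from normal."""
    mean, std = learn_baseline(history)
    std = std or 1e-9  # avoid division by zero when history is constant
    return [(i, x) for i, x in enumerate(new_samples)
            if abs(x - mean) / std > threshold]


if __name__ == "__main__":
    # Hypothetical hourly login counts for one account.
    history = [4, 5, 6, 5, 4, 6, 5, 5]
    print(flag_anomalies(history, [5, 6, 40, 4]))
```

Here only the burst of 40 logins is flagged; the ordinary hour-to-hour wobble is not.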

- One presenter provided a bit of background. One of the first machine learning algorithms, the artificial neural network, was invented in the 1950s. Interestingly, at that time it was thought the algorithm would quickly lead to the creation of “strong” artificial intelligence: intelligence capable of thinking, understanding itself, and solving tasks beyond those it was programmed for. Then there is so-called weak AI, which can solve some creative tasks: recognize images, predict the weather, play chess, etc. Now, 60 years later, we understand much better that creating true AI will take many more years, and that what today is referred to as artificial intelligence is in fact machine learning.
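The best-known neural network from that era is Frank Rosenblatt's perceptron (1958). Below is a minimal sketch of its learning rule, trained on the logical AND function as a stand-in task. Whenever a prediction is wrong, the weights are nudged toward the correct answer. A single perceptron can only learn linearly separable patterns, a limitation made famous by Minsky and Papert's 1969 book Perceptrons.

```python
def predict(w, b, x):
    """Fire (1) if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights only on mistakes."""
    w, b = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in samples:              # y is 0 or 1
            err = y - predict(w, b, x)    # +1, 0, or -1
            if err:
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                mistakes += 1
        if mistakes == 0:                 # converged on the training set
            break
    return w, b


if __name__ == "__main__":
    # Logical AND: a toy, linearly separable task.
    samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    w, b = train_perceptron(samples)
    print(w, b, [predict(w, b, x) for x, _ in samples])
```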

- Kaspersky Lab presenter:

- Yes, it’s true: for some spheres where machine learning is used, there are a few ready-made algorithms. These spheres include facial and emotion recognition, or distinguishing cats from dogs. In these and many other cases, someone has done a lot of thinking, identified the necessary features, selected an appropriate mathematical tool, set aside the necessary computing resources, and then made all their findings publicly available.

- This creates the false impression that ready-made algorithms already exist for malware detection too. That is not the case. Kaspersky has spent more than 10 years developing and patenting a number of technologies, because there is a conceptual difference between malware detection and facial recognition. Quoting from a Kaspersky paper:

“Faces will remain faces – nothing is going to change in that respect. In the majority of spheres where machine learning is used, the objective is not changing with time, while in the case of malware things are changing constantly and rapidly. That’s because cybercriminals are highly motivated people (money, espionage, terrorism…). Their intelligence is not artificial; they are actively combating and intentionally modifying malicious programs to get away from the trained model.”
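The problem the quote describes is often called concept drift, and an adversary can cause it deliberately. The toy sketch below assumes a single hypothetical numeric feature (say, a suspiciousness score) and a one-line threshold classifier; the sample values are invented. A threshold fit on older malware catches all of it. It then misses most of a variant that the attacker has tuned to score lower, until the model is retrained on the new samples.

```python
def fit_threshold(benign, malicious):
    """Decision threshold halfway between the mean benign and mean malicious scores."""
    return (sum(benign) / len(benign) + sum(malicious) / len(malicious)) / 2


def flagged_fraction(threshold, samples):
    """Fraction of samples scoring above the threshold (i.e. flagged as malicious)."""
    return sum(x > threshold for x in samples) / len(samples)


if __name__ == "__main__":
    benign = [1, 2, 2, 3, 3, 4]        # hypothetical scores of clean files
    old_malware = [8, 9, 9, 10, 11]    # samples the model was trained on
    evolved = [4, 5, 5, 6, 7]          # attacker modified samples to score lower

    t_old = fit_threshold(benign, old_malware)
    print("old model on old malware:", flagged_fraction(t_old, old_malware))
    print("old model on evolved malware:", flagged_fraction(t_old, evolved))

    t_new = fit_threshold(benign, evolved)
    print("retrained model on evolved malware:", flagged_fraction(t_new, evolved))
    print("retrained model on clean files:", flagged_fraction(t_new, benign))
```

The retrained threshold catches the evolved samples again, but it now also flags one clean file. That is a small example of why such a model has to be maintained continually rather than trained once.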

Conclusion? Different tools need to be used in different situations. Multi-level protection is more effective than a single level, and more effective tools should not be neglected just because they are “out of fashion.”
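The multi-level argument has a simple probabilistic core. This sketch explicitly assumes that the layers fail independently, which is a strong simplification. Under that assumption, a threat slips through only if every layer misses it. The combined detection rate is therefore one minus the product of the per-layer miss rates. The layer rates below are hypothetical. In practice, layers often share blind spots, so real gains are smaller than this idealized figure.

```python
from math import prod


def combined_detection(layer_rates):
    """P(at least one layer detects a threat), assuming independent layers."""
    miss_all = prod(1 - p for p in layer_rates)  # every layer must miss
    return 1 - miss_all


if __name__ == "__main__":
    # Hypothetical per-layer detection rates.
    print(f"{combined_detection([0.9]):.3f}")
    print(f"{combined_detection([0.9, 0.7, 0.5]):.3f}")
```

In this idealized model, adding two individually weaker layers (70% and 50%) lifts a 90% detector to about 98.5%.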